The Fascinating Mystery Around the BOAT Gamma-Ray Burst

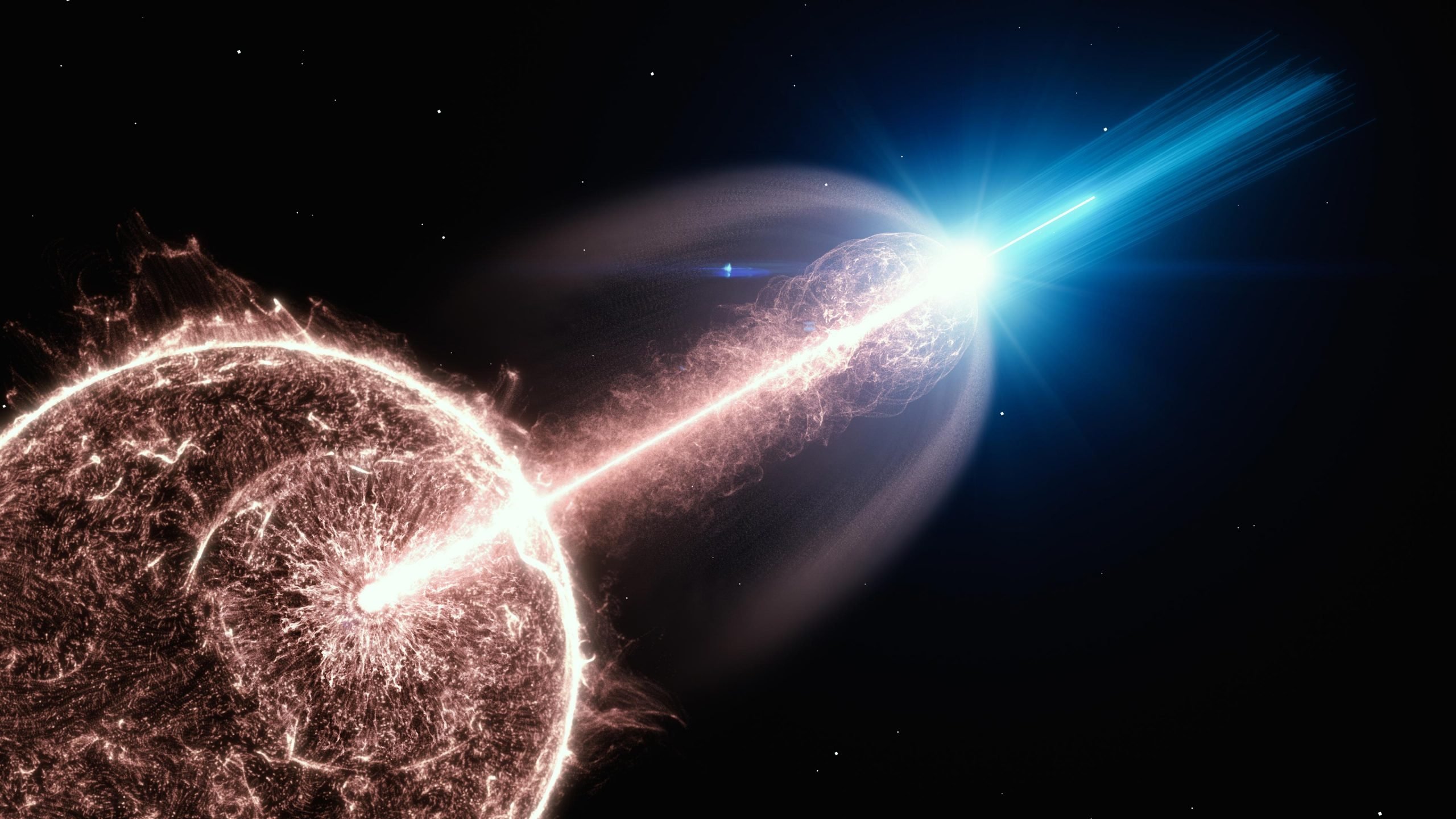

In October 2022, the universe’s canvas was pierced by a blinding flash, brighter than any gamma-ray burst humanity had previously observed. This burst, formally designated GRB 221009A but quickly dubbed the “BOAT” (Brightest of All Time), sent shockwaves through the scientific community, prompting intense study and leaving astronomers around the world marveling. The magnitude of the BOAT was nothing short of extraordinary: in just a few seconds, it released more energy than our Sun will emit over its entire lifetime.
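The comparison with the Sun rests on a simple back-of-the-envelope estimate. This sketch assumes the Sun's nominal present-day luminosity (about 3.828 × 10^26 W) stays constant over a main-sequence lifetime of roughly 10 billion years; both are standard round figures, and constant luminosity is a simplification.

```python
# Back-of-the-envelope estimate of the Sun's total lifetime energy output.
# Assumption: constant luminosity over a ~10-billion-year lifetime (a simplification).

SOLAR_LUMINOSITY_W = 3.828e26          # nominal solar luminosity, watts
SECONDS_PER_YEAR = 365.25 * 86_400     # Julian year in seconds
SUN_LIFETIME_YEARS = 1.0e10            # rough main-sequence lifetime, years


def lifetime_energy_joules(luminosity_w: float, lifetime_years: float) -> float:
    """Total energy radiated at constant luminosity over the given lifetime."""
    return luminosity_w * lifetime_years * SECONDS_PER_YEAR


if __name__ == "__main__":
    e_joules = lifetime_energy_joules(SOLAR_LUMINOSITY_W, SUN_LIFETIME_YEARS)
    e_erg = e_joules * 1e7             # 1 J = 10^7 erg, the unit common in GRB papers
    print(f"Sun's lifetime output: {e_joules:.2e} J ({e_erg:.2e} erg)")
```

The result is on the order of 10^44 J (10^51 erg), so any burst whose isotropic-equivalent energy exceeds that figure outshines the Sun's whole lifetime. Note that "isotropic-equivalent" assumes the burst radiates equally in all directions, an assumption the jet geometry discussed later calls into question.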

From my own experience with astronomy through various amateur projects, including building custom CCD cameras with friends back in Upstate New York, I understand how unfathomable such an event seems. Our telescopes and sensors have caught their fair share of fascinating phenomena, but the BOAT took this to a new level. As such, it offers an invaluable opportunity to probe some of the most profound processes in physics.

The State of Gamma-Ray Bursts

Gamma-ray bursts have long fascinated scientists, offering glimpses into the violent deaths of stars. There are two primary categories of gamma-ray bursts:

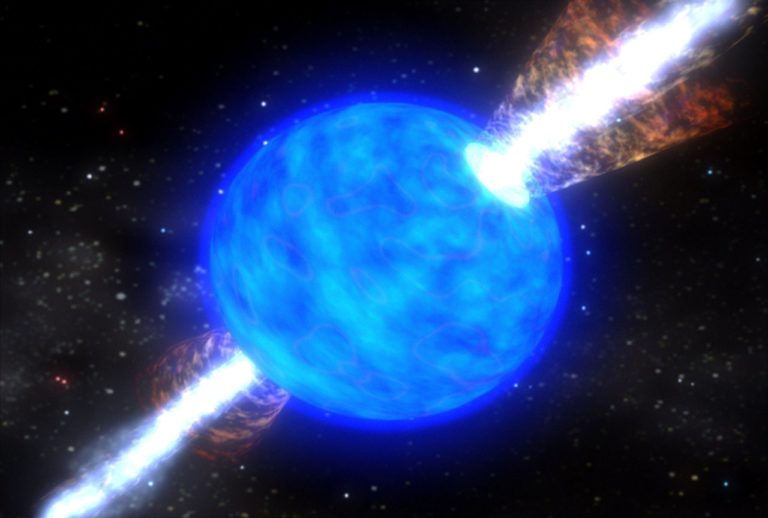

- Short Gamma-Ray Bursts: These last less than two seconds and are typically linked to the merger of two neutron stars or of a neutron star and a black hole.

- Long Gamma-Ray Bursts: These last anywhere from a few seconds to several minutes and are usually traced back to the collapse of massive stars, which then explode as supernovae.
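The two categories above are conventionally separated by duration. A minimal sketch, assuming the commonly used T90 measure (the time over which the middle 90% of a burst's photons arrive) and the two-second dividing line described above; real classifications also weigh spectral hardness and host-galaxy context, and a few bursts defy the simple cut.

```python
from enum import Enum


class GRBClass(Enum):
    SHORT = "short"   # typically compact-object mergers
    LONG = "long"     # typically collapse of massive stars


# Conventional dividing line between the two classes, in seconds.
T90_THRESHOLD_S = 2.0


def classify_by_duration(t90_seconds: float) -> GRBClass:
    """Classify a gamma-ray burst by its T90 duration alone (a simplification)."""
    if t90_seconds <= 0:
        raise ValueError("T90 must be positive")
    return GRBClass.SHORT if t90_seconds < T90_THRESHOLD_S else GRBClass.LONG


if __name__ == "__main__":
    for t90 in (0.3, 1.9, 2.0, 45.0, 600.0):
        print(f"T90 = {t90:>6.1f} s -> {classify_by_duration(t90).value}")
```

A duration cut is only a first pass: it sorts most bursts correctly, but the physical origin is ultimately confirmed by other evidence, such as an accompanying supernova.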

For decades, gamma-ray bursts have piqued interest within the astronomy community because they offer a window into cosmic processes that cannot be replicated on Earth. Studies suggest that their sources may also play a crucial role in creating heavy elements such as gold, silver, and platinum through rapid neutron-capture (r-process) nucleosynthesis.

What Made the BOAT Stand Out?

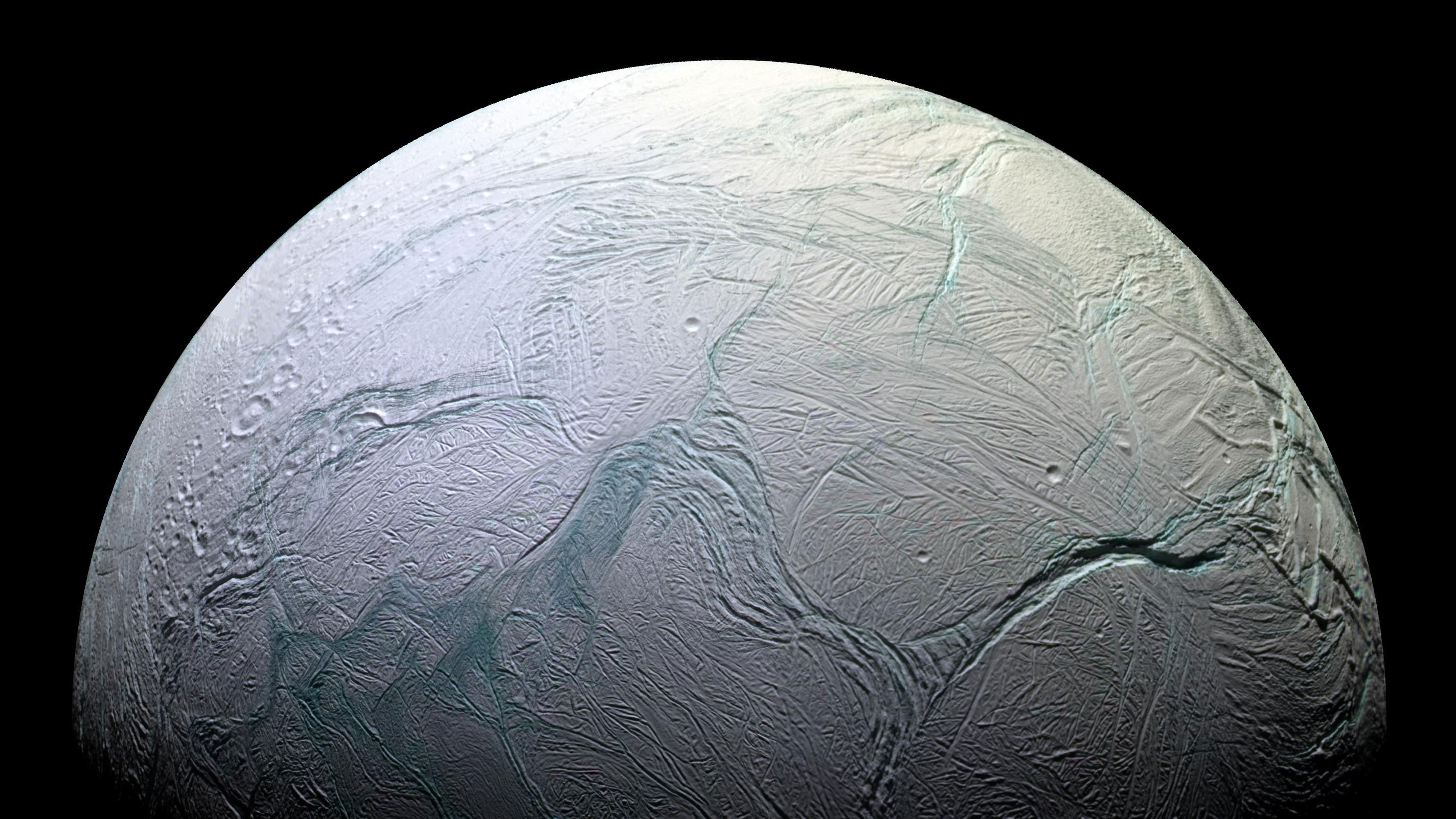

The BOAT wasn’t just another gamma-ray burst; it shattered every record in our collective scientific memory. Unlike typical gamma-ray bursts, which fade within minutes, this explosion remained detectable for nearly 10 hours. On top of that, it originated in the constellation Sagitta, a mere 2 billion light-years away (relatively speaking), which is remarkably close for a burst this powerful. Scientists estimate that a burst this bright reaches Earth only about once every 10,000 years. To put this in perspective, that is roughly as long as humans have been farming.
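"Once every 10,000 years" describes an average rate, not a schedule. If we assume such bursts arrive independently at a constant average rate (a Poisson process, which is an assumption of this sketch rather than something the estimate itself specifies), the chance of seeing at least one within a window of t years is 1 - exp(-t / 10,000):

```python
import math

# Estimated average interval between BOAT-like bursts, in years.
MEAN_INTERVAL_YEARS = 10_000


def prob_at_least_one(window_years: float,
                      mean_interval_years: float = MEAN_INTERVAL_YEARS) -> float:
    """Probability of at least one event in the window, assuming a Poisson process."""
    expected_events = window_years / mean_interval_years
    return 1.0 - math.exp(-expected_events)


if __name__ == "__main__":
    for years in (1, 100, 10_000):
        print(f"{years:>6} years: {prob_at_least_one(years):.4f}")
```

Under this assumption, a century of observing gives only about a 1% chance of catching such a burst, and even a full 10,000-year span gives about 63%, not a guarantee.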

But it wasn’t just the proximity that amazed scientists. The BOAT appeared roughly 70 times brighter than any previously recorded gamma-ray burst, a truly perplexing figure. Initially, researchers speculated that the burst might have come from the supernova of an extraordinarily massive star. However, follow-up observations showed that the accompanying supernova was rather ordinary, at least in terms of its brightness.

The Nature of the BOAT’s Gamma-Rays

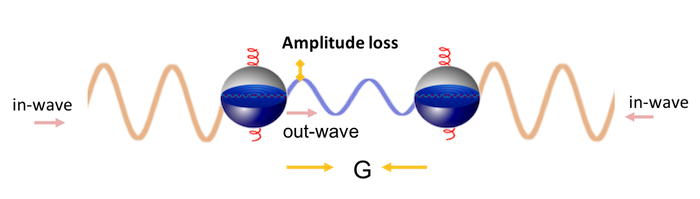

Astronomers trying to explain the unprecedented strength of the gamma rays look toward the geometry of the jets launched by the collapsing star. Specifically, they propose that we may have been looking almost straight down an unusually narrow jet, whose energy was concentrated into a small patch of sky that happened to include Earth, an effect known as beaming. Imagine the light from a flashlight versus that of a focused laser; the latter, while carrying the same total energy, appears much more intense.
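The flashlight-versus-laser analogy can be made quantitative. Astronomers often quote a burst's "isotropic-equivalent" energy, the energy it would have if it radiated equally in all directions. If the emission is really confined to two opposite jets of half-opening angle θ, the fraction of the sky they cover is 1 - cos θ, so the isotropic-equivalent figure overstates the true energy by the inverse of that fraction. This sketch assumes idealized uniform ("top-hat") jets, and the angles are illustrative, not measured values for the BOAT.

```python
import math


def beaming_fraction(half_angle_deg: float) -> float:
    """Fraction of the full sky covered by two opposite top-hat jets."""
    theta = math.radians(half_angle_deg)
    return 1.0 - math.cos(theta)


def isotropic_equivalent(true_energy: float, half_angle_deg: float) -> float:
    """Energy an observer inside the jet would infer by assuming isotropic emission."""
    return true_energy / beaming_fraction(half_angle_deg)


if __name__ == "__main__":
    true_energy = 1.0  # arbitrary units: the same total energy for both jets
    wide = isotropic_equivalent(true_energy, 5.0)    # the "flashlight"
    narrow = isotropic_equivalent(true_energy, 1.0)  # the "laser"
    print(f"5-degree jet: {wide:,.0f}x; 1-degree jet: {narrow:,.0f}x")
    print(f"Narrow jet appears {narrow / wide:.1f} times brighter")
```

Shrinking the half-angle from 5° to 1° makes the jet appear about 25 times brighter to an observer inside the beam, with no change in total energy; for small angles the boost scales roughly as 1/θ².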

In the case of the BOAT, the particle jets launched from the newly formed black hole appear to have been extraordinarily narrow, concentrating their output toward Earth and helping make the burst about 70 times brighter than any before it. Not only were these jets tightly focused, but the particles in them were moving at nearly the speed of light, which further amplified the brightness astronomers observed here on Earth. The event was intense enough to temporarily disturb our own planet’s ionosphere, something rarely seen from a cosmic source this far away.
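The extra amplification from near-light-speed motion comes from relativistic Doppler boosting. For material moving with Lorentz factor Γ (speed β = v/c) at angle θ to our line of sight, the Doppler factor is δ = 1 / (Γ(1 - β cos θ)); observed photon energies scale with δ, and observed brightness with a higher power of δ. The sketch uses Γ = 100 purely as an illustrative value in the range often inferred for GRB jets; it is not a measurement of the BOAT.

```python
import math


def doppler_factor(lorentz_factor: float, angle_rad: float) -> float:
    """Relativistic Doppler factor for an emitter moving at angle_rad to our line of sight."""
    if lorentz_factor < 1.0:
        raise ValueError("Lorentz factor must be >= 1")
    beta = math.sqrt(1.0 - 1.0 / lorentz_factor**2)  # speed as a fraction of c
    return 1.0 / (lorentz_factor * (1.0 - beta * math.cos(angle_rad)))


if __name__ == "__main__":
    gamma = 100.0  # illustrative value, not a BOAT measurement
    for angle in (0.0, 1.0 / gamma, 10.0 / gamma):
        print(f"angle = {angle:.3f} rad -> Doppler factor {doppler_factor(gamma, angle):.1f}")
```

Viewed head-on, δ is close to 2Γ; at an angle of 1/Γ it roughly halves, and at 10/Γ it drops to about 2. That steep fall-off is why seeing a fast, narrow jet almost straight on makes such a dramatic difference.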

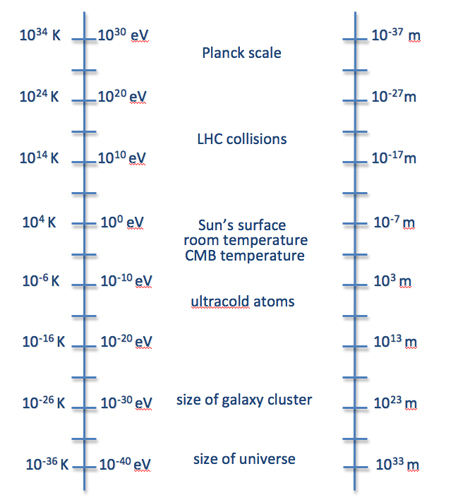

The Cosmological Implications: Heavy Elements and Dark Matter

The ramifications of studying the BOAT go well beyond gamma-ray astronomy. The event posed new challenges to the Standard Model of particle physics, largely because detectors recorded unexpectedly high-energy photons, reaching into the teraelectronvolt (TeV) range. Such photons seemed far too energetic to have survived a 2-billion-light-year journey: along the way, they should have collided with the extragalactic background light (the faint glow of starlight and dust emission that fills intergalactic space) and been destroyed by producing electron-positron pairs. One hypothesis suggests that some of these photons converted into hypothetical axion-like particles (candidate dark matter particles) along the way, traveled unimpeded, and converted back into photons once they entered our galaxy’s magnetic field. If confirmed, this would point to physics beyond the Standard Model and challenge our current understanding of particle physics.
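Why should TeV photons be absorbed at all? A gamma-ray photon of energy E can be destroyed by colliding with a lower-energy background photon of energy ε and creating an electron-positron pair, but only if enough energy is available to make the pair's rest mass. For a head-on collision the threshold is ε ≥ (m_e c²)² / E. The sketch evaluates that threshold for a few illustrative gamma-ray energies, ignoring cosmological redshift (which shifts energies by a factor of 1 + z) and non-head-on collisions.

```python
ELECTRON_REST_ENERGY_EV = 0.51099895e6   # m_e c^2 in electronvolts
HC_EV_M = 1.23984198e-6                  # h*c in eV*m, converts energy to wavelength


def threshold_target_energy_ev(gamma_energy_tev: float) -> float:
    """Minimum background-photon energy (eV) for head-on pair production."""
    gamma_energy_ev = gamma_energy_tev * 1e12
    return ELECTRON_REST_ENERGY_EV**2 / gamma_energy_ev


def wavelength_um(photon_energy_ev: float) -> float:
    """Photon wavelength in micrometres for a given energy in eV."""
    return HC_EV_M / photon_energy_ev * 1e6


if __name__ == "__main__":
    for e_tev in (1.0, 10.0):  # illustrative gamma-ray energies, in TeV
        eps = threshold_target_energy_ev(e_tev)
        print(f"{e_tev:>5.1f} TeV photon: target >= {eps:.3f} eV "
              f"(wavelength <= {wavelength_um(eps):.1f} um)")
```

Multi-TeV photons can therefore pair-produce on infrared background light, which pervades intergalactic space; that is why photons at these energies arriving from 2 billion light-years away are so puzzling.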

The BOAT’s Link to Element Formation

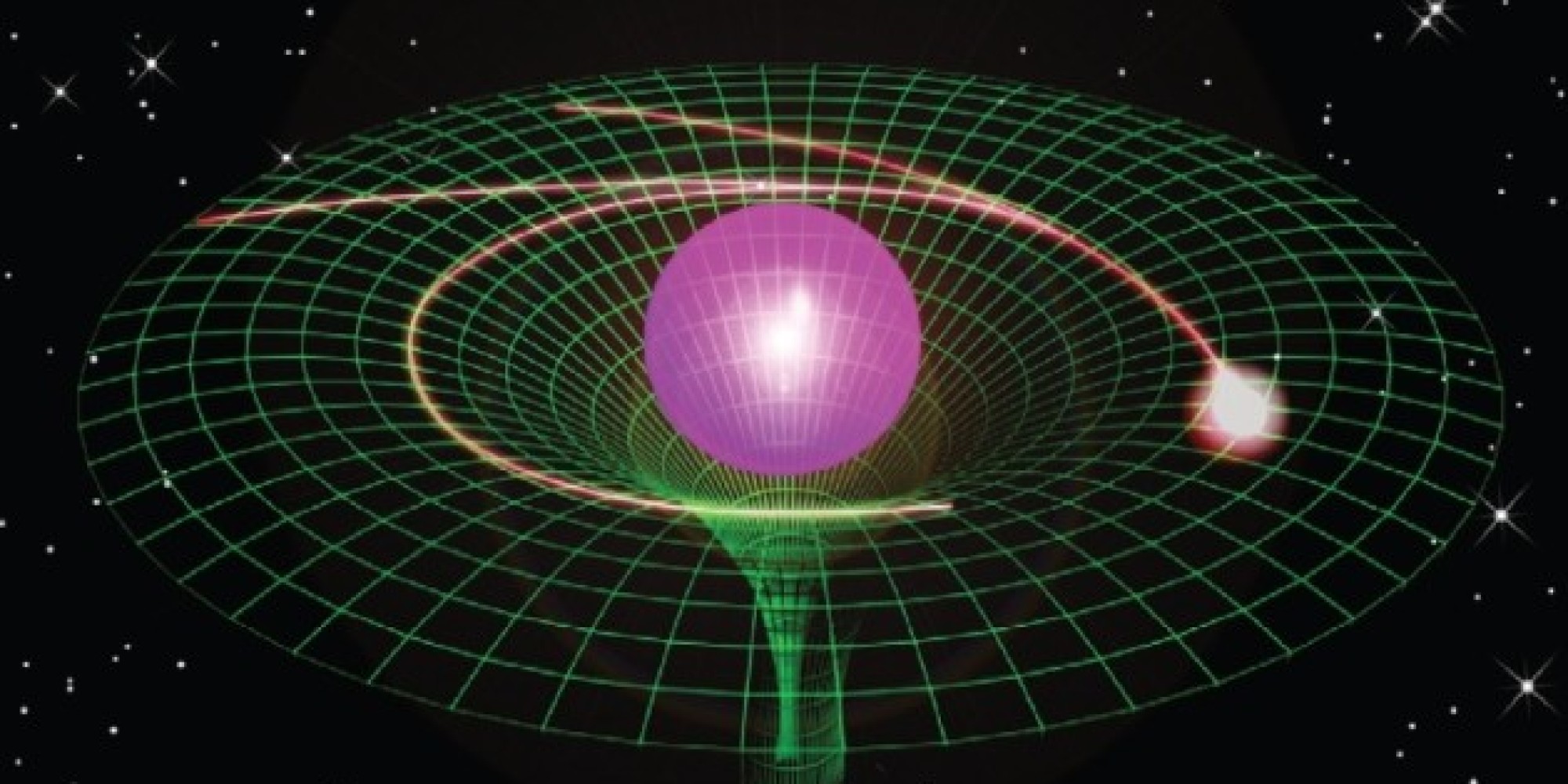

Another remarkable aspect of gamma-ray bursts is their possible role in forging heavy elements through nucleosynthesis. Collapsing stars like the one behind the BOAT may be more than destructive forces; some researchers propose that they are also creators, forging elements heavier than iron through rapid neutron capture, known as the r-process.
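The bookkeeping of rapid neutron capture is simple even if the astrophysics is not: each neutron capture raises the mass number A by one, and each beta-minus decay turns a neutron into a proton, raising the atomic number Z by one while leaving A unchanged. This toy sketch counts the steps needed to build a few heavy nuclei from an iron-56 seed; the iron seed is an illustrative assumption, and the model ignores the real mix of seed nuclei, the detailed path through neutron-rich isotopes, and fission.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Nuclide:
    name: str
    z: int  # atomic number (protons)
    a: int  # mass number (protons + neutrons)


def build_steps(seed: Nuclide, target: Nuclide) -> tuple[int, int]:
    """Return (neutron captures, beta-minus decays) needed to turn seed into target."""
    captures = target.a - seed.a  # each capture: A -> A + 1
    decays = target.z - seed.z    # each beta-minus decay: Z -> Z + 1, A unchanged
    if captures < 0 or decays < 0:
        raise ValueError("neutron capture and beta-minus decay cannot lower Z or A")
    return captures, decays


if __name__ == "__main__":
    iron = Nuclide("Fe-56", 26, 56)
    targets = (Nuclide("Ag-107", 47, 107), Nuclide("Pt-195", 78, 195), Nuclide("Au-197", 79, 197))
    for target in targets:
        n, b = build_steps(iron, target)
        print(f"{iron.name} -> {target.name}: {n} neutron captures, {b} beta decays")
```

What makes the r-process "rapid" is the ordering: captures happen so quickly that nuclei absorb many neutrons before they have time to beta-decay, which distinguishes it from the slow s-process.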

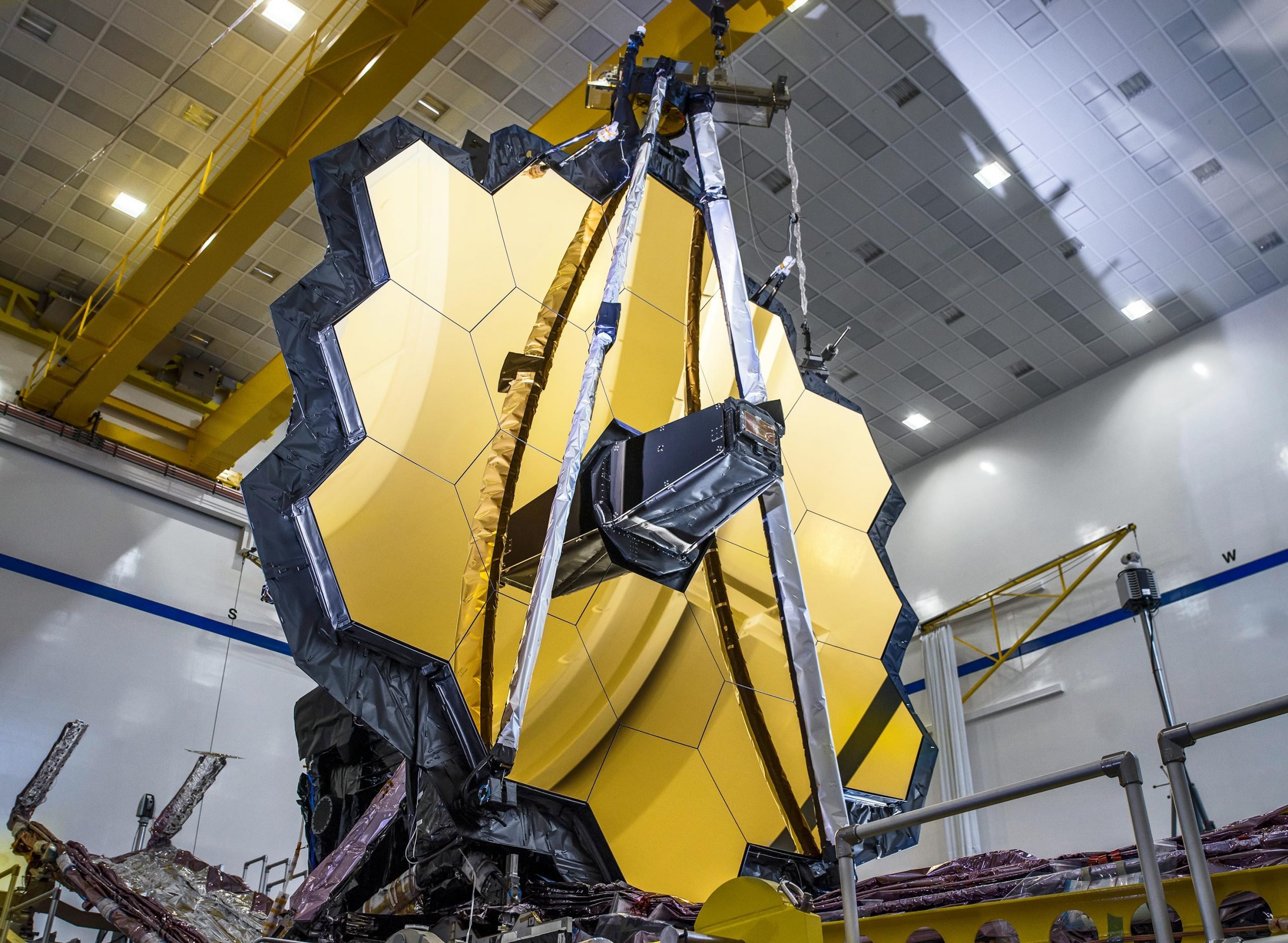

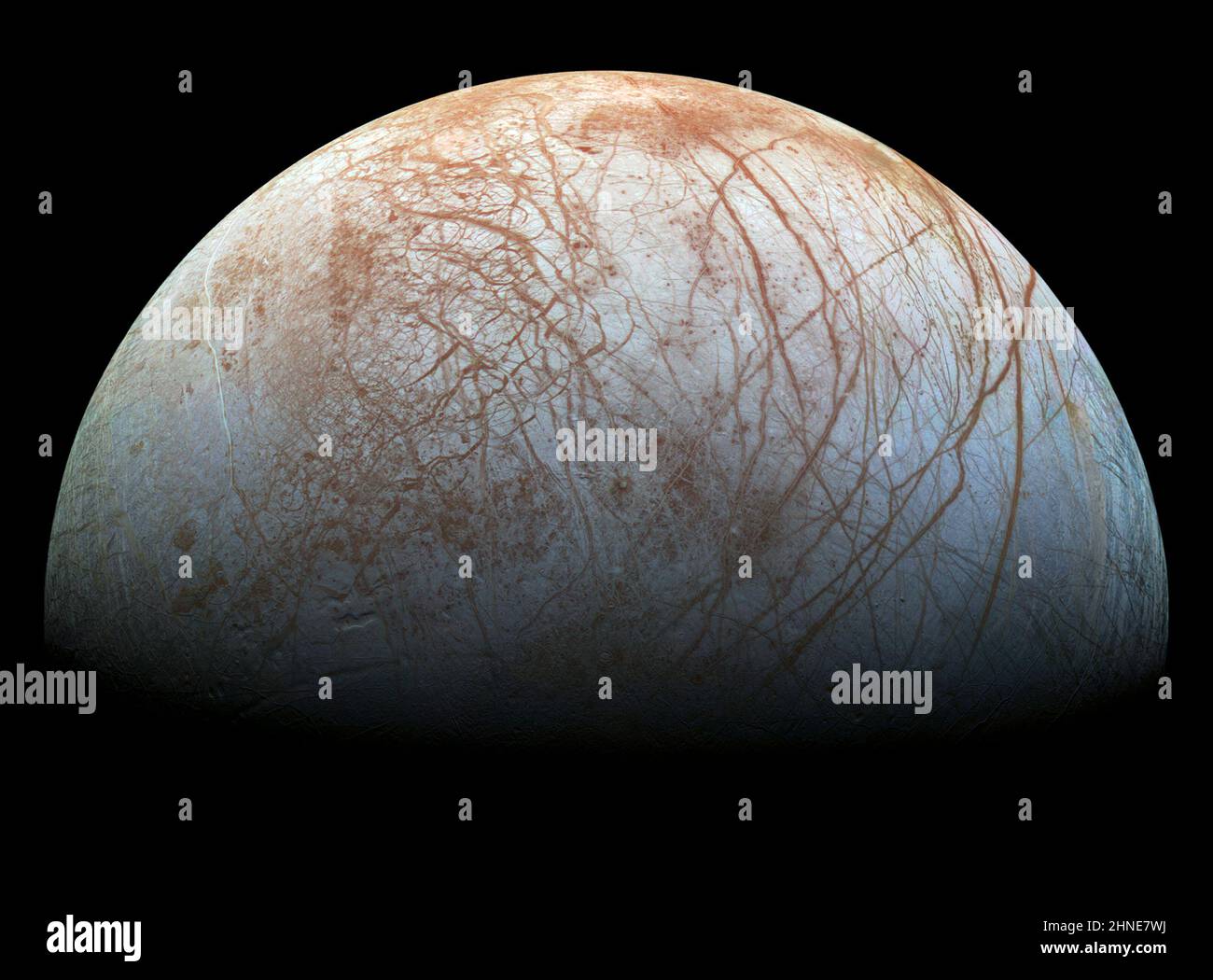

Similar processes occur in neutron star mergers, as results from the James Webb Space Telescope have demonstrated. The r-process creates highly valuable elements, among them gold. Curiously, however, spectral analysis of the BOAT’s supernova revealed no clear signature of heavy elements. This poses yet another puzzle about the nature of collapsars and their ability to enrich the universe with these elements.

It bears mentioning that many of these questions connect to my previous explorations of cosmic phenomena and their role in broader astronomical mysteries. Each topic, from microbial life to gamma-ray bursts, seems to reinforce the bigger picture of how the universe evolves, often forcing us to rethink our assumptions about how matter, and perhaps life, is seeded and recycled across space.

Conclusion: New Frontiers in Cosmology

The discovery of the BOAT is a humbling reminder that the universe still holds many secrets. Despite all the advances in telescope technology and cosmological modeling, we stand on the edge of a never-ending frontier, continually discovering more. The BOAT not only forces us to rethink our understanding of gamma-ray bursts but could also point toward fundamental gaps in our understanding of element formation, black holes, and dark matter.

As I have always believed, the beauty of cosmology lies in the constant evolution of knowledge. Just as new findings keep us rethinking our models, the BOAT ensures that we remain in awe of the heavens above — the ultimate laboratory for understanding not just our solar system but the very essence of life itself.

There’s still much work to do as we continue to analyze the data, but one thing is certain — the BOAT has left a lasting legacy that will shape our understanding for decades, if not centuries, to come.