AI Recreates Minecraft: A Groundbreaking Moment in Real-Time Interactive Models

In recent discussions of AI advancements, we’ve seen a shift from models that generate static content on request, such as images or text, to genuinely interactive experiences. The most recent example is an AI that learned from observing Minecraft gameplay and now generates a playable version of the game in real time. This leap has left me in awe, and it redefines what interactive systems can be. It’s remarkable not just for what it has achieved but also for what it signals for the future.

The Evolution from Text and Image Prompts to Interactive AI

Earlier systems, such as Google’s work with Doom, showed that a neural network could simulate a game’s frames in real time. This Minecraft AI system pushes the boundary further. Instead of offering text or image prompts, we engage with the environment directly using a keyboard and mouse. Much as in a conventional game, we can walk, explore, jump, and interact with objects, for example by placing a torch on a wall or opening and using the inventory, all in real time.

Reflecting on my experience working with machine learning models for various clients through my firm, DBGM Consulting, it’s astonishing to see how fast AI has advanced in real-time applications. The ability to interact with an AI-driven system rather than simply observe or receive an output is genuinely transformative. Predictive models like the ones we’ve previously discussed in the context of the Kardashev Scale and AI-driven technological advancement show us how quickly we’re approaching milestones that once seemed decades away.

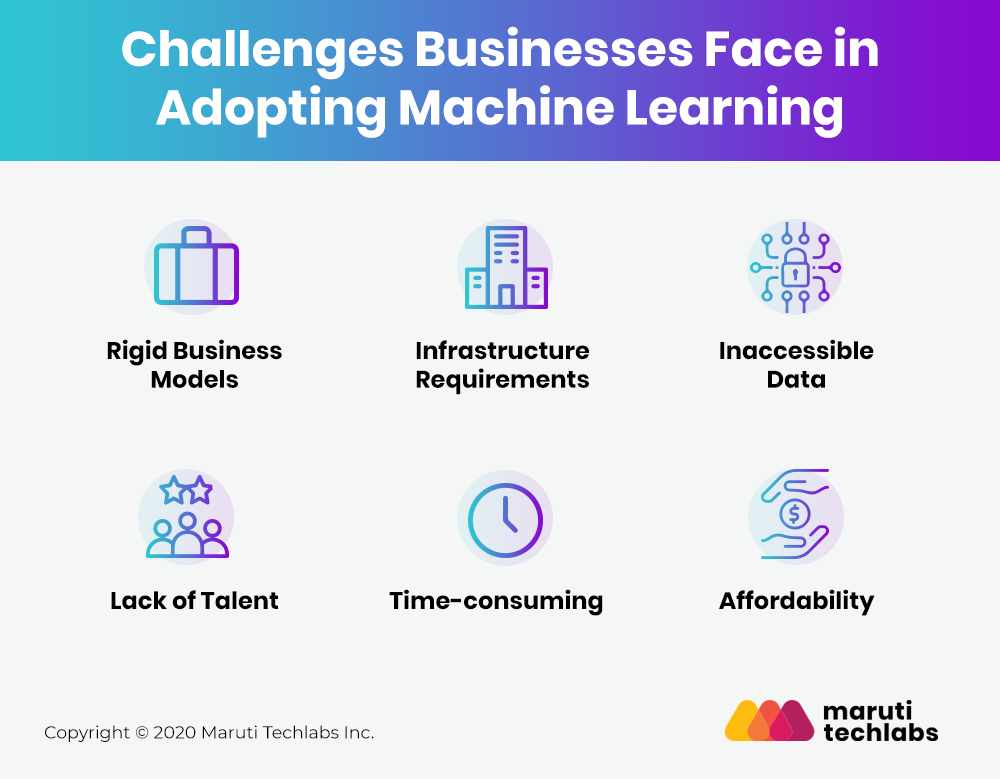

Pros and Cons: The Dual Nature of Progress

Without a doubt, this development opens new doors, but it comes with challenges. The brilliance of the system lies in its ability to generate over 20 frames per second, which is enough for a smooth, playable real-time environment. Yet the current visual fidelity leaves something to be desired: the graphics are often so pixelated that certain animals or objects (like pigs) become almost indistinguishable. The system also has a short memory span of fewer than three seconds, so the experience can devolve into something surreal and dreamlike, where object permanence doesn’t quite exist.
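A quick back-of-the-envelope sketch shows what those two numbers imply together. It assumes exactly 20 frames per second and a full three seconds of memory, which are round figures taken from the text as bounds rather than measured values. The rolling buffer is only an analogy for a bounded context, not a description of how the model stores its history.

```python
from collections import deque

FPS = 20             # the text says "over 20", so this is a lower bound
MEMORY_SECONDS = 3   # the text says "fewer than three", so this is an upper bound

frame_budget_ms = 1000 / FPS            # time available to produce one frame
context_frames = FPS * MEMORY_SECONDS   # frames still "remembered" at these rates


def still_remembered(frames_looking_away: int, context: int = context_frames) -> bool:
    """Return True if the last frame showing an object is still in the window."""
    window = deque(maxlen=context)      # oldest frames fall out automatically
    window.append("pig")                # last frame in which the pig was visible
    for _ in range(frames_looking_away):
        window.append("sky")            # frames with the pig out of view
    return "pig" in window


print(frame_budget_ms, context_frames)
print(still_remembered(59), still_remembered(60))
```

Under these assumptions each frame has a budget of 50 ms, and anything that has been out of view for about 60 frames no longer influences what is drawn next, which is one way to read the dreamlike loss of object permanence.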

It is this strange juxtaposition of excellence and limitation that makes this a “running dream.” The AI’s response time reflects vast progress in processing speed, while its short memory remains an obstacle that still needs to be addressed. After all, Artificial Intelligence is still an evolving field, and much as GPT-2 was the precursor to the more powerful ChatGPT, this Minecraft model represents one of many foundational steps in interactive AI technology.

What’s Next? Scaling and Specialized Hardware

Impressively, this system runs on proprietary hardware, which has intrigued many experts in the field. As the technology evolves, we anticipate two key areas of growth: first, scaling up today’s “half-billion parameter” models, and second, more refined hardware systems, possibly even competing with heavyweights like NVIDIA. I already see huge potential for this kind of interactive, dynamic AI system, not just in gaming but in other fields like real-time 3D environments for learning, AI-driven simulations for autonomous planning, and perhaps even collaborative digital workspaces.
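For a sense of scale, the sketch below estimates how much memory the weights of a half-billion-parameter model would occupy. The numeric precision is not stated anywhere above, so the 16-bit and 32-bit figures are assumptions, and `weight_memory_gb` is a hypothetical helper. The estimate covers weights only and ignores activations and any cached context.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


PARAMS = 0.5e9  # "half-billion parameter" figure from the text

fp16_gb = weight_memory_gb(PARAMS, 2)   # assuming 16-bit weights
fp32_gb = weight_memory_gb(PARAMS, 4)   # assuming 32-bit weights
print(fp16_gb, fp32_gb)
```

At roughly 1 to 2 GB of weights, a model of this size is small by current standards, which is part of why scaling up looks like an obvious next step.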

As an AI consultant and someone deeply invested in the future of interactive technology, I believe this AI development will pave the way for industries beyond computer gaming, revolutionizing them in the process. Imagine fully interactive AI for autonomous robots, predictive simulations in scientific research, or even powerful AI avatar-driven systems for education. We are getting closer to a seamless integration between AI and user-interaction environments, where the boundaries between what’s virtual and what’s real will fade even further.

Conclusion: A Small Step Leading to Major Shifts in AI

In the end, this new AI achievement, though far from perfect, is a glimpse into the near future of our relationship with technology. Much as we’ve seen with the rise of quantum computing and its impact on Artificial Intelligence, we are witnessing the early stages of a technological revolution that is bound to reshape various fields. These developments aren’t just incremental; they are paradigm-shifting, and they remind us that we’re on the cusp of a powerful new era in the way we interact with both digital and real-world systems.

If you are someone who’s fascinated by the combination of machine learning and real-world applications, I highly encourage you to explore these developments for yourself and stay tuned to what’s next in the ever-accelerating evolution of AI technology.
