The Impact of Quantum Computing on Artificial Intelligence and Machine Learning

As someone deeply involved in both Artificial Intelligence (AI) and Machine Learning (ML), I’ve spent a large portion of my career pondering the coming revolution in computing: quantum computing. This new paradigm promises to speed up certain kinds of computation far beyond what classical systems can achieve. By exploiting quantum mechanics, quantum computing may solve problems that have long been deemed intractable because of their complexity and scale. More importantly, when applied to AI and ML, the implications could be astonishing and truly transformative.

What is Quantum Computing?

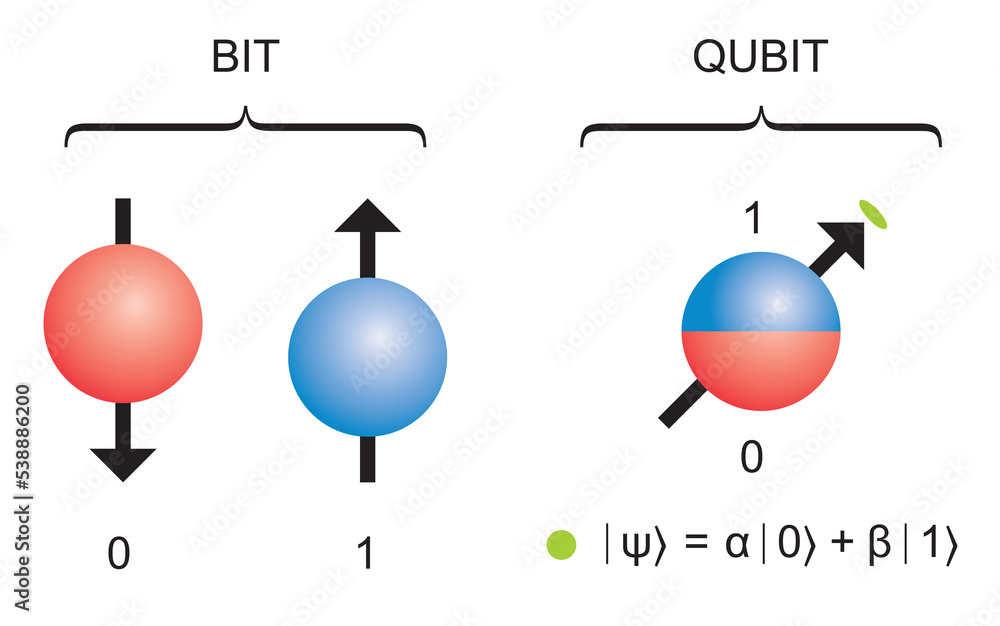

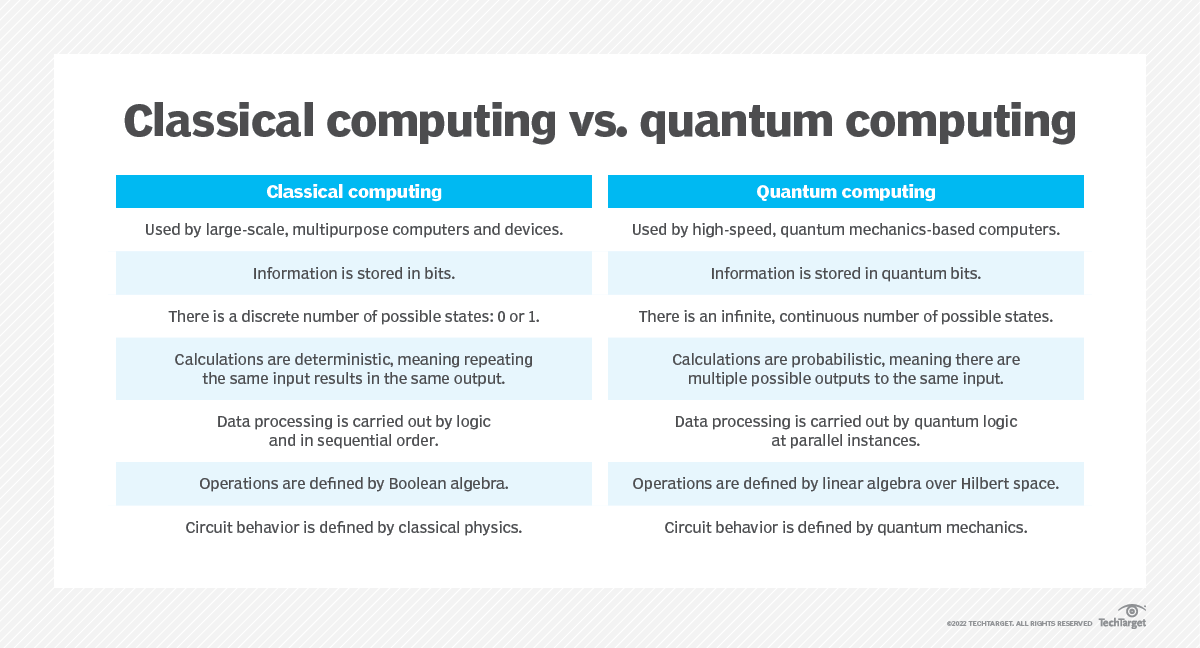

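At its core, quantum computing uses three principles of quantum mechanics (superposition, entanglement, and interference) to perform computations. Unlike classical computers, which process information as binary bits (0s and 1s), quantum computers use qubits. Thanks to superposition, a qubit can be in a combination of 0 and 1 at the same time, described by two complex amplitudes, and a register of n qubits is described by 2^n amplitudes at once. A measurement still returns a single string of 0s and 1s, so the real power of a quantum algorithm comes from using entanglement and interference to amplify the amplitudes of correct answers and cancel those of wrong ones. This is what opens the door to massive parallelism for certain computations.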
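To make superposition and interference concrete, here is a minimal sketch that simulates a single qubit classically in plain Python. The tuple-of-amplitudes representation and the helper names `hadamard` and `probabilities` are illustrative choices of ours, not the API of any quantum SDK.

```python
import math

# A qubit state is a pair of complex amplitudes (alpha, beta) for |0> and |1>,
# normalized so that |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)

def hadamard(state):
    """Apply the Hadamard gate, which maps |0> to an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))

def probabilities(state):
    """Born rule: measurement probabilities are squared amplitude magnitudes."""
    return [abs(amp) ** 2 for amp in state]

plus = hadamard(ZERO)
print(probabilities(plus))   # approximately [0.5, 0.5]: an equal superposition

# Applying H again: the two contributions to |1> cancel (destructive interference).
back = hadamard(plus)
print(probabilities(back))   # approximately [1.0, 0.0]

# Describing an n-qubit register classically takes 2**n amplitudes.
for n in (1, 10, 50):
    print(n, "qubits ->", 2 ** n, "amplitudes")
```

Applying the Hadamard gate twice returns the qubit to 0 with certainty, because the two paths leading to 1 cancel. That cancellation, rather than simply holding many values at once, is what quantum algorithms exploit. The last loop also hints at why classical simulation breaks down: 50 qubits already need about 10^15 amplitudes.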

To put this into context, imagine that in the near future quantum computers could tackle optimization problems, drug discovery, and cryptography tasks in ways that traditional computers, even supercomputers, cannot. Just last year, companies like IBM, Google, and Microsoft made significant strides toward practical quantum computers that could be deployed commercially in fields such as AI and ML (for example, the IBM Q system and Google’s Sycamore processor).

Quantum Computing’s Role in Artificial Intelligence

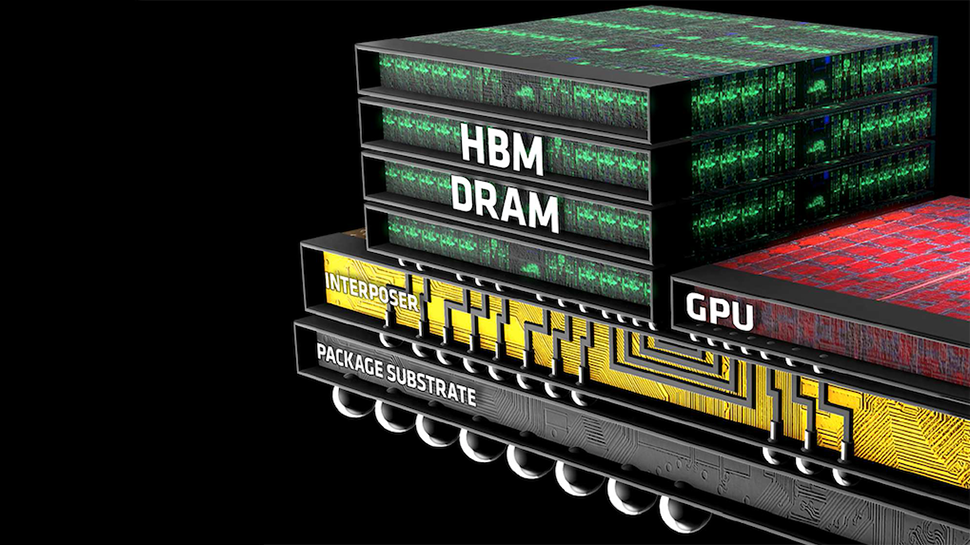

In recent years, AI has thrived thanks to advances in processing power, cloud computing, and GPUs, which make it possible to train machine learning models on vast amounts of data. However, classical resources have inherent limitations: training phases are time-consuming, costs are high, and energy efficiency is poor. Quantum computers may offer an answer by substantially reducing, for some problems, the time it takes to train AI models and process large datasets.

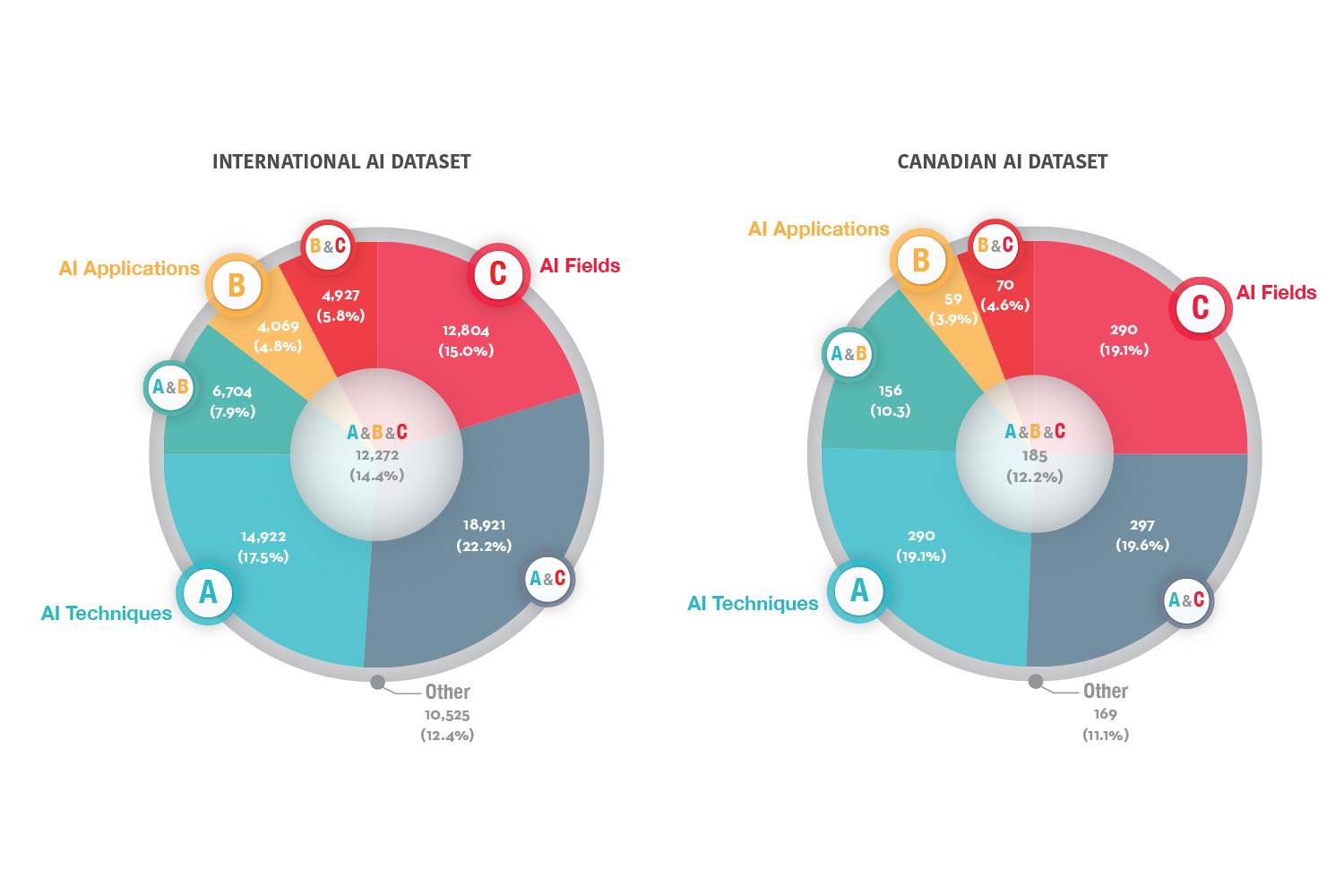

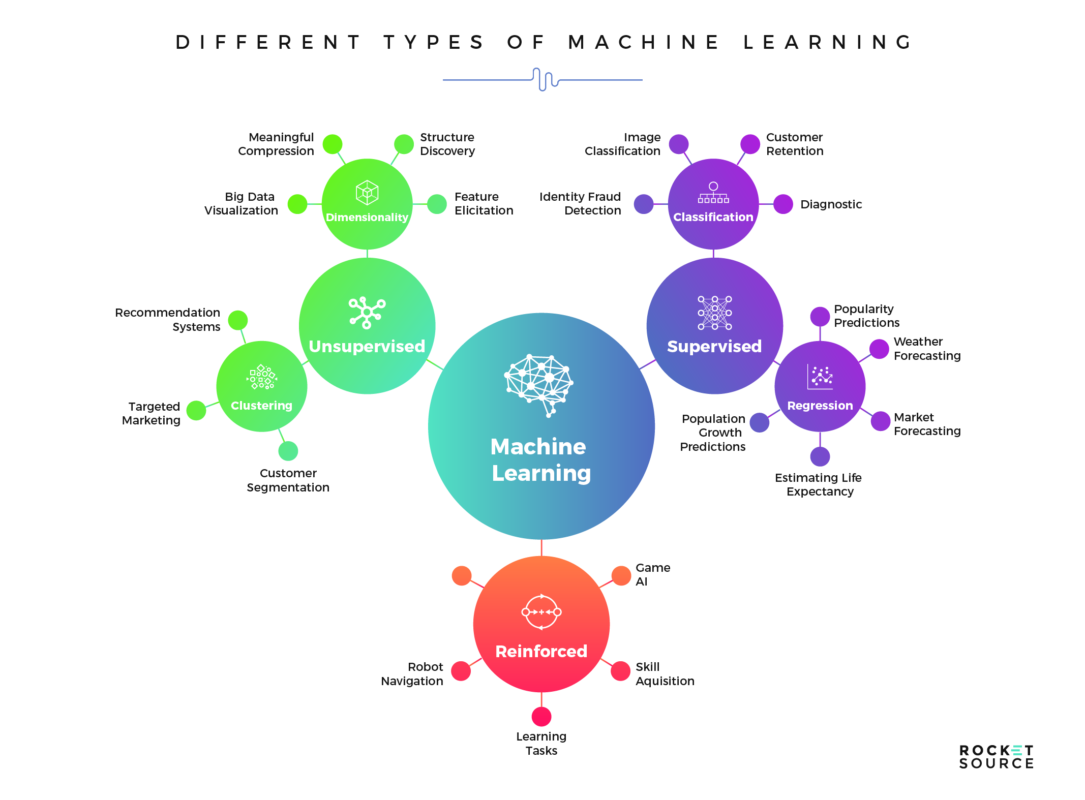

A 2023 article published by IBM Research, which I follow closely, emphasized quantum computing’s potential to enhance tasks like solving complex combinatorial optimization problems, which frequently appear in machine learning contexts such as clustering and classification. The disruptive force of quantum computing on AI can be broken down into several game-changing aspects:

- Faster Training: Quantum systems could reduce the training times of neural networks by exploiting quantum-enhanced optimization techniques. While conducting AI/ML workshops, my team and I have seen firsthand that classical models often take days or even weeks to train on certain complex datasets. Quantum computing is expected to shorten this significantly.

- Improved Model Accuracy: Quantum algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) may be able to search for optimal solutions more thoroughly than classical methods, which could ultimately improve the accuracy of machine learning models.

- Reduction in Computational Cost: Many machine learning applications, from natural language processing to pattern recognition, are computationally expensive. Adopting quantum AI methodologies could alleviate the energy demands and costs associated with running large-scale AI models.
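To make the QAOA bullet concrete, the sketch below simulates depth-1 QAOA classically on a toy MaxCut problem. Everything here is an illustrative assumption rather than a production method: the 4-node ring graph, the pure-Python statevector simulation, and the coarse grid search standing in for the classical optimizer that real hybrid workflows use. The helper names (`cut_value`, `apply_mixer`, `qaoa_expectation`) are hypothetical.

```python
import cmath
import math

# Hypothetical toy problem: MaxCut on a 4-node ring (edges form a cycle).
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
N = 4

def cut_value(z):
    """Number of edges cut by bitstring z (bit j gives the side of node j)."""
    return sum(1 for i, j in EDGES if ((z >> i) & 1) != ((z >> j) & 1))

def apply_mixer(state, beta):
    """Apply exp(-i*beta*X) to every qubit (the standard QAOA mixer)."""
    c, s = math.cos(beta), -1j * math.sin(beta)
    for q in range(N):
        bit = 1 << q
        new = state[:]
        for z in range(len(state)):
            if not z & bit:
                # Mix each pair of amplitudes that differ only in qubit q.
                a0, a1 = state[z], state[z | bit]
                new[z] = c * a0 + s * a1
                new[z | bit] = s * a0 + c * a1
        state = new
    return state

def qaoa_expectation(gamma, beta):
    """Expected cut value of the depth-1 QAOA state for angles (gamma, beta)."""
    dim = 2 ** N
    # Start in the uniform superposition |+>^N.
    state = [1 / math.sqrt(dim) + 0j] * dim
    # Cost layer: phase each basis state by exp(-i*gamma*C(z)).
    state = [amp * cmath.exp(-1j * gamma * cut_value(z)) for z, amp in enumerate(state)]
    state = apply_mixer(state, beta)
    return sum(abs(amp) ** 2 * cut_value(z) for z, amp in enumerate(state))

# Classical outer loop: a coarse grid search over the two angles.
grid = [k * math.pi / 20 for k in range(21)]
best = max((qaoa_expectation(g, b), g, b) for g in grid for b in grid)
print("best expected cut: %.3f (gamma=%.3f, beta=%.3f)" % best)
print("true MaxCut:", max(cut_value(z) for z in range(2 ** N)))
```

A random assignment cuts 2 of the 4 edges on average. For depth-1 QAOA on a ring the expected cut cannot exceed 3, so the best grid value should land between 2 and 3, below the true optimum of 4. In practice one samples bitstrings from the optimized state and keeps the best one found.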

Over the years, I have gained experience in both AI and quantum theory, often exploring their intersection during my tenure at DBGM Consulting. It’s particularly fascinating to consider how quantum algorithms might shape the next generation of machine learning models, helping to find good solutions to problems traditionally classified as NP-hard or NP-complete.

Quantum Algorithms for Machine Learning

If you’re familiar with neural networks and optimization algorithms, quantum computing’s possibilities in this area should be thrilling. Typical machine learning problems like classification, clustering, and regression require linear algebra operations on large matrices. Fortunately, linear algebra is native to quantum computing: a quantum state is a vector of amplitudes and every quantum gate is a unitary matrix, so certain matrix computations can, in principle, be performed very efficiently.
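A common illustration of this point is amplitude encoding, in which a vector of 2^n numbers is stored in the amplitudes of just n qubits. The sketch below uses a hypothetical helper, `amplitude_encode`, and made-up feature values; it performs only the classical normalization step. Actually preparing such a state on hardware can be expensive and may erase the theoretical speedup.

```python
import math

def amplitude_encode(x):
    """Hypothetical helper: turn a real feature vector into normalized amplitudes.

    The vector is zero-padded to the next power of two, because n qubits
    carry exactly 2**n amplitudes. Returns (amplitudes, number_of_qubits).
    """
    size = 1
    while size < len(x):
        size *= 2
    padded = [float(v) for v in x] + [0.0] * (size - len(x))
    norm = math.sqrt(sum(v * v for v in padded))
    if norm == 0.0:
        raise ValueError("the zero vector cannot be a quantum state")
    n_qubits = size.bit_length() - 1  # log2(size), exact for powers of two
    return [v / norm for v in padded], n_qubits

features = [3.0, 1.0, 2.0, 1.0, 0.5, 0.0]   # six made-up feature values
amps, n = amplitude_encode(features)
print(n, "qubits hold", len(amps), "amplitudes")   # 3 qubits hold 8 amplitudes
print(round(sum(a * a for a in amps), 12))          # 1.0 (a valid quantum state)
```

The compression is exponential (a million features would fit in 20 qubits), which is where many of the claimed speedups for quantum linear algebra originate.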

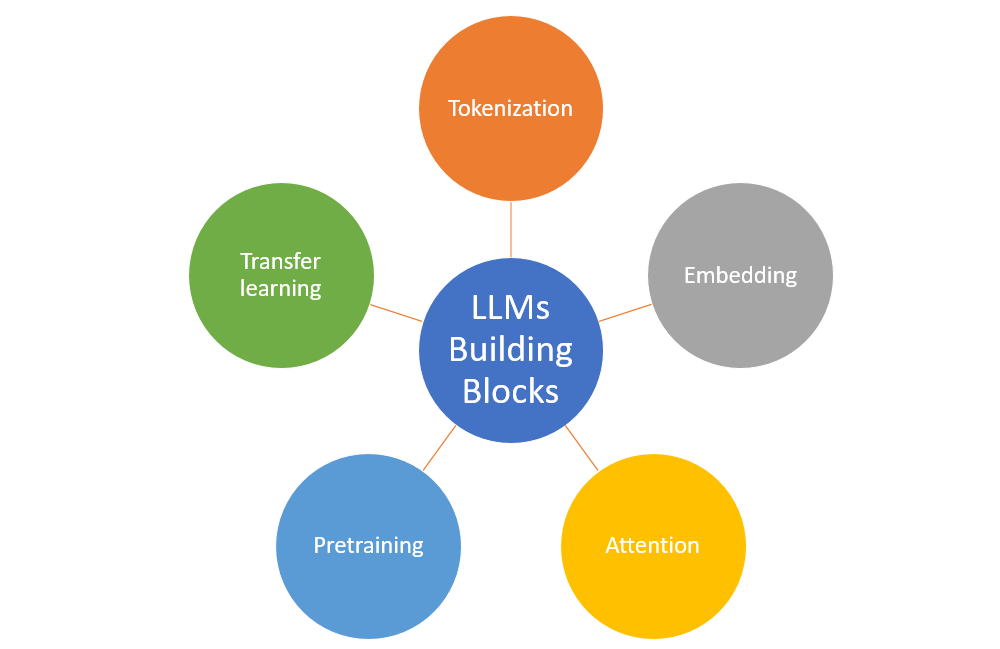

Quantum algorithms often proposed for machine learning include:

| Algorithm | Use Case |
|---|---|
| Quantum Principal Component Analysis (QPCA) | Finds the principal components of large datasets, a critical step in dimensionality reduction; the speedup assumes the data can be loaded efficiently as quantum states. |
| Harrow-Hassidim-Lloyd (HHL) Algorithm | Solves sparse, well-conditioned systems of linear equations, in principle exponentially faster than classical algorithms; many learning models reduce to such systems. |
| Quantum Support Vector Machines (QSVM) | Performs binary classification using quantum feature maps (quantum kernels); QSVMs may prove more efficient than their classical counterparts for certain kinds of data. |
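The QSVM row is often realized with a quantum kernel: each data point is mapped to a quantum state, and the kernel value is the squared overlap between two such states. The sketch below simulates this classically under an assumption of ours, a simple angle-encoding feature map with one RY rotation per feature. Because that map creates no entanglement, it is easy to compute classically and offers no quantum advantage; it only shows the mechanics. The function names and data are hypothetical.

```python
import math

def feature_state(x):
    """Angle-encode features: qubit j is prepared as RY(x_j)|0>.

    Returns the 2**len(x) statevector; all amplitudes are real here.
    """
    state = [1.0]
    for xj in x:
        qubit = [math.cos(xj / 2), math.sin(xj / 2)]
        # Kronecker product of the register built so far with the new qubit.
        state = [a * b for a in state for b in qubit]
    return state

def quantum_kernel(x, y):
    """Fidelity kernel k(x, y) = |<phi(x)|phi(y)>|^2 (no conjugation needed for real amplitudes)."""
    overlap = sum(a * b for a, b in zip(feature_state(x), feature_state(y)))
    return overlap ** 2

def gram_matrix(data):
    """Pairwise kernel values, as an SVM with a precomputed kernel expects."""
    return [[quantum_kernel(x, y) for y in data] for x in data]

data = [[0.1, 0.4], [0.2, 0.5], [2.8, 3.0]]   # made-up two-feature points
for row in gram_matrix(data):
    print(["%.3f" % v for v in row])
```

Nearby points get kernel values close to 1 and distant points values close to 0. A Gram matrix like this can be passed to a classical SVM that accepts a precomputed kernel, such as scikit-learn’s `SVC(kernel="precomputed")`; the quantum processor would only be responsible for estimating the kernel entries.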

Quantum computing has particular implications for computationally intensive tasks such as training deep neural networks. In a recent workshop my firm led, we examined how quantum-enhanced hybrid models could speed up hyperparameter tuning and feature extraction, steps that are vital to building efficient and highly accurate models.
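Hybrid models like these are typically trained in a loop: a quantum circuit evaluates a cost, and a classical optimizer updates the circuit’s parameters. The sketch below shows that loop on a single-qubit RY circuit simulated exactly in plain Python. The circuit, learning rate, and helper names are illustrative assumptions, not the models examined in the workshop. Gradients come from the parameter-shift rule, which yields an exact derivative from two circuit evaluations at shifted parameter values.

```python
import math

def expectation_z(theta):
    """<Z> for the one-qubit circuit RY(theta)|0>, simulated exactly.

    RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so <Z> = cos(theta).
    """
    a, b = math.cos(theta / 2), math.sin(theta / 2)
    return a * a - b * b

def parameter_shift_grad(f, theta):
    """Parameter-shift rule for gates of the form exp(-i*theta*P/2)."""
    shift = math.pi / 2
    return (f(theta + shift) - f(theta - shift)) / 2

# Hybrid loop: the "quantum" part evaluates the cost, a classical rule updates theta.
theta, lr = 0.3, 0.4
for step in range(50):
    theta -= lr * parameter_shift_grad(expectation_z, theta)
print("theta = %.4f, <Z> = %.4f" % (theta, expectation_z(theta)))
```

Minimizing <Z> = cos(theta) drives theta toward pi and <Z> toward -1. On real hardware each call to `expectation_z` would be replaced by repeated circuit executions, so the gradient estimates would carry sampling noise.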

Furthermore, none of this is far-future speculation. Just last month, research published in PNAS (Proceedings of the National Academy of Sciences) demonstrated the experimental application of quantum computing in fields like protein folding and simulations of molecular dynamics—areas where machine learning and artificial intelligence already play a crucial role.

Challenges and Cautions

It’s important to acknowledge that while quantum computing holds incredible potential for improving AI, we are still in the early stages of delivering practical, scalable systems. There’s significant hype around quantum advantage (sometimes called quantum supremacy), but the industry faces several key challenges:

- Decoherence: Qubits are fragile and prone to errors caused by disturbances from environmental noise.

- Algorithm Development: Developing robust quantum algorithms for practical AI/ML tasks remains difficult.

- Engineering Limitations: Current quantum hardware can handle only a limited number of qubits, and scaling up quantum systems is challenging in terms of both energy and cost.
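The decoherence challenge can be quantified with the textbook pure-dephasing model, in which a qubit’s superposition decays as exp(-t/T2). The T2 and layer-time values below are illustrative assumptions, not figures for any real device, and the model ignores energy relaxation (T1) and gate errors; it only shows why deep circuits are hard on today’s hardware.

```python
import math

# Illustrative assumptions only, not specifications of any particular device.
T2_US = 100.0        # dephasing time T2, in microseconds
GATE_TIME_US = 0.05  # duration of one circuit layer, in microseconds

def coherence_remaining(depth, t2=T2_US, gate_time=GATE_TIME_US):
    """Fraction of the initial off-diagonal density-matrix term left after `depth` layers.

    Pure dephasing model: rho_01(t) = rho_01(0) * exp(-t / T2).
    """
    return math.exp(-depth * gate_time / t2)

def plus_state_fidelity(depth):
    """Probability that a qubit prepared in |+> is still measured as |+>."""
    return (1 + coherence_remaining(depth)) / 2

for depth in (10, 100, 1000, 10000):
    print(depth, "layers: fidelity %.4f" % plus_state_fidelity(depth))
```

Under these assumed numbers the fidelity stays above 0.97 at 100 layers but falls to about 0.50 at 10,000 layers, essentially a coin flip, which is why error correction is central to scaling.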

As an engineer and AI enthusiast, I remain cautiously optimistic. The parallel between scaling neural networks and scaling quantum hardware is not lost on me, and I believe that as quantum systems become more robust over the coming decade, we will begin to unlock their full capabilities within machine learning domains.

Bringing it All Together

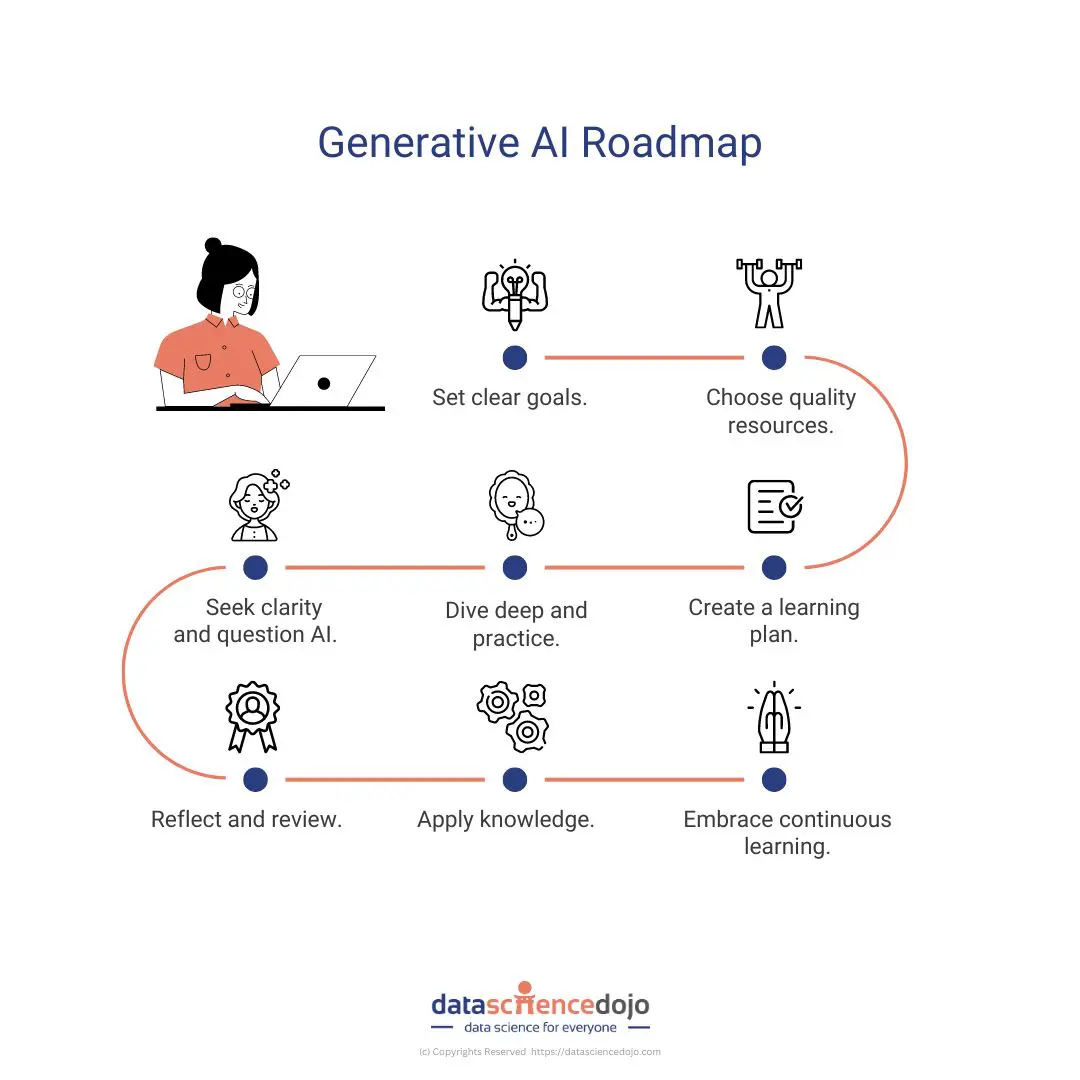

While quantum computing’s integration into artificial intelligence is a few years away from practical mainstream application, it’s a frontier I’m eagerly watching. The synergies between these fields are thrilling, bringing us closer to solving the most complex problems the world faces, from drug discovery to climate prediction, much faster and more efficiently.

In a way, quantum AI represents one of the ultimate “leaps” in tech, underscoring a theme discussed in my previous article on scientific discoveries of November 2024. There is no doubt in my mind that whoever masters this fusion will dominate sectors ranging from computing to financial markets.

I’ve long been an advocate of applying the latest technological innovations to practical domains, whether it’s cloud infrastructure at DBGM Consulting or neural networks, as highlighted in previous articles about AI search models. With quantum computing, we are once again standing on the shoulders of giants, ready to accelerate yet another wave of innovation.

It’s a field not without challenges, but if history teaches us anything, it is that new technological paradigms, whether in AI, physics, or automotive design, are what drive humanity forward.
