Deep Diving into Supervised Learning: The Core of Machine Learning Evolution

Machine Learning (ML) has rapidly evolved from a niche area of computer science into a cornerstone of technological advancement, fundamentally changing how we develop, interact with, and think about artificial intelligence (AI). Within this expansive field, supervised learning stands out as a critical methodology driving the success and sophistication of large language models (LLMs) and many other AI applications. Drawing on my background in AI and machine learning from my time at Harvard University and my work at DBGM Consulting, Inc., I'll delve into the intricacies of supervised learning's current landscape and its future trajectory.

Understanding the Core: What is Supervised Learning?

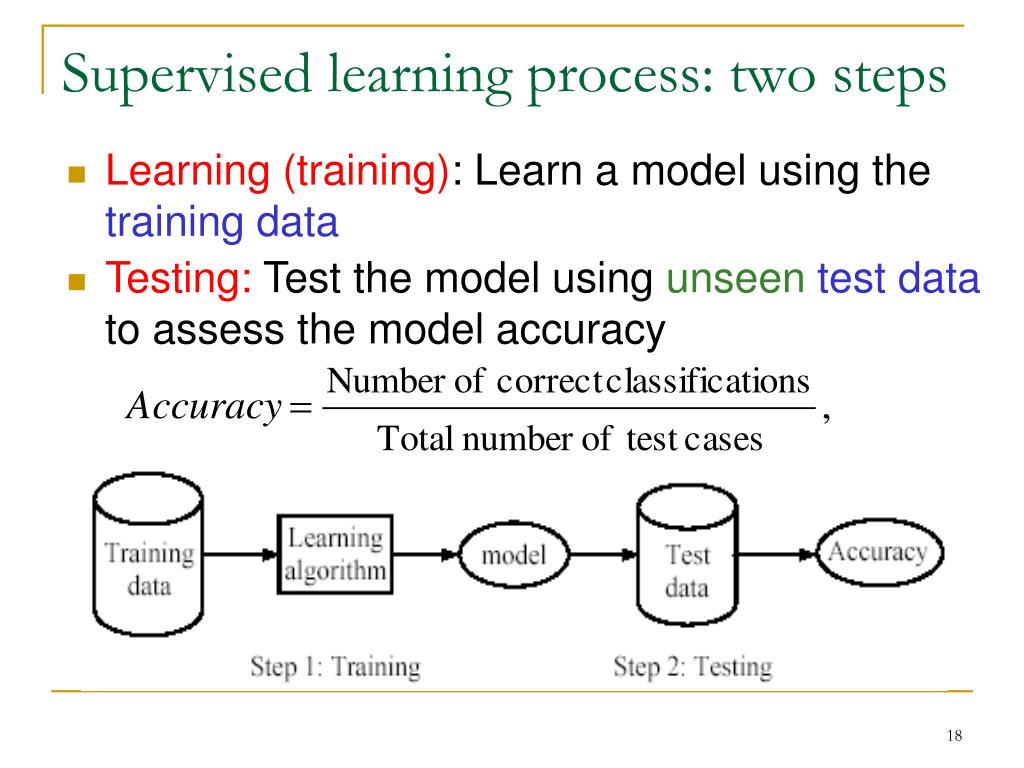

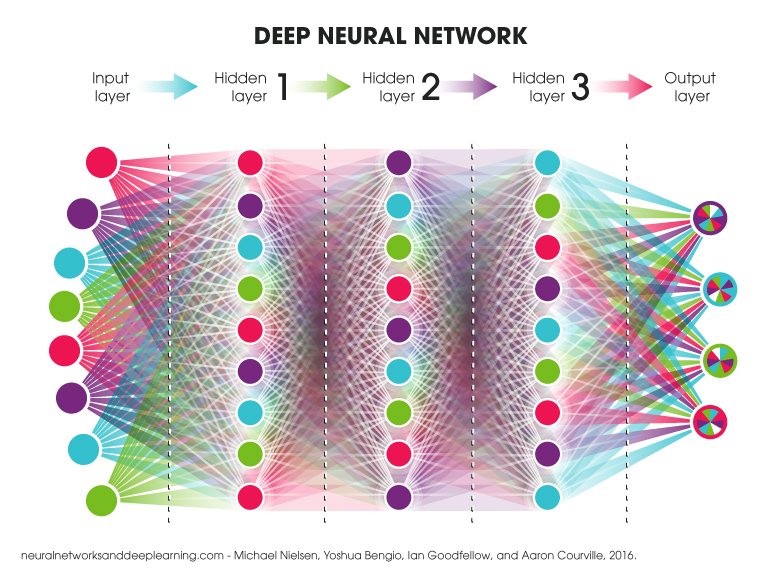

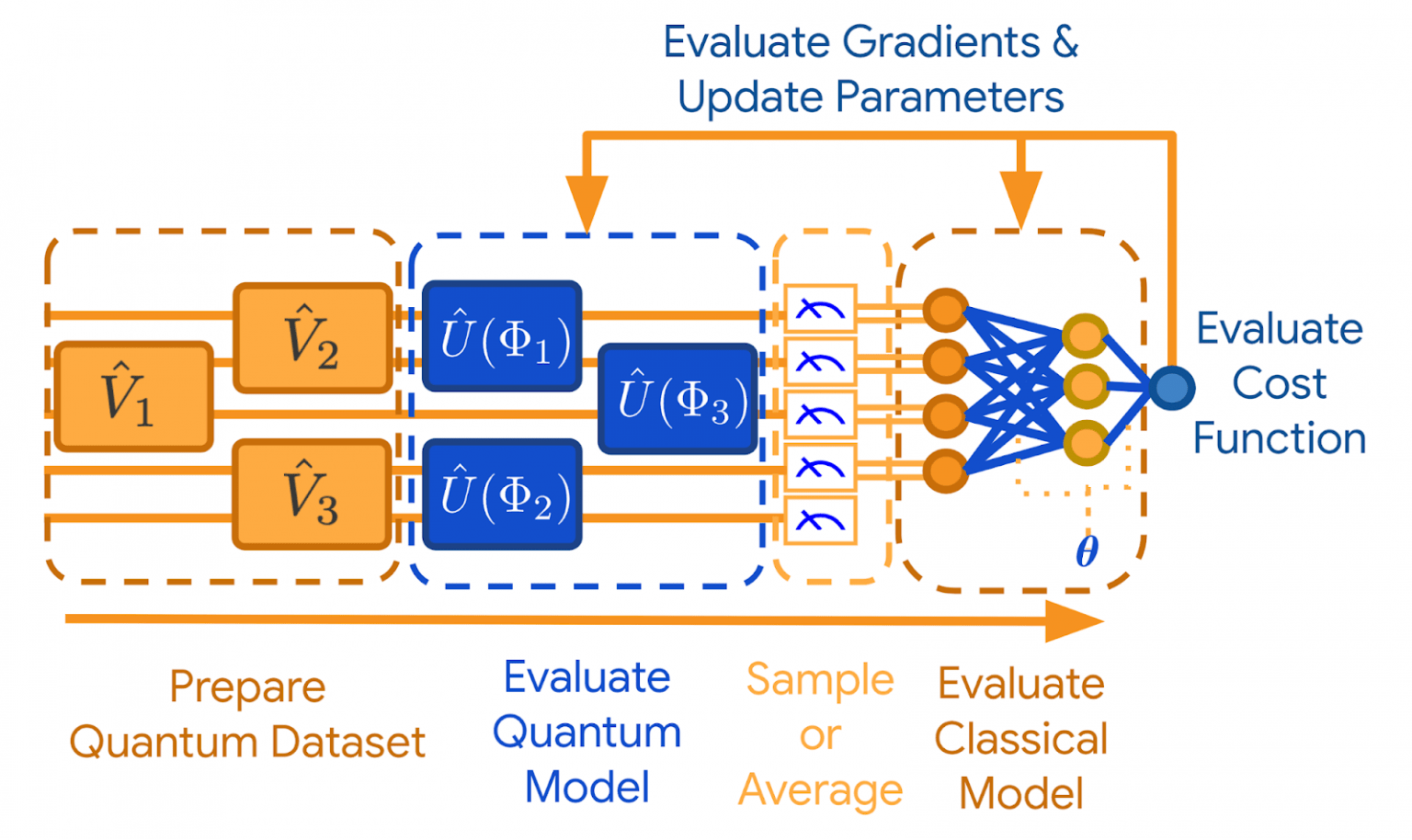

At its simplest, supervised learning is a type of machine learning where an algorithm learns to map inputs to desired outputs based on example input-output pairs. This learning process involves feeding a large amount of labeled training data to the model, where each example is a pair consisting of an input object (typically a vector) and a desired output value (the supervisory signal).
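To make the idea of learning from input-output pairs concrete, here is a minimal sketch in Python. It assumes a binary label and uses logistic regression trained with stochastic gradient descent, which is just one of many possible supervised learners. The toy dataset and the names `train_logistic_regression` and `predict` are hypothetical, chosen for illustration only.

```python
import math
from typing import List, Tuple

# A labeled example: (input feature vector, desired output label in {0, 1}).
Example = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(
    data: List[Example], lr: float = 0.1, epochs: int = 500
) -> Tuple[List[float], float]:
    """Fit weights w and bias b so that sigmoid(w . x + b) approximates y."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            # For the log loss, the gradient w.r.t. the logit is (p - y):
            # the gap between the prediction and the supervisory signal.
            err = p - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    # Threshold the predicted probability at 0.5.
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)


# Hypothetical toy pairs: the label is 1 when the two features sum to more than 1.
train = [
    ([0.0, 0.0], 0), ([0.2, 0.3], 0), ([0.4, 0.1], 0),
    ([1.0, 1.0], 1), ([0.9, 0.8], 1), ([0.7, 1.2], 1),
]
w, b = train_logistic_regression(train)
print([predict(w, b, x) for x, _ in train])
```

Each update nudges the weights in proportion to the prediction error, which is exactly where the labeled output acts as the "supervisor."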

The model's goal is to learn a mapping function well enough that, when it encounters new, unseen inputs, it can accurately predict the corresponding outputs. This ability to generalize forms the bedrock of many applications we see today, from spam detection in email to the voice recognition systems used by virtual assistants.
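Generalization is usually checked by holding out labeled examples the model never sees during training and measuring accuracy on them. The following sketch assumes a simple nearest-centroid classifier and synthetic two-cluster data; the class names, the 25% test fraction, and the helper functions are all hypothetical choices for illustration.

```python
import random
from typing import Dict, List, Tuple

# A labeled example: (feature vector, class label).
Example = Tuple[List[float], str]


def fit_centroids(train: List[Example]) -> Dict[str, List[float]]:
    """Nearest-centroid classifier: store the mean feature vector of each label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in train:
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + xi for s, xi in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict(centroids: Dict[str, List[float]], x: List[float]) -> str:
    # Choose the label whose centroid is closest in squared Euclidean distance.
    return min(centroids, key=lambda y: sum((c - xi) ** 2 for c, xi in zip(centroids[y], x)))


def accuracy(centroids: Dict[str, List[float]], data: List[Example]) -> float:
    return sum(predict(centroids, x) == y for x, y in data) / len(data)


def train_test_split(data: List[Example], test_fraction: float = 0.25, seed: int = 0):
    # Shuffle a copy, then hold out the first test_fraction of examples as "unseen".
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


# Hypothetical synthetic data: "pos" clusters near (3, 3), "neg" near (0, 0).
rng = random.Random(42)
data = [([rng.gauss(3, 0.5), rng.gauss(3, 0.5)], "pos") for _ in range(40)]
data += [([rng.gauss(0, 0.5), rng.gauss(0, 0.5)], "neg") for _ in range(40)]

train, test = train_test_split(data)
model = fit_centroids(train)
print("held-out accuracy:", accuracy(model, test))
```

Reporting accuracy on the held-out split, rather than on the training data, is what tells us whether the learned mapping carries over to unseen inputs.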

The Significance of Supervised Learning in Advancing LLMs

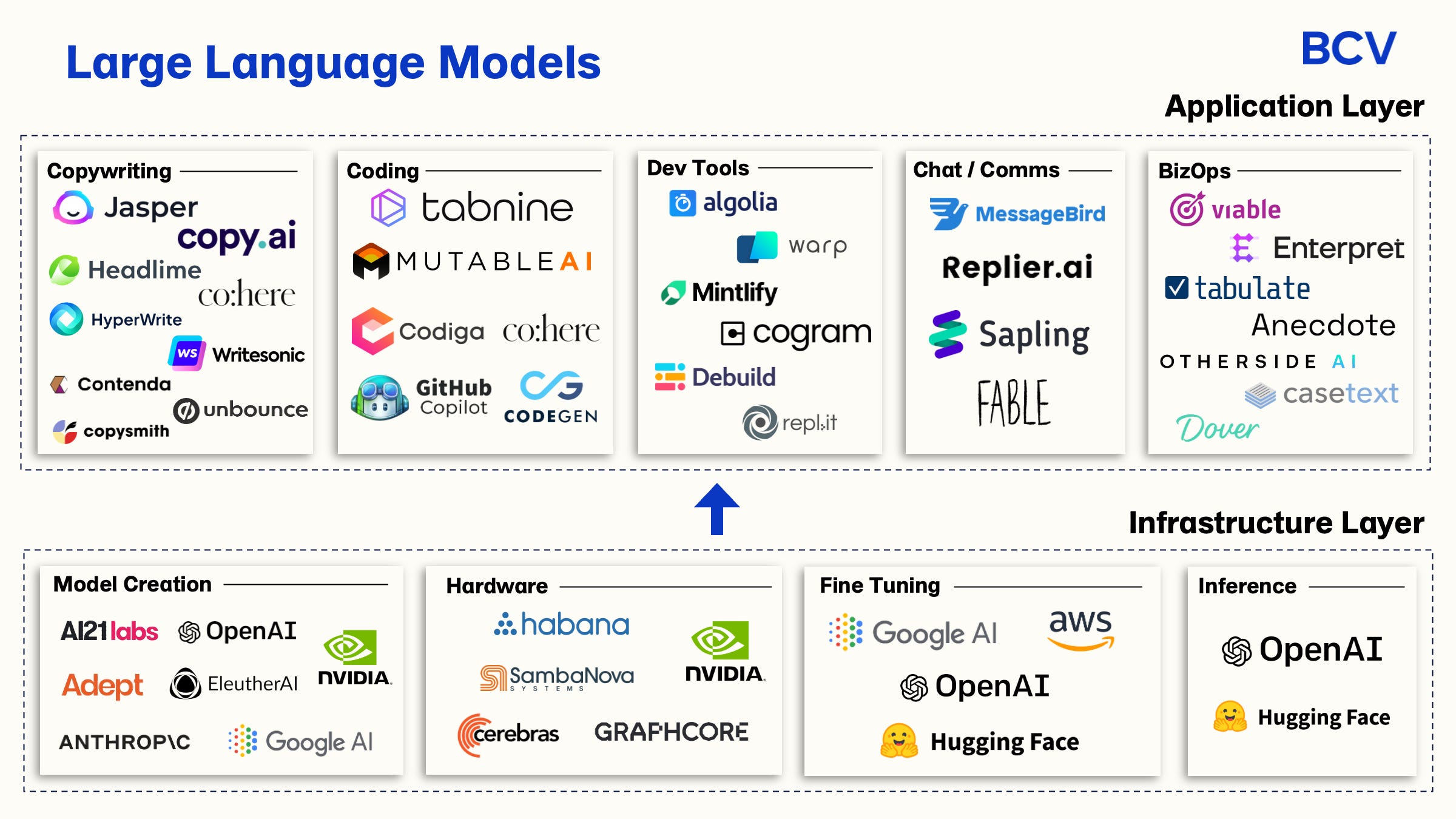

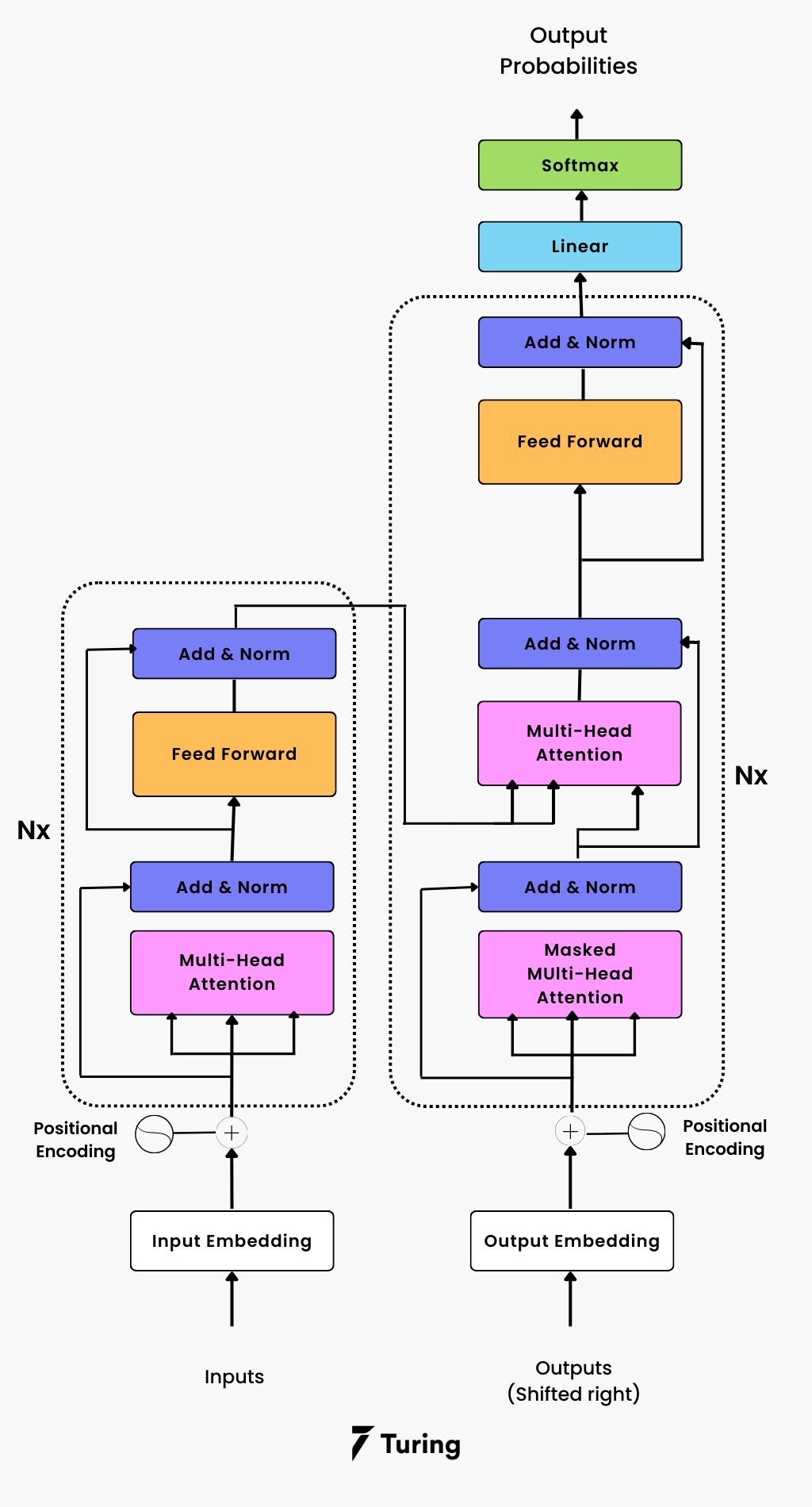

As discussed in recent articles on my blog, such as “Exploring the Mathematical Foundations of Large Language Models in AI,” supervised learning plays a pivotal role in enhancing the capabilities of LLMs. By utilizing vast amounts of labeled data—where texts are paired with suitable responses or classifications—LLMs learn to understand, generate, and engage with human language in a remarkably sophisticated manner.

This learning paradigm has not only improved the performance of LLMs but has also enabled them to tackle more complex, nuanced tasks across various domains—from creating more accurate and conversational chatbots to generating insightful, coherent long-form content.
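One common way such text pairs are used is supervised fine-tuning: each (prompt, response) pair becomes a next-token prediction example, and the loss is computed only on the response tokens. The sketch below assumes that convention. The whitespace tokenizer, the `<eos>` marker, and the `prob` callback are hypothetical stand-ins, not any particular library's API.

```python
import math
from typing import Callable, List, Optional, Tuple


def build_example(prompt: str, response: str) -> Tuple[List[str], List[Optional[str]]]:
    """Turn one (prompt, response) pair into next-token inputs and targets.

    Whitespace splitting is a toy stand-in for a real subword tokenizer.
    Positions whose target lies in the prompt get None, so they add no loss.
    """
    prompt_toks = prompt.split()
    tokens = prompt_toks + response.split() + ["<eos>"]
    inputs = tokens[:-1]
    targets: List[Optional[str]] = [
        tokens[i] if i >= len(prompt_toks) else None for i in range(1, len(tokens))
    ]
    return inputs, targets


def masked_nll(
    inputs: List[str],
    targets: List[Optional[str]],
    prob: Callable[[List[str], str], float],
) -> float:
    """Average negative log-likelihood over the supervised (response) positions only."""
    losses = [
        -math.log(prob(inputs[: i + 1], target))
        for i, target in enumerate(targets)
        if target is not None
    ]
    return sum(losses) / len(losses)


# Hypothetical "model" that assigns probability 0.25 to every next token.
def uniform_model(context: List[str], token: str) -> float:
    return 0.25


inputs, targets = build_example("Classify the sentiment: great movie", "positive")
print(targets)
print(masked_nll(inputs, targets, uniform_model))
```

Masking the prompt positions means the model is graded only on producing the labeled response, which mirrors the input-output structure of classic supervised learning.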

Leveraging Supervised Learning for Precision and Personalization

A deep understanding and skilled application of supervised learning have enabled AI developers to fine-tune LLMs with unprecedented precision and personalization. By training models on domain-specific datasets, developers can create LLMs that not only grasp general language patterns but also handle industry-specific terminology and context. This tailored approach gives LLMs the versatility to adapt to diverse sectors and take on specialized roles that were once considered beyond the reach of algorithmic solutions.

The Future Direction of Supervised Learning and LLMs

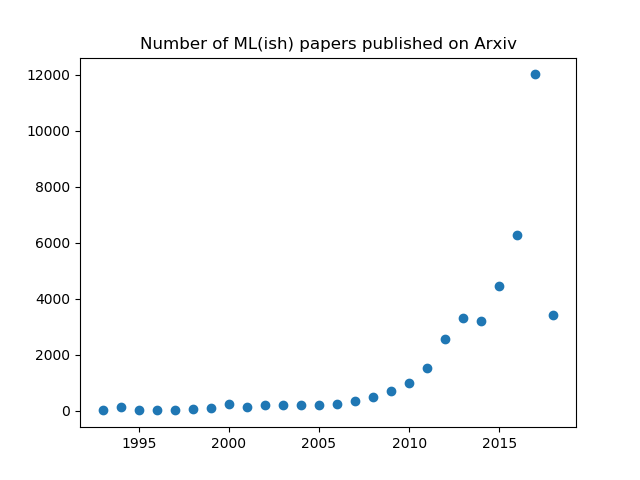

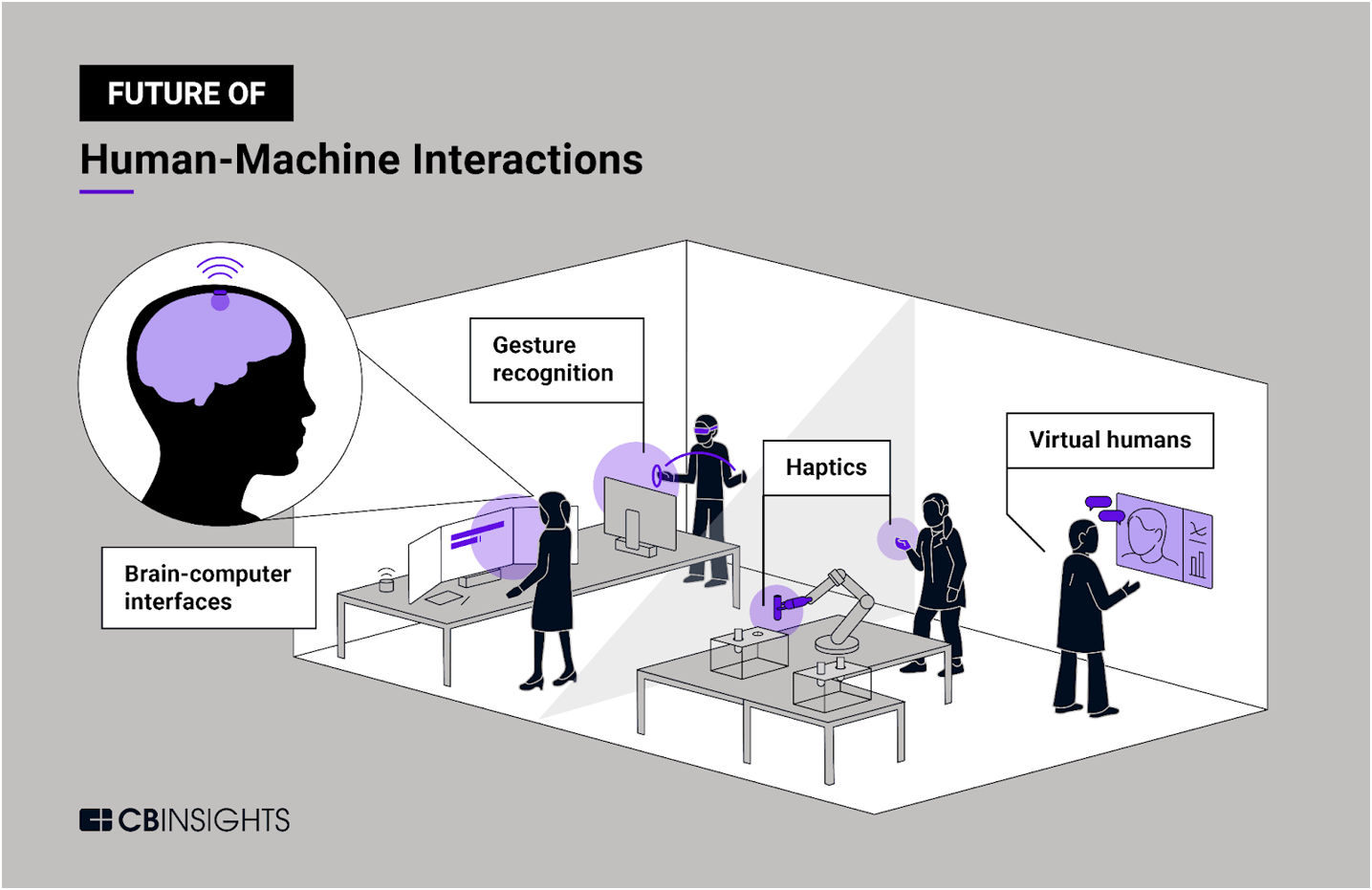

The journey of supervised learning and its application in LLMs is far from reaching its zenith. The next wave of advancements will likely focus on overcoming current limitations, such as the need for vast amounts of labeled data and the challenge of model interpretability. Innovations in semi-supervised and unsupervised learning, along with breakthroughs in data synthesis and augmentation, will play critical roles in shaping the future landscape.
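Semi-supervised learning covers many techniques; one of the simplest is self-training (pseudo-labeling), where a model trained on a few labeled examples labels the unlabeled points it is confident about and is then refit. The sketch below assumes one-dimensional data, a nearest-centroid model, and a distance-margin confidence score; the threshold and toy data are hypothetical.

```python
from typing import Dict, List, Tuple


def fit_centroids(points: List[Tuple[float, str]]) -> Dict[str, float]:
    """1-D nearest-centroid model: the mean feature value of each label."""
    groups: Dict[str, List[float]] = {}
    for x, y in points:
        groups.setdefault(y, []).append(x)
    return {y: sum(xs) / len(xs) for y, xs in groups.items()}


def predict_with_margin(centroids: Dict[str, float], x: float) -> Tuple[str, float]:
    # Rank labels by distance; the margin (runner-up distance minus best distance)
    # serves as a crude confidence score. Assumes at least two labels.
    ranked = sorted(centroids, key=lambda y: abs(x - centroids[y]))
    best, second = ranked[0], ranked[1]
    return best, abs(x - centroids[second]) - abs(x - centroids[best])


def self_train(
    labeled: List[Tuple[float, str]],
    unlabeled: List[float],
    min_margin: float = 1.0,
    max_rounds: int = 5,
) -> Tuple[Dict[str, float], List[Tuple[float, str]]]:
    """Self-training: pseudo-label confident unlabeled points, then refit."""
    labeled = list(labeled)
    pool = list(unlabeled)
    for _ in range(max_rounds):
        centroids = fit_centroids(labeled)
        confident, uncertain = [], []
        for x in pool:
            label, margin = predict_with_margin(centroids, x)
            if margin >= min_margin:
                confident.append((x, label))
            else:
                uncertain.append(x)
        if not confident:
            break  # nothing left that the model is sure about
        labeled.extend(confident)
        pool = uncertain
    return fit_centroids(labeled), labeled


# Two hand-labeled points plus unlabeled ones (hypothetical toy data).
centroids, grown = self_train([(0.0, "a"), (10.0, "b")], [1.0, 2.0, 8.0, 9.0, 5.0])
print(centroids, len(grown))
```

The ambiguous point at 5.0 is never pseudo-labeled, which illustrates the key design choice: only confident predictions are allowed to expand the training set, limiting how far early mistakes can propagate.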

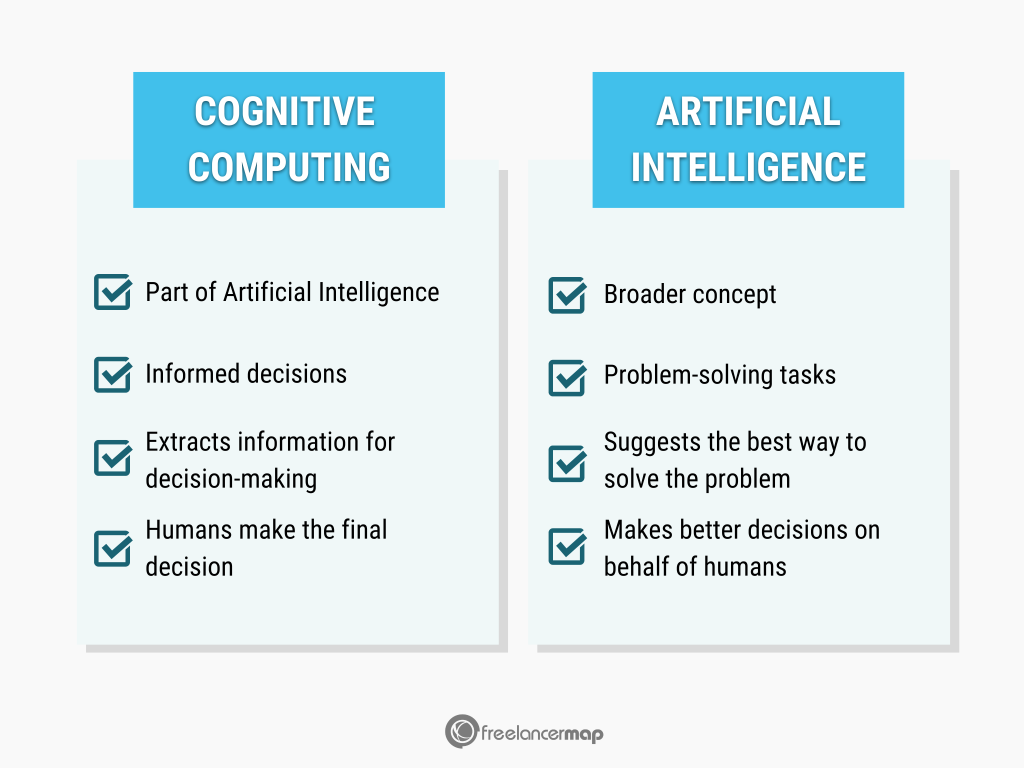

Moreover, as cognitive models and our understanding of human learning processes advance, we can expect supervised learning algorithms to become even more efficient, requiring less data and fewer computational resources to achieve superior results.

Conclusion: A Journey Towards More Intelligent Machines

The exploration and refinement of supervised learning techniques mark a significant chapter in the evolution of AI and machine learning. While my journey from a Master’s degree focusing on AI and ML to spearheading DBGM Consulting, Inc., has offered me a firsthand glimpse into the expansive potential of supervised learning, the field continues to evolve at an exhilarating pace. As researchers, developers, and thinkers, our quest is to keep probing, understanding, and innovating—driving towards creating AI that not only automates tasks but also enriches human lives with intelligence that’s both profound and practical.

The journey of supervised learning in machine learning is not just about creating more advanced algorithms; it’s about paving the way for AI systems that understand and interact with the world in ways we’re just beginning to imagine.

For more deep dives into machine learning, AI, and beyond, feel free to explore my other discussions of related topics on my blog.