Decoding the Complex World of Large Language Models

As Artificial Intelligence (AI) continues to evolve, it is increasingly evident that Large Language Models (LLMs) are a cornerstone of modern AI applications. My journey, from studying information systems and Artificial Intelligence at Harvard University to founding DBGM Consulting, Inc., which specializes in AI solutions, has given me a useful vantage point on the nuances and potential of LLMs. In this article, we will look at the technical intricacies and real-world applicability of LLMs, steering clear of speculation and focusing on their scientific underpinnings.

The Essence and Evolution of Large Language Models

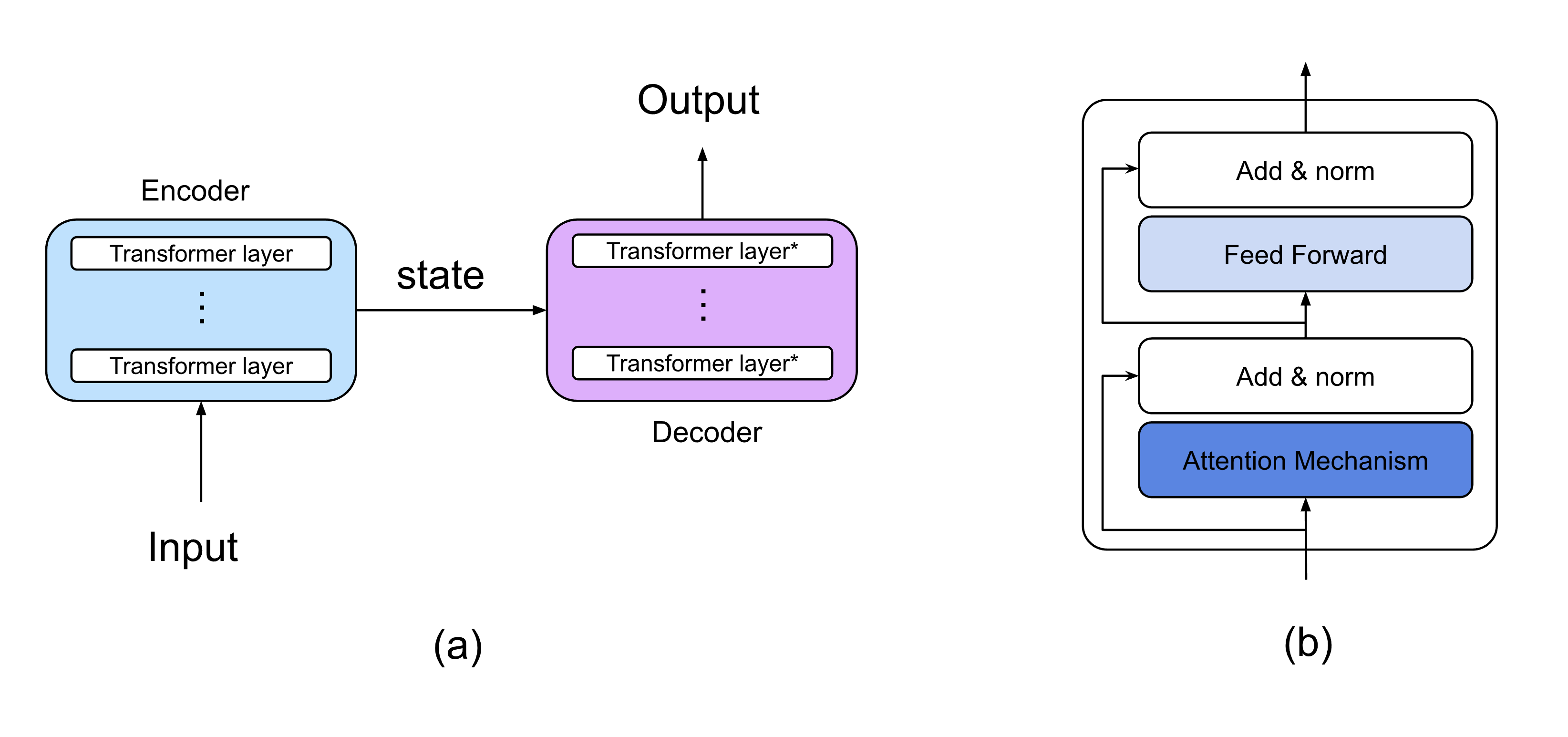

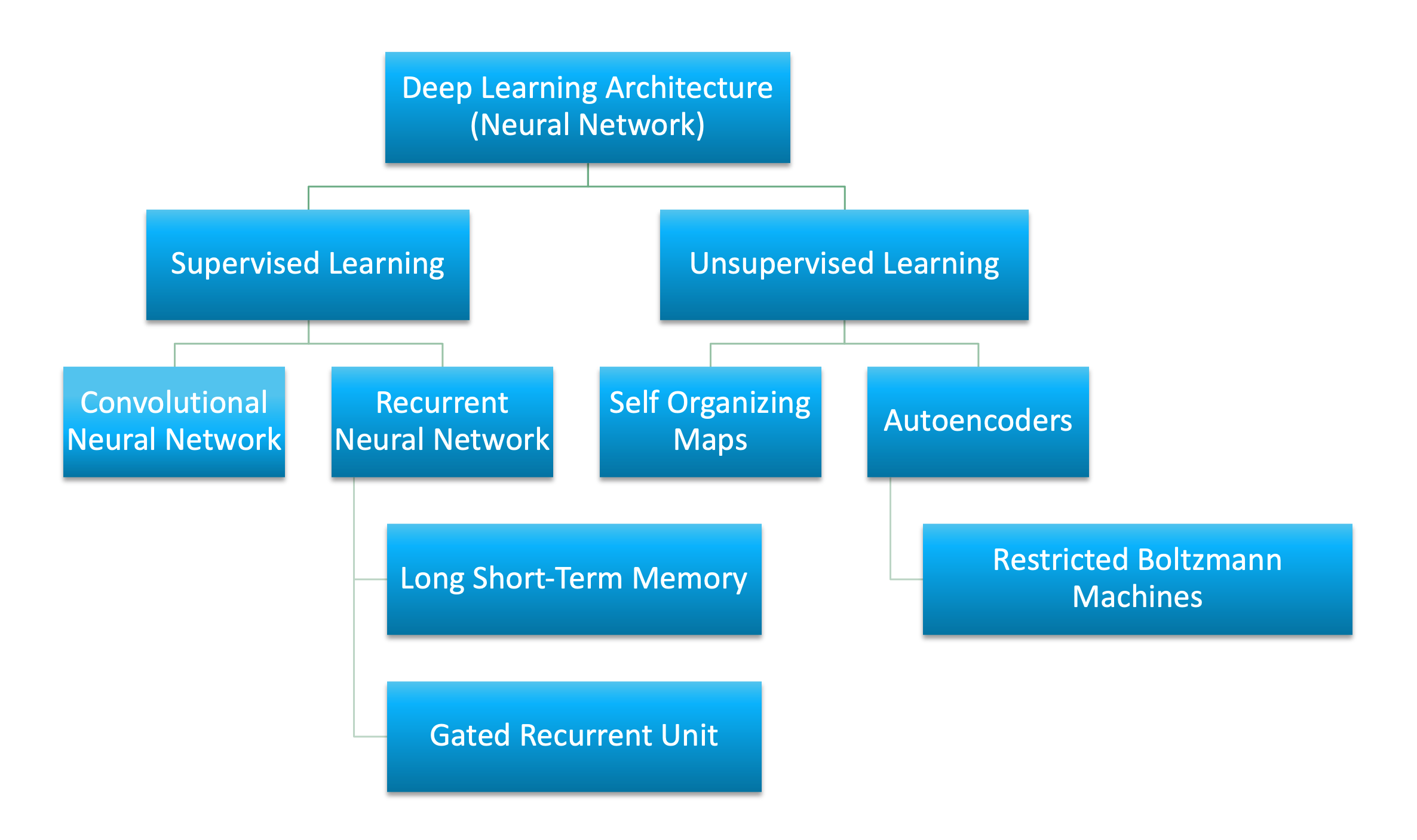

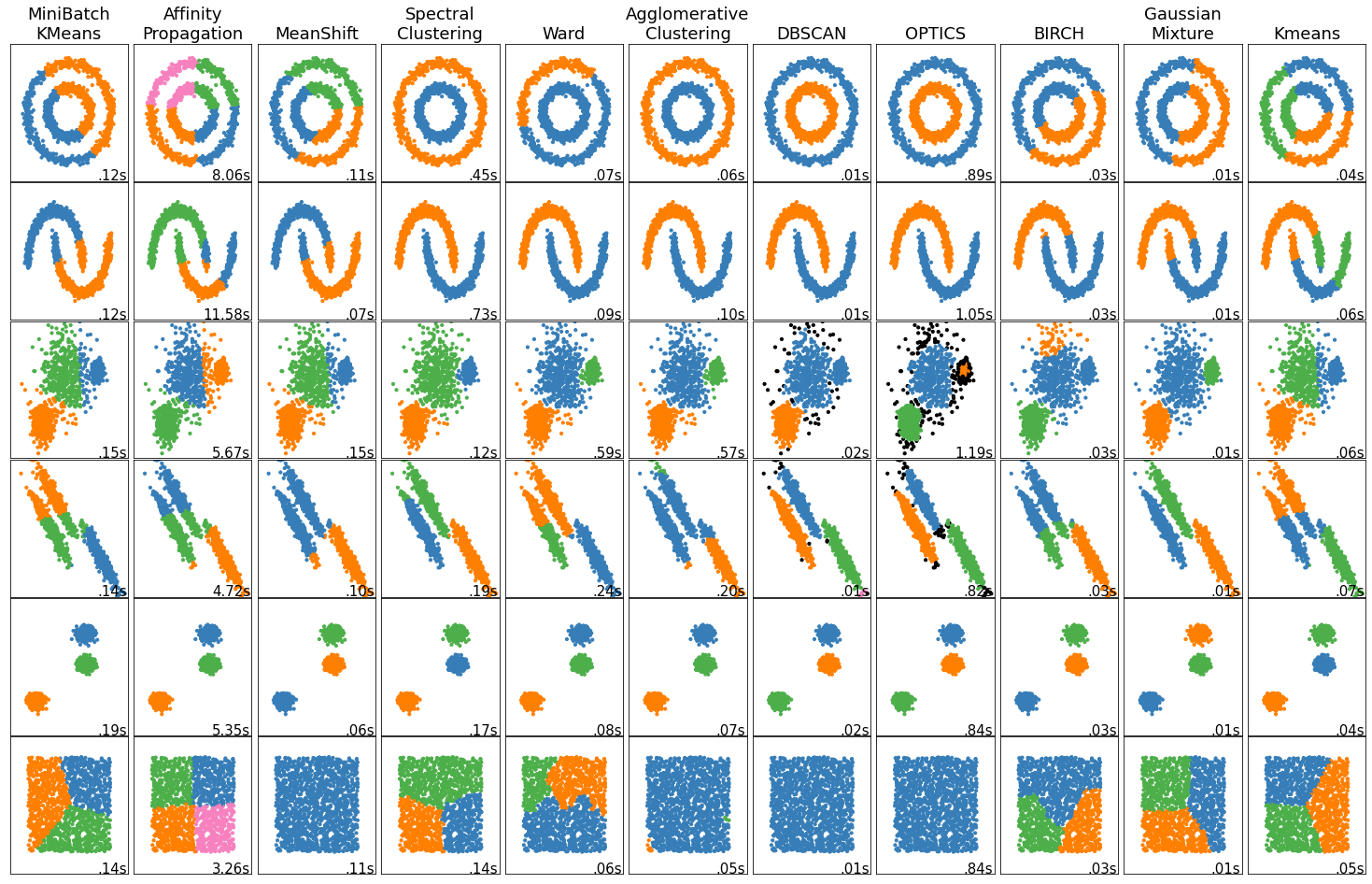

LLMs, at their core, are advanced algorithms capable of understanding, generating, and interacting with human language in ways that were previously unimaginable. What sets them apart in the AI landscape is that they learn to process and generate language from vast datasets, which lets them mimic human-like comprehension and responses. As detailed in my previous discussions on dimensionality reduction, such models benefit from reducing the complexity of vast datasets, which improves their efficiency and performance. This matters all the more given the scalability and adaptability required in today’s dynamic tech landscape.
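To make "generating language based on data" concrete, here is a deliberately tiny sketch. It is not how an LLM is built: real models use neural networks with billions of parameters, while this toy simply counts which word follows which. The function names `train_bigram` and `generate` and the one-sentence corpus are my own illustrative choices.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Greedily append the most frequent next word at each step."""
    output = [start]
    for _ in range(max_words - 1):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # the last word never had a successor in the training text
        # Ties are broken alphabetically so the result is deterministic.
        best = min(candidates, key=lambda w: (-candidates[w], w))
        output.append(best)
    return " ".join(output)

# Hypothetical toy corpus; a real model is trained on vastly more text.
corpus = "the model reads text and the model predicts the next word"
model = train_bigram(corpus)
print(generate(model, "the", max_words=4))  # prints "the model predicts the"
```

The principle that carries over to LLMs is only the general one: the model's output is driven by statistical patterns learned from its training data, one token at a time.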

Technical Challenges and Breakthroughs in LLMs

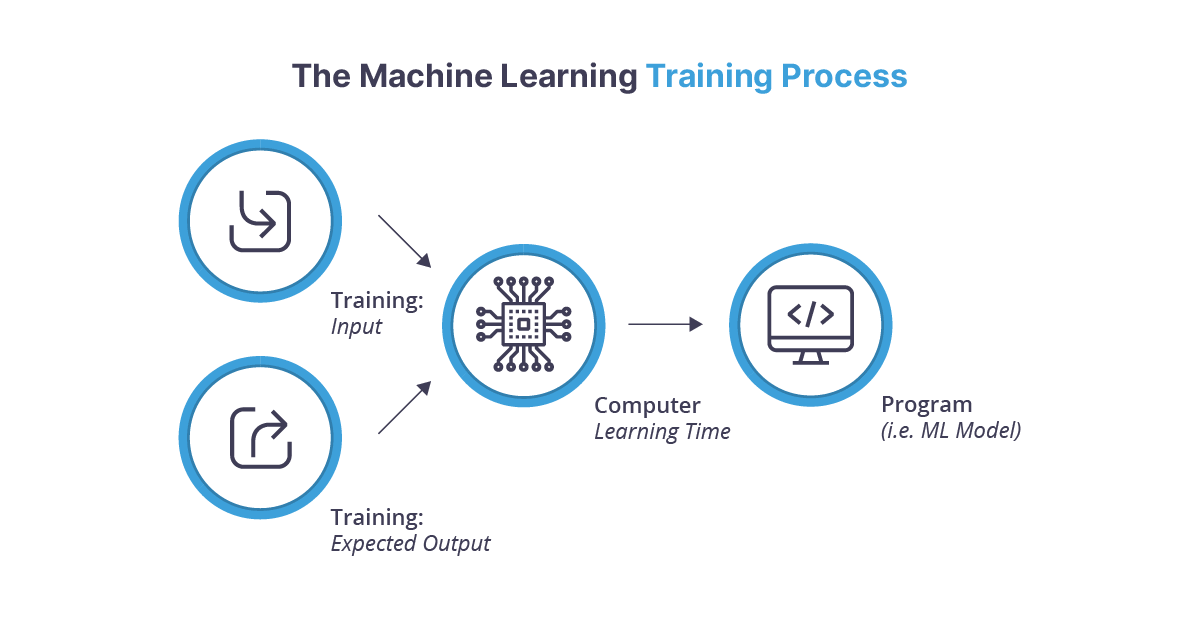

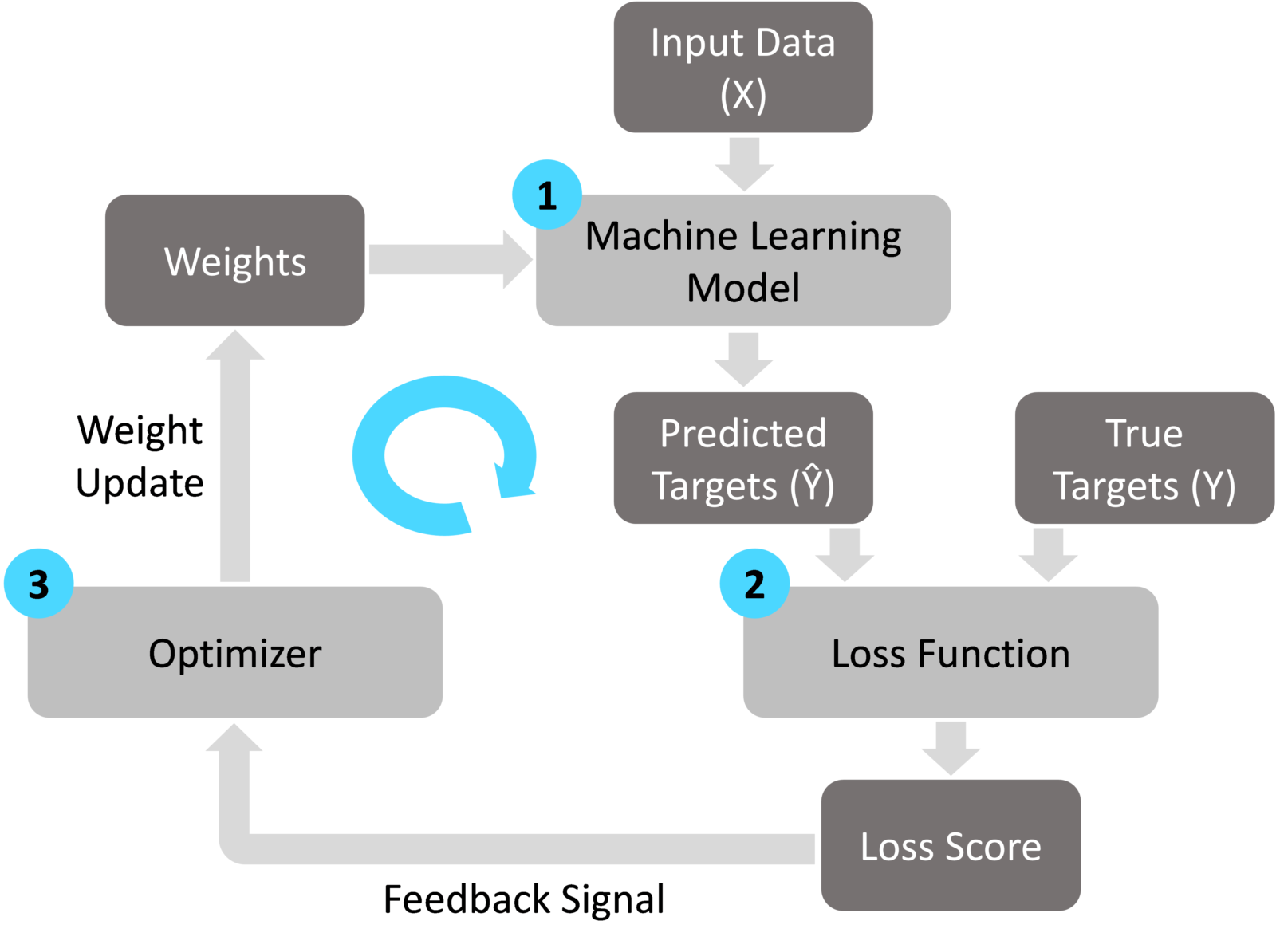

One of the most pressing challenges in the field of LLMs is the sheer computational power required to train these models. The energy, time, and resources necessary to process the colossal datasets on which these models are trained cannot be overstated. During my time working on machine learning algorithms for self-driving robots, the parallel with LLMs was unmistakable: both require meticulous architecture and vast datasets to refine their decision-making processes. However, recent advancements in cloud computing and specialized hardware have begun to mitigate these challenges, ushering in a new era of efficiency and possibility.
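For a rough sense of scale, a widely used rule of thumb for dense transformer models puts training compute at about 6 floating-point operations per parameter per training token. The sketch below applies that approximation. The model size, token count, accelerator count, and sustained throughput are all hypothetical numbers chosen for illustration, not measurements of any particular system.

```python
SECONDS_PER_DAY = 86_400

def training_flops(num_parameters, num_tokens):
    """Approximate total training compute using the ~6 * N * D rule of thumb."""
    return 6 * num_parameters * num_tokens

def training_days(total_flops, num_accelerators, flops_per_second_each):
    """Convert total compute into wall-clock days for an idealized cluster."""
    cluster_throughput = num_accelerators * flops_per_second_each
    return total_flops / cluster_throughput / SECONDS_PER_DAY

# Hypothetical example: a 7-billion-parameter model trained on 1 trillion tokens,
# using 1,000 accelerators that each sustain an assumed 1e14 FLOP/s.
flops = training_flops(num_parameters=7e9, num_tokens=1e12)
days = training_days(flops, num_accelerators=1000, flops_per_second_each=1e14)
print(f"{flops:.2e} FLOPs, roughly {days:.1f} days")
```

Under these assumptions the estimate comes to about 4.2e22 operations, or just under five days on the assumed cluster. Real training runs take longer, since hardware rarely sustains its peak throughput and the estimate ignores restarts, evaluation, and data loading.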

An equally significant development has been the focus on ethical AI and bias mitigation in LLMs. The profound impact that these models can have on society necessitates a careful, balanced approach to their development and deployment. My experience at Microsoft, guiding customers through cloud solutions, resonated with the ongoing discourse around LLMs – the need for responsible innovation and ethical considerations remains paramount across the board.

Real-World Applications and Future Potential

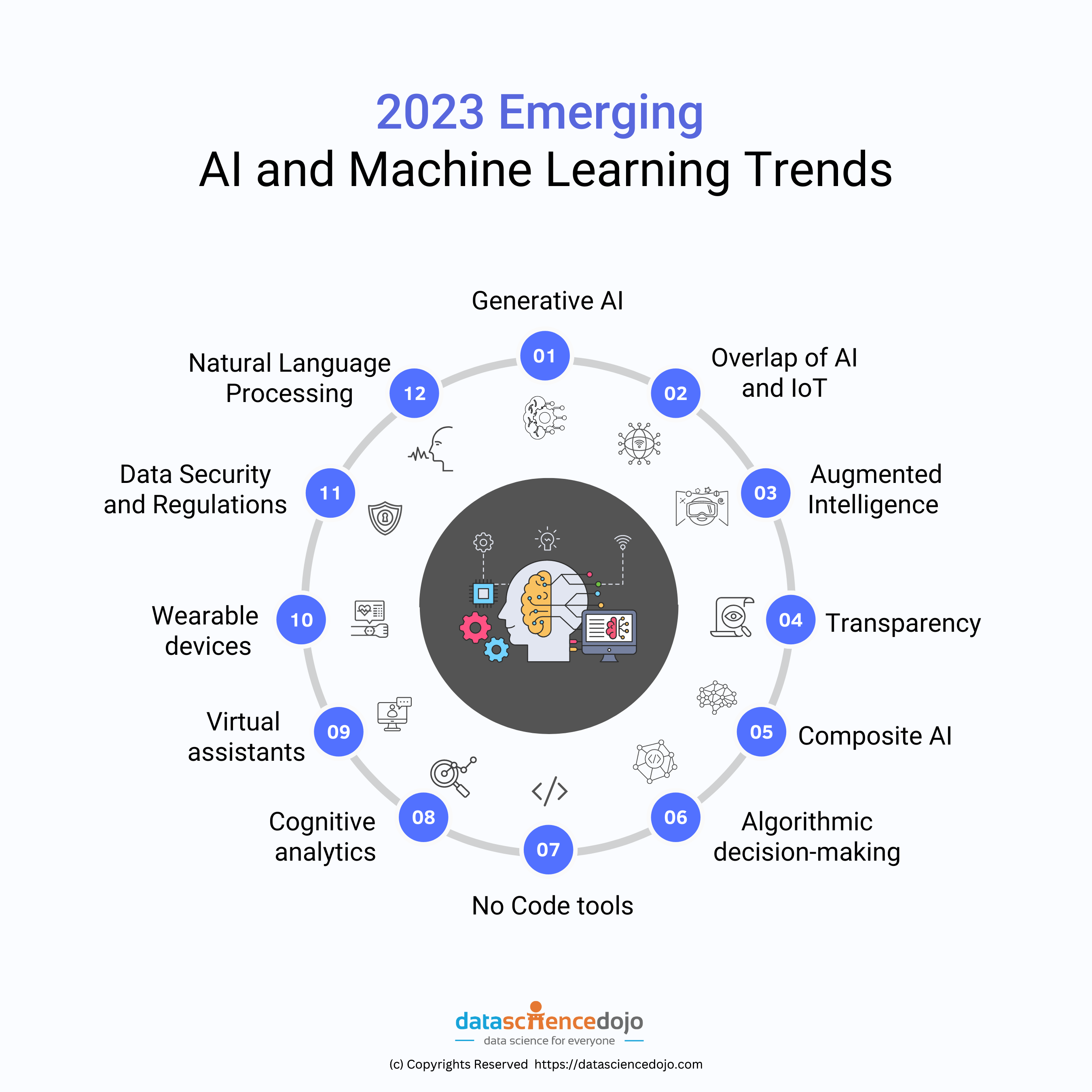

The practical applications of LLMs are as diverse as they are transformative. From enhancing natural language processing tasks to revolutionizing chatbots and virtual assistants, LLMs are reshaping how we interact with technology on a daily basis. Perhaps one of the most exciting prospects is their potential in automating and improving educational resources, reaching learners at scale and in personalized ways that were previously inconceivable.

Yet, as we stand on the cusp of these advancements, it is crucial to navigate the future of LLMs with a blend of optimism and caution. The potential for reshaping industries and enhancing human capabilities is immense, but so are the ethical, privacy, and security challenges these models present. In my personal journey, from exploring the depths of quantum field theory to the art of photography, the constant has been a pursuit of knowledge tempered with responsibility: a principle that remains vital as we chart the course of LLMs in our society.

Conclusion

Large Language Models stand at the frontier of Artificial Intelligence, representing both the incredible promise and the profound challenges of this burgeoning field. As we delve deeper into their capabilities, the need for interdisciplinary collaboration, rigorous ethical standards, and continuous innovation becomes increasingly clear. Drawing from my extensive background in AI, cloud solutions, and ethical computing, I remain cautiously optimistic about the future of LLMs. Their ability to transform how we communicate, learn, and interact with technology holds untold potential, provided we navigate their development with care and responsibility.

As we continue to explore the vast expanse of AI, let us do so with a commitment to progress, a dedication to ethical considerations, and an unwavering curiosity about the unknown. The journey of understanding and harnessing the power of Large Language Models is just beginning, and it promises to be a fascinating one.
