Google Cloud Architecture

Role of the Google Cloud Architect

As a Google Cloud architect, your job is to help customers leverage Google Cloud technologies. You need a deep understanding of cloud architecture and the Google Cloud Platform (GCP). You should be able to design, develop, and manage robust, secure, scalable, highly available, and dynamic cloud solutions that drive business objectives. You should be skilled in enterprise cloud strategy, solution strategy, and architectural design best practices. An architect is also experienced in software development methodologies and in software approaches that may span multi-cloud or hybrid environments.

Getting Started with Google Cloud Platform

To get your own free account, go to https://cloud.google.com/ and click “Get Started for Free”. This gets you into the Cloud Console with some free credit to try out different services.

Cloud Resources, Regions and Zones

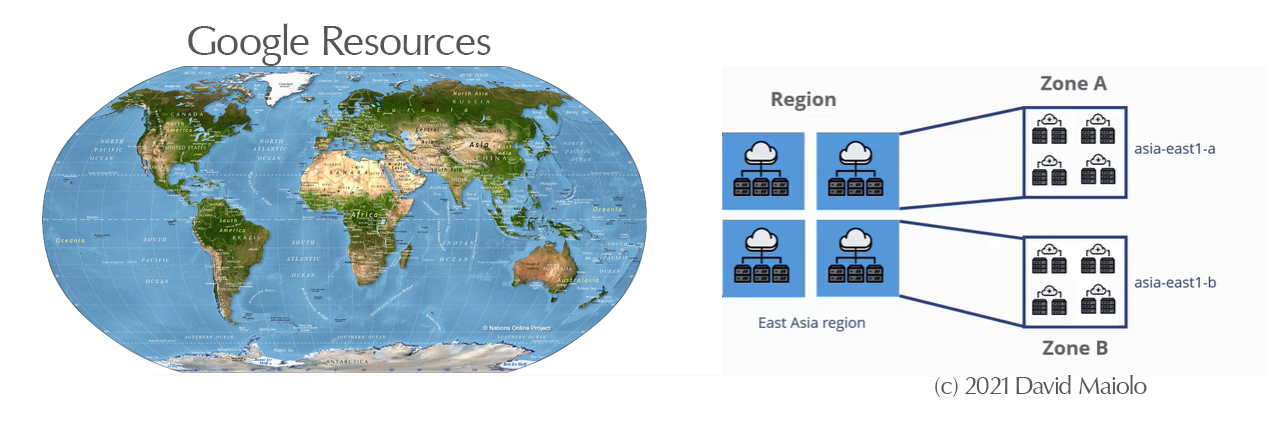

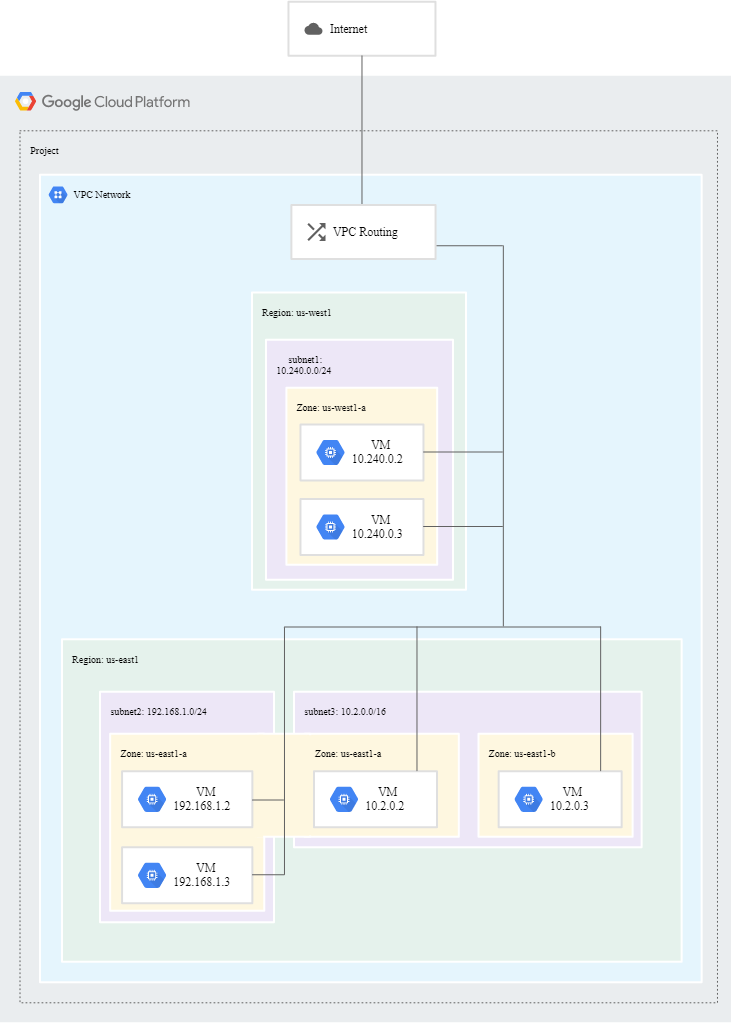

Before getting too deep into Google Cloud Platform, it is important to understand resources, regions and zones. A resource is either a physical asset, such as a server or hard drive, or a virtual asset, such as a virtual machine, sitting in one of Google’s datacenters. Google makes these resources available to its customers, and you use them to create your own instances and so on.

As I mentioned, these resources all live in a datacenter somewhere, and that somewhere is called a region. So think about it: if Google has a physical server in a datacenter in South Carolina, then regardless of where you are when you access that resource, it is in an eastern US region (us-east1). Regions are further divided into isolated zones. For example, the us-east1 region contains the zones us-east1-b, us-east1-c and us-east1-d.
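
Since a zone name is just the region name plus a letter suffix, you can recover the region from the zone with a little string handling. Here is a minimal sketch; the helper names `region_of` and `group_by_region` are made up for illustration and are not part of any Google library.

```python
def region_of(zone: str) -> str:
    """Return the region part of a zone name such as 'us-east1-b'."""
    region, sep, suffix = zone.rpartition("-")
    if not sep or not region or not suffix:
        raise ValueError(f"not a zone name: {zone!r}")
    return region


def group_by_region(zones):
    """Group zone names under the region each one belongs to."""
    grouped = {}
    for zone in zones:
        grouped.setdefault(region_of(zone), []).append(zone)
    return grouped


print(group_by_region(["us-east1-b", "us-east1-c", "europe-west1-b"]))
```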

The point of making regions and zones visible to you as the customer is that you can choose where things physically live in the cloud, both for redundancy in case of failure and for reduced latency to your audience. Think about it: if Google never told you where the virtual machine you are creating was physically hosted, then it might end up in China, on a flood plain. If you were a US customer, wouldn’t you want to be able to put your virtual machine on a US server and, if that server were also on a flood plain, keep a redundant copy of it in a separate, isolated zone as well?

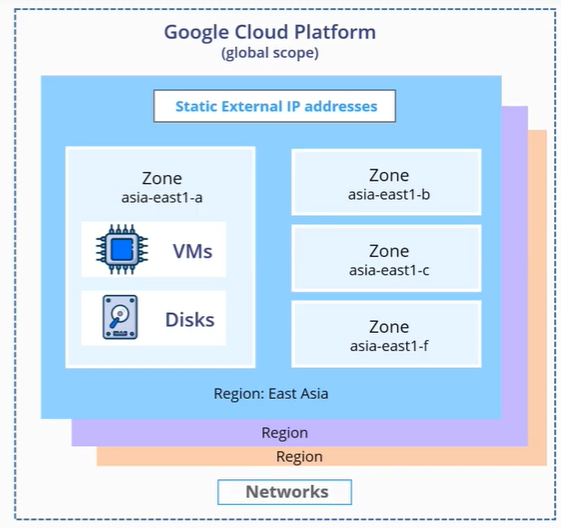

The Scope of Resources

This distribution of resources also introduces some rules about how resources can be used together. A resource’s scope tells us which other resources can access it. Some resources can be accessed by any other resource, across regions and zones. These global resources (name makes sense, doesn’t it?) include addresses, disk images, disk snapshots, firewalls, routes and Cloud Interconnects.

Other resources can only be accessed by resources that are located in the same region. These are called regional resources and include static external IP addresses, subnets, and regional persistent disks.

Further, some resources can only be accessed by resources that are in the same zone, and these zonal resources include Virtual Machine Instances, machine types available in that zone, persistent disks and cloud TPUs.

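So, if you have a VM instance over in us-east1-b, can it attach a persistent disk that lives in us-east1-c? I’ll let you answer that. (Hint: no.) Keep in mind that scope is about which resources can be used together, not about network reachability: two VM instances in different zones can still talk to each other over the network.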

Finally, when an operation is performed, such as “hey, I’m gonna go create a virtual machine instance”, that operation is scoped as global, regional or zonal, depending on what the operation is creating. This is known as the scope of the operation. In our example, creating a VM instance is a zonal operation because VM instances are zonal resources. Make sense?
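
To make the scoping rules concrete, here is a rough sketch that encodes a few of the resource types listed above and checks whether a VM instance in a given zone could use one of them. The table keys and the `can_use` helper are illustrative names of my own, not a Google API, and the table only covers the examples from this section.

```python
# Scope of a few resource types, taken from the lists in this section.
RESOURCE_SCOPE = {
    "disk_image": "global",
    "disk_snapshot": "global",
    "firewall": "global",
    "route": "global",
    "subnet": "regional",
    "static_external_ip": "regional",
    "regional_persistent_disk": "regional",
    "vm_instance": "zonal",
    "persistent_disk": "zonal",
    "cloud_tpu": "zonal",
}


def region_of(zone):
    """Return the region part of a zone name, e.g. 'us-east1-b' -> 'us-east1'."""
    return zone.rpartition("-")[0]


def can_use(resource_type, resource_location, consumer_zone):
    """Return True if a consumer in consumer_zone can use the resource.

    resource_location is ignored for global resources, is a region name
    for regional resources, and is a zone name for zonal resources.
    """
    scope = RESOURCE_SCOPE[resource_type]
    if scope == "global":
        return True
    if scope == "regional":
        return resource_location == region_of(consumer_zone)
    return resource_location == consumer_zone


print(can_use("disk_image", None, "europe-west1-b"))
print(can_use("subnet", "us-east1", "us-east1-b"))
print(can_use("persistent_disk", "us-east1-c", "us-east1-b"))
```

The three calls cover one global, one regional and one zonal case; only the last one is refused, because the disk and the consumer sit in different zones.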

Google Cloud Platform Console

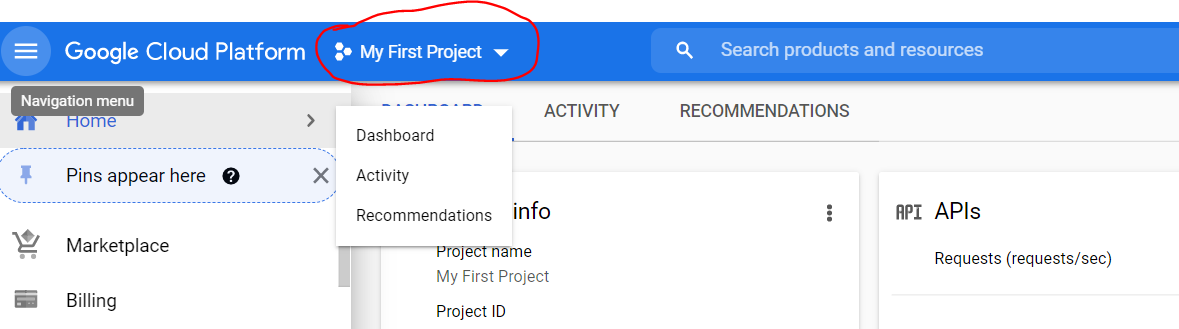

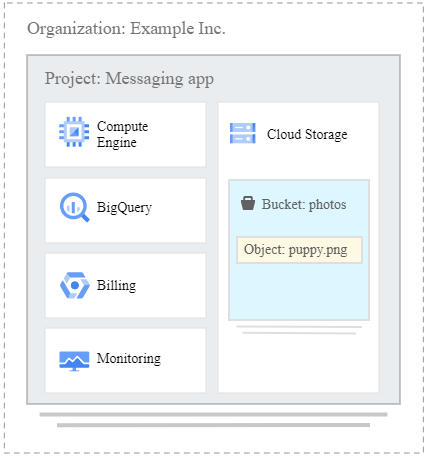

Projects

Look back at the https://console.cloud.google.com/ dashboard you opened earlier. Any GCP resource that you allocate and use must be placed into a project. Think of a project as the organizing entity for what you are building. To create a new project, just click the project drop-down and select ‘New Project’.

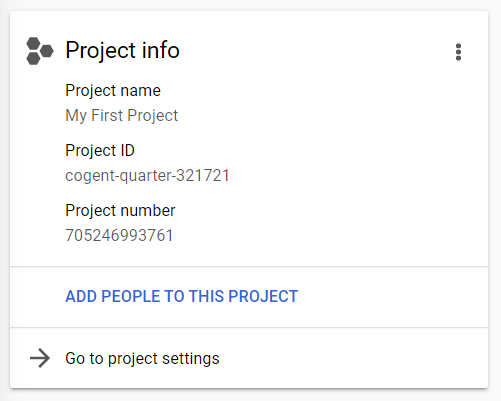

Each GCP project has a project name, a project ID and a project number. As you work with GCP, you’ll use these identifiers in certain command lines and API calls.
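
Since the project ID ends up in commands and URLs, it helps to check it before using it. The sketch below assumes the usual project ID rules (6 to 30 characters; lowercase letters, digits and hyphens; starts with a letter; does not end with a hyphen). Treat the exact rule as an assumption and check the current documentation; `is_valid_project_id` is a hypothetical helper name.

```python
import re

# Assumed rule: 6-30 characters, lowercase letters, digits and hyphens,
# starting with a letter and not ending with a hyphen.
PROJECT_ID_RE = re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]")


def is_valid_project_id(project_id: str) -> bool:
    """Return True if project_id matches the assumed project ID format."""
    return PROJECT_ID_RE.fullmatch(project_id) is not None


for candidate in ["my-sample-project-123", "My-Project", "short"]:
    print(candidate, is_valid_project_id(candidate))
```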

Interacting with GCP via Command Line

Google Cloud SDK

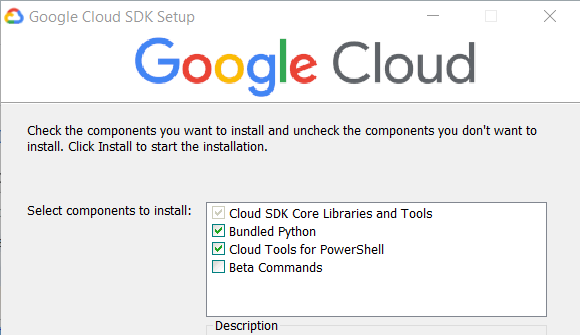

To interact with GCP via the command line, install the Google Cloud SDK from https://cloud.google.com/sdk. The SDK includes gcloud (the primary CLI), gsutil (a Python application for working with Cloud Storage), and bq (a Python-based BigQuery tool).

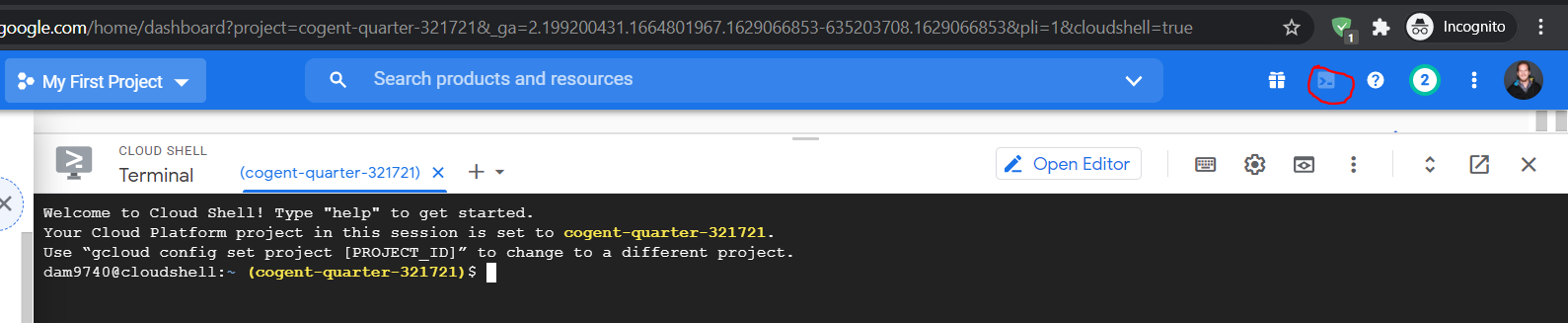

Google Cloud Shell

You can also access gcloud from Cloud Shell. Within the console, click Cloud Shell in the upper right corner. This will provision an e2 virtual machine in the cloud that you can use from any browser to open a shell.

Google Cloud API

Google Cloud APIs are central to Google Cloud Platform, giving you programmatic access to storage, instances, machine-learning-based tasks and more.

You can access Cloud APIs in a few different ways:

- From a server with Google client libraries installed.

- From mobile applications via the Firebase SDKs.

- From the Google Cloud SDK or Google Cloud Console, using the gcloud CLI to manage authentication, local configuration, developer workflow, and interactions with Google Cloud APIs.
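
Under the hood, all of these routes end up calling REST endpoints. As a rough illustration, the sketch below only builds the URL that lists the VM instances in one zone through the Compute Engine v1 REST API; it does not send a request. The project ID and zone are placeholder values, `instances_url` is a made-up helper, and a real call would also need an OAuth 2.0 access token, which the client libraries and gcloud handle for you.

```python
from urllib.parse import quote

# Base URL of the Compute Engine v1 REST API.
COMPUTE_API = "https://compute.googleapis.com/compute/v1"


def instances_url(project_id: str, zone: str) -> str:
    """Build the REST URL for the instances collection of one zone."""
    return (
        f"{COMPUTE_API}/projects/{quote(project_id, safe='')}"
        f"/zones/{quote(zone, safe='')}/instances"
    )


# Placeholder project ID and zone, for illustration only.
print(instances_url("my-sample-project", "us-east1-b"))
```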

Google Cloud Platform Services

Let’s look at the most common Google Cloud services in use.

Computing & Hosting Services

Google Cloud’s computing and hosting services allow you to work in a serverless environment, use a managed application platform, leverage container technologies, and build your own cloud infrastructure to have the most control and flexibility. Common services include:

Virtual Machines

Google Cloud’s unmanaged compute service is called Compute Engine (virtual machines). It is GCP’s Infrastructure as a Service (IaaS) offering. The system provides you with a robust computing infrastructure, but you must choose and configure the platform components that you want to use. It remains your responsibility to configure, administer and monitor the machines. Google will ensure that resources are available, reliable and ready for you to use, but it is up to you to provision and manage them. The advantage is that you have complete control of the systems and a great deal of flexibility.

You use Compute Engine to:

- Create Virtual Machines, called instances

- Choose global regions and zones to deploy your resources

- Choose what Operating System, deployment stack, framework, etc. you want

- Create instances from public or private images

- Deploy pre-configured software packages with Google Cloud Marketplace (for example, a LAMP stack with a few clicks)

- Use GCP storage technologies, or use 3rd party services for storage

- Use autoscaling to automatically scale capacity based on need

- Attach/Detach disks as needed

- Connect to instances via SSH
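
To tie a few of these choices together (zone, machine type, image), here is a small sketch that assembles a `gcloud compute instances create` command line. It only builds and prints the command; it does not run it. The instance name, zone, machine type and image values are example values picked for illustration, and `create_instance_command` is a hypothetical helper.

```python
import shlex


def create_instance_command(name, zone, machine_type="e2-micro",
                            image_family="debian-12",
                            image_project="debian-cloud"):
    """Return the argument list for creating one VM instance with gcloud."""
    return [
        "gcloud", "compute", "instances", "create", name,
        f"--zone={zone}",
        f"--machine-type={machine_type}",
        f"--image-family={image_family}",
        f"--image-project={image_project}",
    ]


# Example values only; pick your own name, zone and machine type.
cmd = create_instance_command("demo-vm", "us-east1-b")
print(shlex.join(cmd))
```

Keeping the command as a list of arguments makes it easy to hand to `subprocess.run` later without worrying about shell quoting.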

Managed Application Platform

Google Cloud’s managed application platform is called App Engine. This is GCP’s Platform as a Service (PaaS) offering. App Engine handles most of the management of the resources on your behalf. For example, if your application requires more computing resources because traffic to your site increases, GCP will automatically scale the system to provide those resources. Likewise, if the underlying software requires a security update, GCP handles that for you automatically.

When you build your app on App Engine:

- You can build your app in several languages, including Go, Java, Node.js, PHP, Python, or Ruby

- Use pre-configured runtimes or use custom runtimes for any language

- GCP will manage app hosting, scaling, monitoring and infrastructure for you

- Connect with Google Cloud storage products

- Use GCP storage technologies or any 3rd party storage

- Connect to Redis databases or host 3rd party databases like MongoDB and Cassandra.

- Use Web Security Scanner to identify security vulnerabilities.

Containers

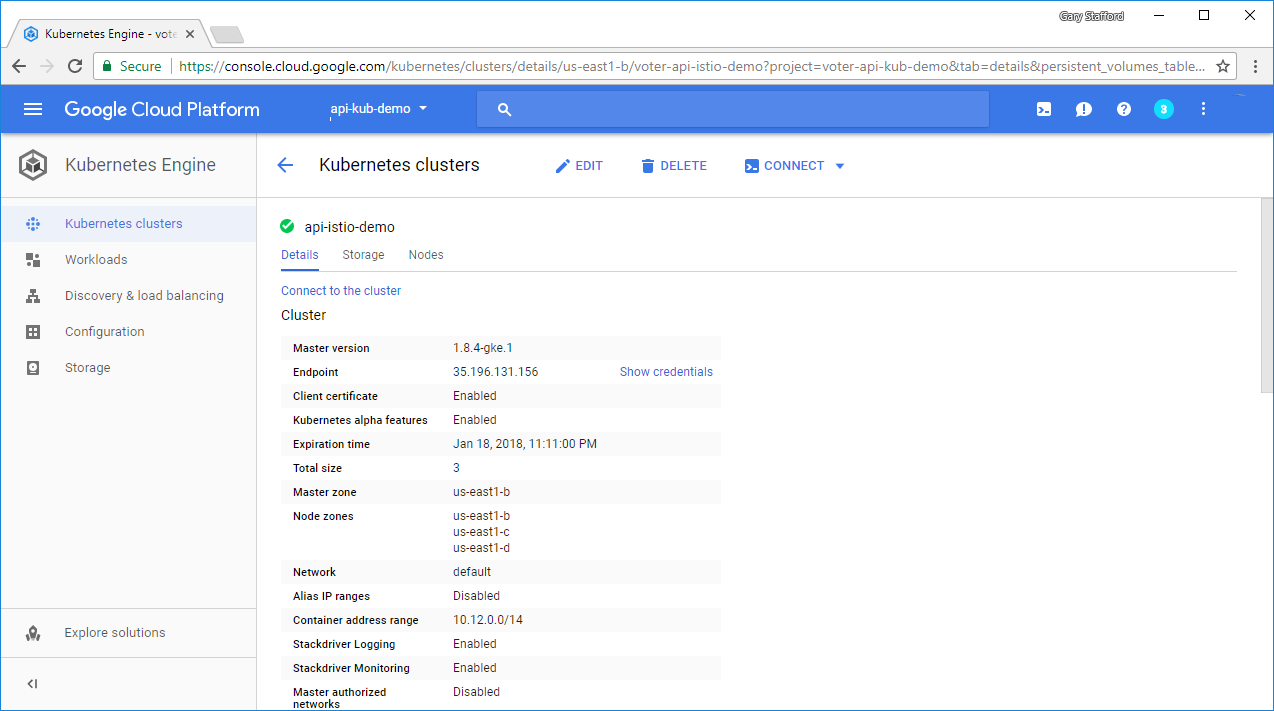

With container-based computing, you can focus on your application code rather than infrastructure deployments and integration. Google Kubernetes Engine (GKE) is GCP’s Containers as a Service (CaaS) offering. Kubernetes is open source and allows flexibility of on-premises, hybrid and public cloud infrastructure.

GKE allows you to:

- Create and manage groups of Compute Engine instances running Kubernetes, called clusters.

- GKE uses Compute Engine instances as nodes in the cluster

- Each node runs the Docker runtime, a Kubernetes node agent that monitors the health of the node, and a network proxy

- Declare the requirements for your Docker containers by utilizing simple JSON config files

- Use Google’s Container Registry for management of Docker images

- Create an external network load balancer
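
As an illustration of declaring container requirements in a JSON config file, the sketch below builds a minimal Kubernetes Pod manifest and prints it as JSON. The pod name, image path and resource requests are placeholder values, and `pod_manifest` is a helper invented for this example.

```python
import json


def pod_manifest(name, image, cpu="250m", memory="256Mi"):
    """Build a minimal Pod manifest with one container and resource requests."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "resources": {"requests": {"cpu": cpu, "memory": memory}},
                }
            ]
        },
    }


# Placeholder image path in a hypothetical project's registry.
manifest_json = json.dumps(
    pod_manifest("web", "gcr.io/my-sample-project/web:1.0"), indent=2
)
print(manifest_json)
```

You could save the printed JSON to a file and hand it to `kubectl apply -f`, which accepts JSON as well as YAML.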

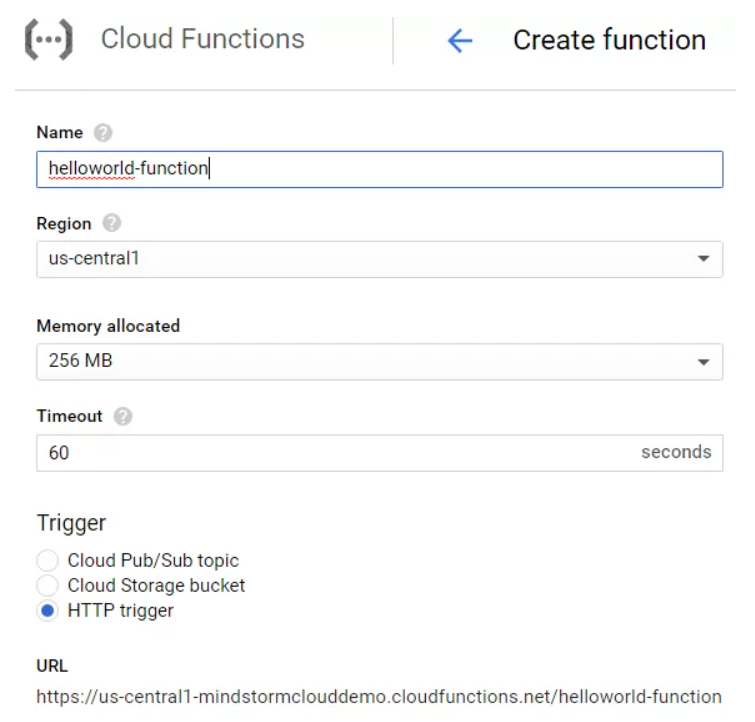

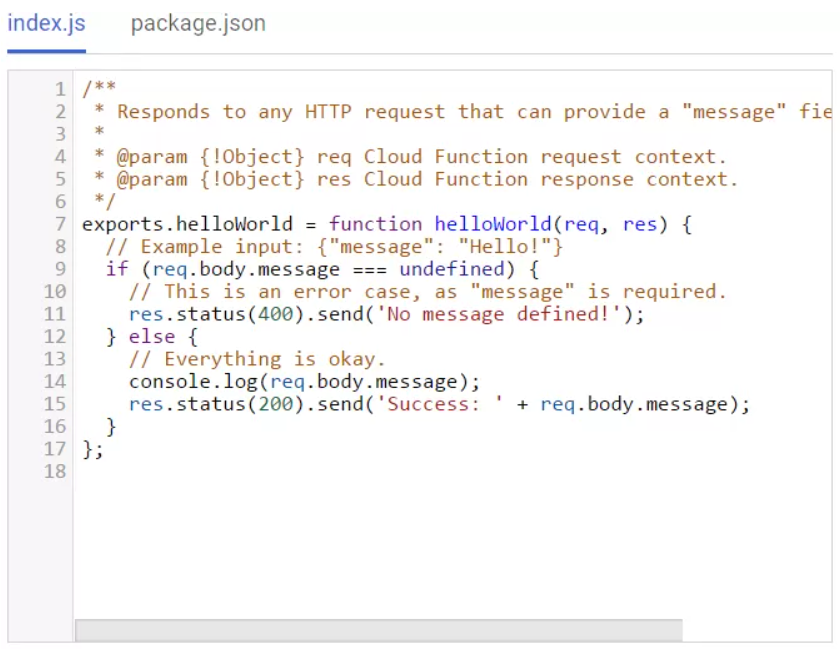

Serverless Computing

GCP’s serverless computing offering is known as Cloud Functions. It is Google Cloud’s Functions as a Service (FaaS) offering: an environment for building and connecting cloud services. Cloud Functions allows you to write simple, single-purpose functions that are attached to events produced by your cloud infrastructure and services. A function is triggered when an event being watched is fired. The code within the function then executes in a fully managed environment (no infrastructure to manage or configure). Cloud Functions are typically written in JavaScript, Python 3, Go or Java, and run in the matching Node.js, Python 3, Go or Java runtime on GCP. Because these are standard runtimes, you can also run the function in any Node.js, Python 3, Go or Java environment.

You do not have to stick with just one type of computing service. Feel free to combine App Engine and Compute Engine, for example, to take advantage of the features and benefits of both.
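
To give a feel for what such a function looks like, here is a minimal sketch of an event-driven handler in Python. It assumes the `(event, context)` signature used by first-generation background functions and an event payload with `name` and `bucket` fields, as sent for a Cloud Storage upload. The function name `on_file_uploaded` is made up, and the last line simply calls the handler locally with a fake event.

```python
def on_file_uploaded(event, context):
    """Handle a 'file uploaded' event.

    event is a dict describing what happened; context carries event
    metadata and is not used in this sketch.
    """
    name = event.get("name", "<unknown>")
    bucket = event.get("bucket", "<unknown>")
    message = f"File {name} uploaded to bucket {bucket}"
    print(message)
    return message


# Local smoke test with a fake event; no cloud resources involved.
on_file_uploaded({"name": "report.csv", "bucket": "my-sample-bucket"}, None)
```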

Storage Services

Regardless of your application or requirement, you’ll likely need to store some media files, backups, or other file-like objects. Google Cloud provides a variety of storage services.

Cloud Storage

Cloud Storage gives you consistent, reliable, large-capacity data storage. You can select Standard storage, which is meant for frequently accessed data, Cloud Storage Nearline for low-cost archival storage, or Cloud Storage Coldline for even lower-cost archival storage. Finally, there is Cloud Storage Archive, the lowest-cost archival class, which is ideal for backup and recovery or for data that you intend to access less than once a year.
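
Picking a class mostly comes down to how often you expect to read the data. The sketch below turns that into a rule of thumb. The Archive cut-off (less than once a year) comes from the text above; the Nearline (about once a month) and Coldline (about once a quarter) cut-offs are assumptions for illustration, so check current guidance and pricing before relying on them. `pick_storage_class` is a hypothetical helper.

```python
def pick_storage_class(accesses_per_year: float) -> str:
    """Suggest a Cloud Storage class from the expected reads per year."""
    if accesses_per_year < 1:
        return "ARCHIVE"    # read less than once a year
    if accesses_per_year <= 4:
        return "COLDLINE"   # assumed: about once a quarter or less
    if accesses_per_year <= 12:
        return "NEARLINE"   # assumed: about once a month or less
    return "STANDARD"       # frequently accessed data


for reads in (0.5, 4, 12, 365):
    print(reads, pick_storage_class(reads))
```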

Persistent Disks on Compute Engine

Persistent Disk on Compute Engine is used as the primary storage for your instances. It is offered as both hard-disk-based persistent disks (called “standard persistent disks”) and solid-state (SSD) persistent disks.

Filestore

Filestore gives you fully managed Network Attached Storage (NAS). You can use Filestore instances to store data from applications running on Compute Engine VM instances or GKE clusters.

Database Services

GCP provides a variety of SQL and NoSQL database services. The first is Cloud SQL, which is a managed SQL database service for MySQL or PostgreSQL databases. Next, Cloud Spanner is a fully managed, mission-critical relational database service. It offers transactional consistency at global scale, schemas, SQL querying, and automatic synchronous replication for high availability. Finally, there are two main options for NoSQL data storage: Firestore and Cloud Bigtable. Firestore is for document-like data and Cloud Bigtable is for tabular data.

You could also set up your preferred database technology on Compute Engine, running in a VM instance.

Networking Services

These are the common services under Google’s networking services.

Virtual Private Cloud

Google’s Virtual Private Cloud (VPC) provides networking functionality to Compute Engine virtual machine (VM) instances, GKE, and the App Engine environment. VPC provides networking for cloud-based services and is global, scalable and flexible. Global means that a single VPC can span multiple regions without communicating over the public internet. It is also shareable: a single VPC can be shared across an entire organization. Teams can be isolated within projects with separate billing and quotas, yet still sit within the same shared private IP space.

With a VPC, you can:

- Set firewall rules to govern traffic coming into instances on a network.

- Implement routes to have more advanced networking functions such as VPNs
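
To show roughly how firewall rules govern incoming traffic, here is a simplified sketch of ingress rule evaluation. It assumes that the rule with the lowest priority number wins, that deny beats allow at equal priority, and that traffic matching no rule is denied. Real VPC firewall rules support much more (port ranges, tags, service accounts), and the `IngressRule` class and `ingress_allowed` function are names invented for this example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass
class IngressRule:
    priority: int       # lower number is evaluated first
    action: str         # "allow" or "deny"
    source_range: str   # CIDR block the rule applies to
    protocol: str       # e.g. "tcp"
    port: int


def ingress_allowed(rules, source_ip, protocol, port):
    """Return True if the first matching rule allows the traffic."""
    # Sort by priority; at equal priority, deny rules come first.
    ordered = sorted(rules, key=lambda r: (r.priority, r.action != "deny"))
    for rule in ordered:
        if (rule.protocol == protocol and rule.port == port
                and ip_address(source_ip) in ip_network(rule.source_range)):
            return rule.action == "allow"
    return False  # nothing matched: ingress is denied


rules = [
    IngressRule(1000, "allow", "0.0.0.0/0", "tcp", 443),
    IngressRule(900, "deny", "203.0.113.0/24", "tcp", 443),
]
print(ingress_allowed(rules, "198.51.100.7", "tcp", 443))
print(ingress_allowed(rules, "203.0.113.9", "tcp", 443))
```

The first call is allowed by the broad HTTPS rule, while the second is refused because the more specific deny rule has the lower priority number.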

Load Balancing

If your website or application is running on Compute Engine, the time might come when you are ready to distribute the workload across multiple instances.

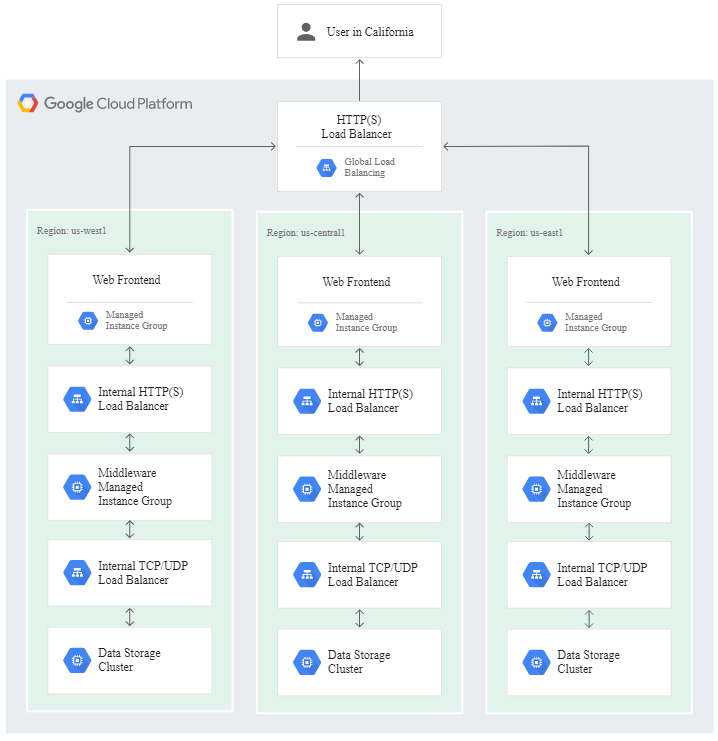

With Network Load Balancing, you can distribute traffic among server instances in the same region, based on incoming IP protocol data such as address, port, or protocol. Network Load Balancing can be a great solution if, for example, you want to meet the demands of increasing traffic to your website.
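
One common way to spread traffic based on address, port and protocol is to hash those fields and use the hash to pick an instance, so that packets from the same connection always land on the same server. The sketch below shows that general idea only; it is not Google’s actual algorithm, and `pick_backend` and the instance names are made up.

```python
import hashlib


def pick_backend(backends, src_ip, src_port, dst_ip, dst_port, protocol):
    """Pick a backend by hashing the connection's address/port/protocol."""
    key = f"{src_ip}:{src_port}>{dst_ip}:{dst_port}/{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]


# Hypothetical instances in one region.
backends = ["web-1", "web-2", "web-3"]
print(pick_backend(backends, "198.51.100.7", 50123, "203.0.113.10", 443, "tcp"))
```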

With HTTP(S) Load Balancing, you can distribute traffic across regions, so that requests are routed to the closest region or, if there is a failure (or a capacity limitation), fail over to a healthy instance in the next closest region. You can also use HTTP(S) Load Balancing to distribute traffic based on content type. For example, you might set up your servers to deliver static content such as media and images from one server, and any dynamic content from a different server.
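
Content-based routing boils down to a lookup from the request path to a group of servers. Here is a minimal sketch of that idea, assuming that the longest matching path prefix wins and that everything else goes to a default backend. The prefixes, the backend names and the `pick_backend_service` helper are illustrative only.

```python
def pick_backend_service(path, url_map, default):
    """Return the backend for a request path; the longest matching prefix wins."""
    matches = [prefix for prefix in url_map if path.startswith(prefix)]
    if not matches:
        return default
    return url_map[max(matches, key=len)]


# Hypothetical mapping: static content on one backend, the rest elsewhere.
URL_MAP = {"/static/": "static-backend", "/images/": "static-backend"}

print(pick_backend_service("/static/app.css", URL_MAP, "dynamic-backend"))
print(pick_backend_service("/checkout", URL_MAP, "dynamic-backend"))
```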

Cloud DNS

You can publish and maintain DNS records by using the same infrastructure Google uses. You can do this via the Cloud Console, command line, or REST APIs to work with managed zones and DNS records.

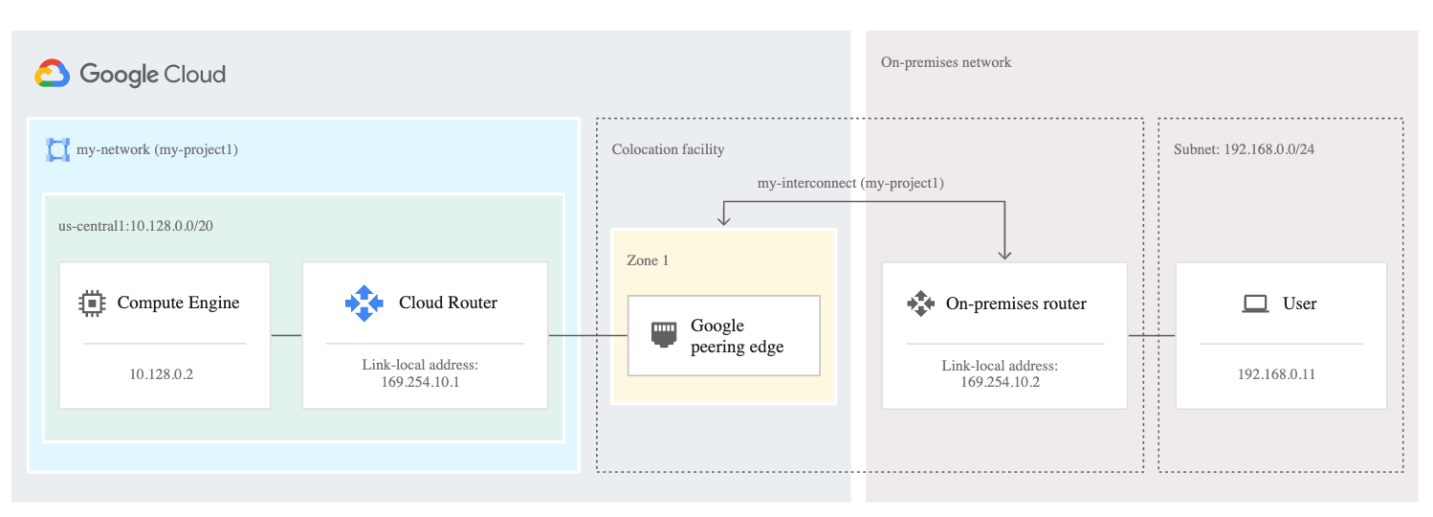

Advanced Connectivity

If you have an existing network that you want to connect to Google Cloud resources, Google Cloud offers the following advanced connectivity options:

- Cloud Interconnect: This allows you to connect your existing network to your VPC network through a highly available, low-latency, enterprise grade connection.

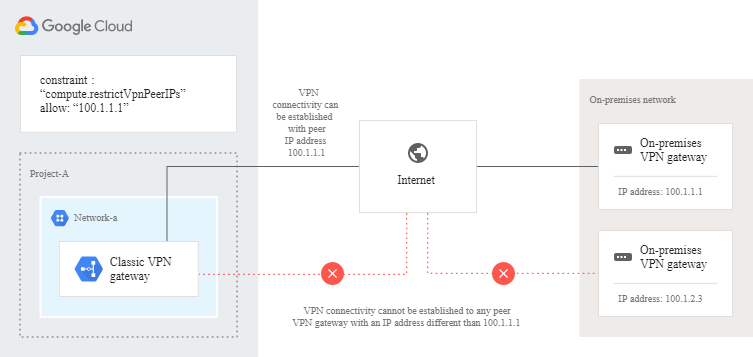

- Cloud VPN: This enables you to connect your existing network to your VPC network via an IPsec connection. You could also use this to connect two VPN Gateways to each other.

- Direct Peering: Enables you to exchange traffic between your business network and Google at one of Google’s broad-reaching edge network locations.

- Carrier Peering: Enables you to connect your infrastructure to Google’s network edge through highly available, lower-latency connections by using service providers.

Big Data and Machine Learning

Let’s look at some of Google’s commonly used Big Data and Machine Learning technologies.

Data Analysis

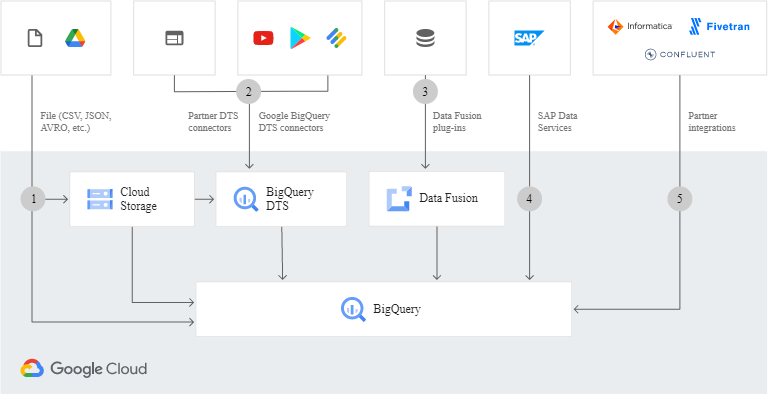

First, there is data analysis. GCP has BigQuery for data analysis tasks. BigQuery is an enterprise data warehouse that provides analysis services. With BigQuery you can organize data into datasets and tables, load data from a variety of sources, query massive datasets quickly, and manage and protect data. BigQuery ML enables you to build and operationalize ML models on planet-scale data directly inside BigQuery using simple SQL. BigQuery BI Engine is a fast, in-memory analysis service for BigQuery that allows you to analyze large and complex datasets interactively with sub-second query response time.

Batch and Streaming Data Processing

For batch and streaming data processing, Dataflow is a managed service for executing a wide variety of data processing patterns. It also provides a set of SDKs that you can use to perform batch and streaming processing tasks. It works well for high-volume computation, especially when tasks can be clearly divided into parallel workloads.
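
To see what “clearly divided into parallel workloads” means in practice, here is a tiny word count in plain Python: each chunk of text is counted independently, and the partial results are merged at the end. This is only an illustration of the pattern using the standard library, not the Dataflow SDK, and the function names are made up.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_words(chunk):
    """Count the words in one chunk; chunks do not depend on each other."""
    return Counter(chunk.lower().split())


def parallel_word_count(chunks, workers=4):
    """Count words per chunk in parallel, then merge the partial counts."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(count_words, chunks):
            total.update(partial)
    return total


print(parallel_word_count(["the cloud is big", "the data is bigger"]))
```

Because no chunk needs another chunk’s result, the per-chunk step can be spread over as many workers as you have, which is the property a managed service like Dataflow takes advantage of at much larger scale.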

Machine Learning

The AI Platform offers a variety of machine learning (ML) services. You can use APIs that provide pre-trained models optimized for specific applications, or build and train your own large-scale, sophisticated models using a managed TensorFlow framework. There are a variety of Machine Learning APIs you can use:

- Video Intelligence API: Video analysis technology

- Speech-to-Text: Transcribe speech from audio files or a microphone in several languages.

- Cloud Vision: Integrate optical character recognition (OCR) and tagging of explicit content.

- Cloud Natural Language API: This is for sentiment analysis, entity analysis, entity-sentiment analysis, content classification and syntax analysis.

- Cloud Translation: Translate source text into any of over a hundred supported languages.

- Dialogflow: Build conversational interfaces for websites, mobile apps and messaging platforms.
