Google Anthos

Anthos is a managed hybrid cloud platform that lets you run Kubernetes clusters both in the cloud and on-premises. It is an open platform built for multi-cloud environments, so it also works across other public clouds such as Microsoft Azure and AWS. In other words, as you containerize and modernize your existing applications, Anthos lets you do so on-prem or on any cloud provider. It does not force you onto the Google Cloud Platform in order to modernize.

History of Anthos

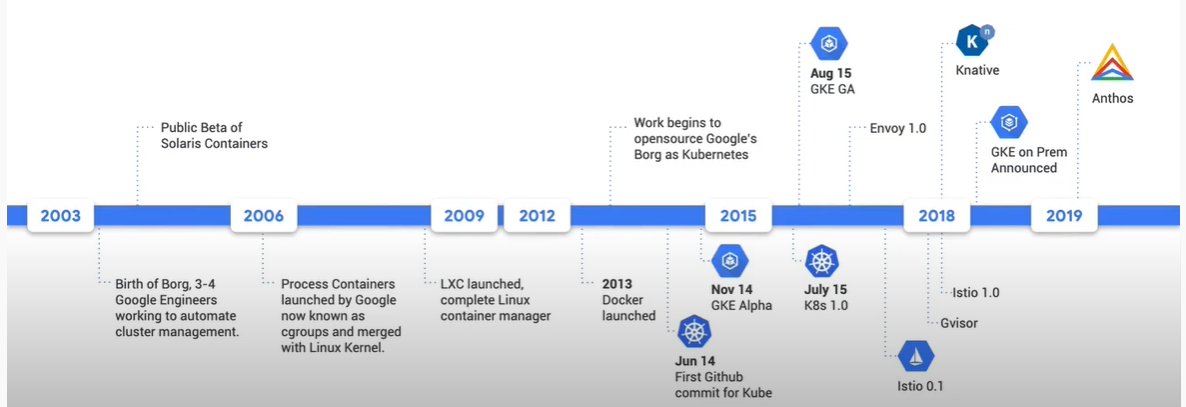

Because Anthos is a fairly broad product suite, it means different things to different people. Before we go any deeper into Anthos, let’s look at how Google got there. Over the last 10 years, a number of fundamental technological shifts have changed how we build our applications and how they run in the cloud. Some of the technologies that helped shape Anthos include:

- Cgroups: 2006, developed by Google engineers as an early building block for containers, first used for Google’s internal software. Cgroups are a Linux kernel feature for limiting and isolating the resources a group of processes can use.

- Docker: 2013, built a lot of tooling around containers on top of kernel features such as cgroups. It’s used for deploying containerized software to one machine. As monolithic software was reorganized into microservices, developers created more and more containers, and it became hard to orchestrate them all.

- Kubernetes: 2014, released by Google, leveraging what it had learned running containers at scale on its internal Borg system. Kubernetes is used for deploying containerized software to multiple machines and is now the standard way of running containers at scale.

- Istio: 2017, helps you manage services in production the same way Google does. It allows you to put practices such as Site Reliability Engineering into effect.

Many of you say the cloud is here to stay, and an even larger share believe that multi-cloud (using multiple cloud vendors) and hybrid (on-prem plus cloud) are key and have multi-cloud plans. Yet most companies’ applications remain on-prem, with only a small fraction of workloads moved to the cloud. Many of you have also made big investments in your on-prem infrastructure and datacenters and want to keep leveraging them as you move incrementally to the cloud. Additionally, not every move to the cloud was successful, and some of you had to roll back to your on-prem environments.

When you modernize your applications by pulling monolithic services apart into microservices while also moving to the cloud, things can get complicated. This is full of risk: you are reengineering your application, familiarizing yourself with new tools and processes, and discovering new cloud workflows, all at the same time.

Introducing Anthos

Anthos was released in 2019 to meet you where you currently stand: it helps you modernize in place, within your on-prem setup in the datacenter, before you move to the cloud. That way, by the time you want to move to the cloud, things are already set; you’ve done the organizational work on-prem. This is why Anthos was built to work in the datacenter as well as in the cloud.

At a high level, Anthos is an application deployment and management tool for on-premises and multi-cloud setups. It accomplishes this while remaining entirely a software solution, with no hardware lock-in. From your standpoint, the infrastructure is abstracted away so you can focus on building your applications, not managing your infrastructure. Because Anthos is built on open technologies, you can typically avoid vendor lock-in as well.

Anthos Tools

Anthos has different types of tools for different types of people within your organization. There are both open-source and hosted versions of many tool types as outlined in the table.

| Type | Open-Source Version | Gold-Class Hosted Version |
| --- | --- | --- |
| Developer | Knative | Cloud Run |
| Service Operator / SRE | Istio | Service Mesh (Istio on GKE) |
| Infrastructure Operator | Kubernetes | Kubernetes Engine (GKE) |

When you work on-premises, the hosted versions of the software are brought to you via VMware vSphere.

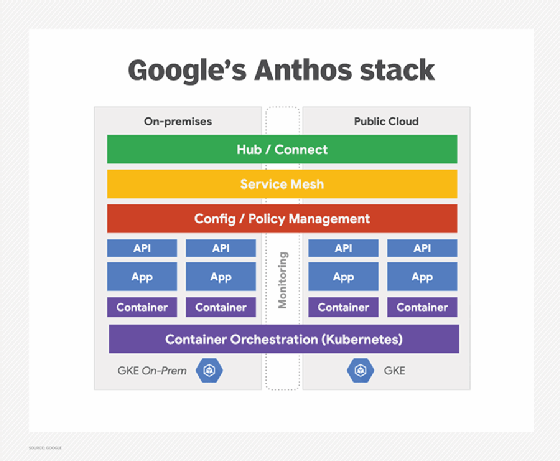

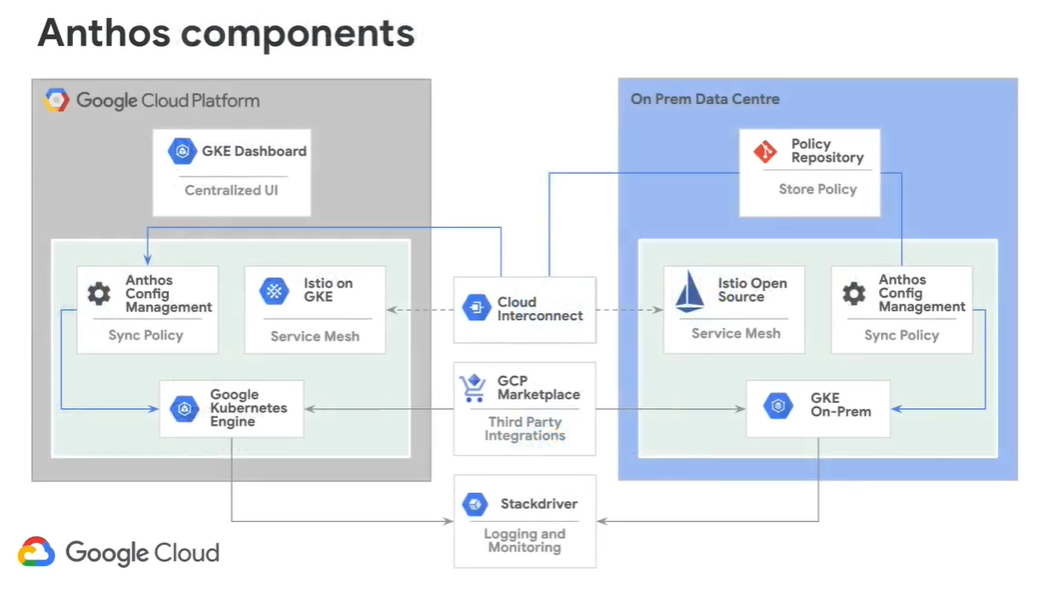

Anthos Components

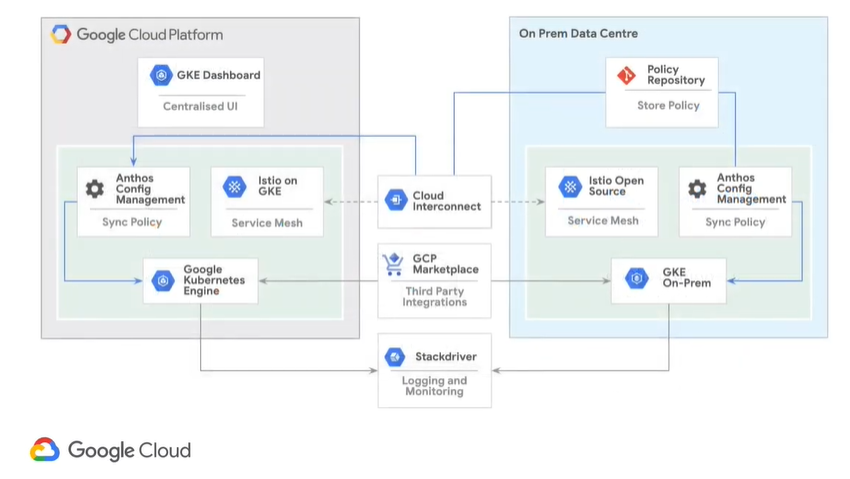

Let’s look at all the Anthos components:

When you first look at diagrams like this, you may be overwhelmed by the complexity of Anthos, but the more we delve into these components, the more familiar you will become. We will break this diagram down piece by piece to understand every component.

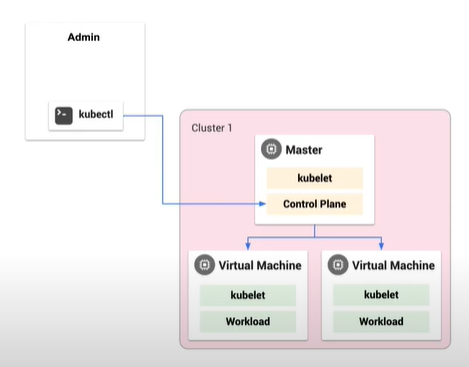

Kubernetes

Let’s spend a moment looking back at Kubernetes. Kubernetes workloads are:

- Container packaged (portable and predictable deployment with resource isolation)

- Dynamically scheduled (higher efficiency with lower operational costs)

- Microservices oriented (loosely coupled services that support independent upgrades)

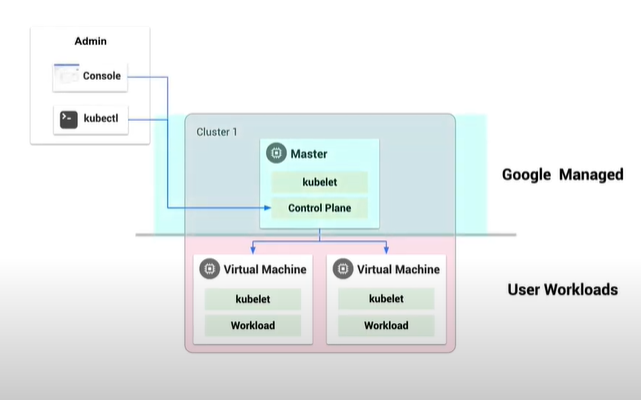

You administer a Kubernetes cluster with the kubectl command. kubectl communicates with the master through a set of APIs and tells it which containers to deploy, how to scale them, and so on.
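To make "telling the master what to deploy" concrete, here is a minimal sketch that builds a Deployment manifest as a plain Python dictionary and prints it as JSON. The service name `pictures` and the image `example.com/pictures:1.0` are made up for illustration; the field layout follows the standard `apps/v1` Deployment object.

```python
import json


def make_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a minimal apps/v1 Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,  # how many copies of the pod to keep running
            "selector": {"matchLabels": labels},  # must match the pod labels below
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical service name and image, for illustration only.
manifest = make_deployment("pictures", "example.com/pictures:1.0", replicas=3)
print(json.dumps(manifest, indent=2))
```

kubectl accepts JSON as well as YAML, so if you save the output to a file such as `deployment.json`, you could submit it with `kubectl apply -f deployment.json`.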

Kubernetes Engine (GKE)

Kubernetes Engine was then developed as a managed Kubernetes platform that runs on GCP. It includes:

- Managed Kubernetes

- Fast cluster creation

- Automatic upgrade and repairing of nodes

- Curated versions of Kubernetes and node OS

This made it much easier to run your Kubernetes clusters on GCP. With self-managed Kubernetes, it could take hours to spin up your infrastructure and get it to a Kubernetes-ready state. With GKE, there is an easy interface in the cloud console, and after a few clicks you have a running Kubernetes cluster.

With GKE, you can still use kubectl to communicate with the master, but you are also given the cloud console to interface with the master from the cloud. The console uses the same set of Kubernetes APIs to communicate with the master.

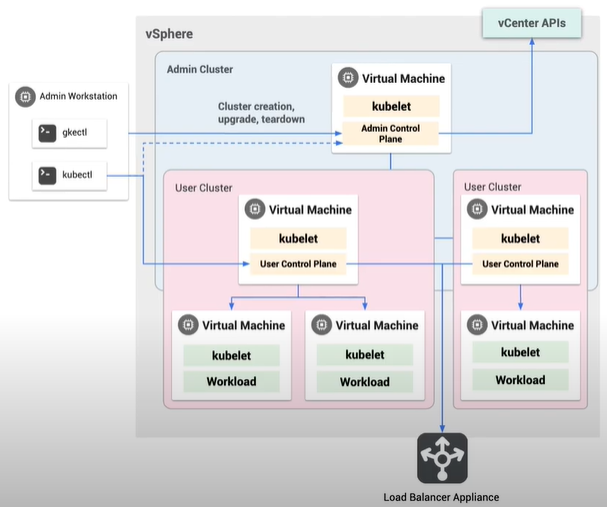

GKE On-Prem

Anthos introduces GKE On-Prem, which runs as an automated deployment on top of vSphere within your environment. This lets you run not just Kubernetes on your infrastructure but GKE in its entirety, giving you all the GKE benefits on-prem. It offers:

- Benefits of GKE, plus

- Automated deployment on vSphere

- Easy upgrade to latest Kubernetes release

- Integration with cloud hosted container ecosystem

GKE On-Prem introduces an Admin Workstation, from which you can use kubectl to talk to a new type of cluster called the Admin Cluster. The Admin Cluster is responsible for creating your clusters for you, in your environment. You can also continue to use kubectl to interface directly with your clusters, as you did with Kubernetes and GKE.

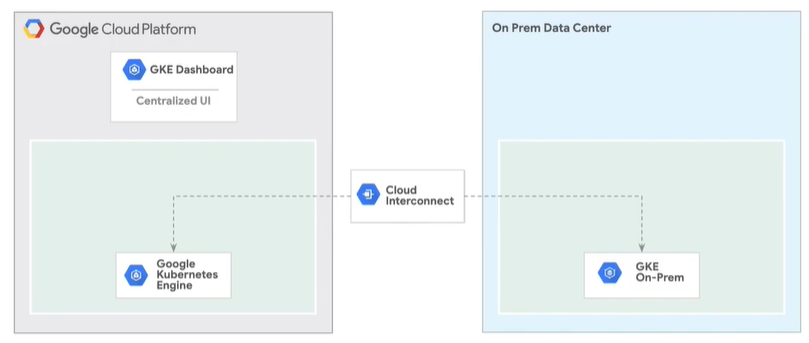

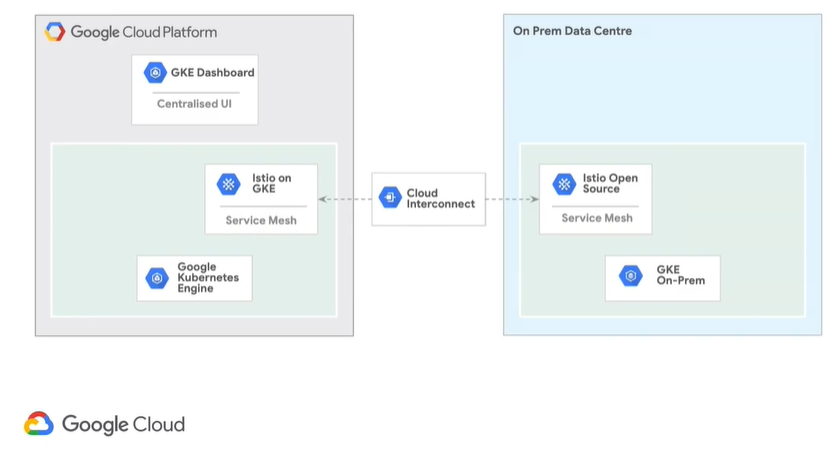

GKE Hub (within the GKE Dashboard)

Even easier than continuing to use kubectl, you can manage your on-prem GKE and other Kubernetes environments from the cloud console via the GKE Hub. GKE On-Prem clusters are automatically registered upon creation, and the Hub gives you centralized management of hybrid and multi-cloud infrastructure and workloads. You get a single view of all clusters across your estate.

Now, if you refer back to the Anthos Components diagram above, we can use what we know so far about Google Kubernetes Engine (GKE), GKE On-Prem, and the GKE Dashboard to start building out the diagram from the ground up. Cloud Interconnect is added simply to allow the cloud and on-prem sides to communicate.

Service Mesh

Let’s continue to add to our diagram by discussing the Service Mesh. A service mesh provides a transparent, language-independent way to flexibly and easily automate application network functions. Another way to look at a service mesh is to think of it as a network designed for services, not for bits of data. A conventional layer-3 network does not know which applications it carries and does not make network decisions based on your application settings; a service mesh does.

The Istio Service Mesh is an open framework for connecting, securing, managing, and monitoring services and the interactions between them. The mesh deploys a proxy next to each service, which allows you to make smart decisions about routing traffic and to enforce security and encryption policies. This gives you:

- Uniform observability

- Operational agility

- Policy-driven security

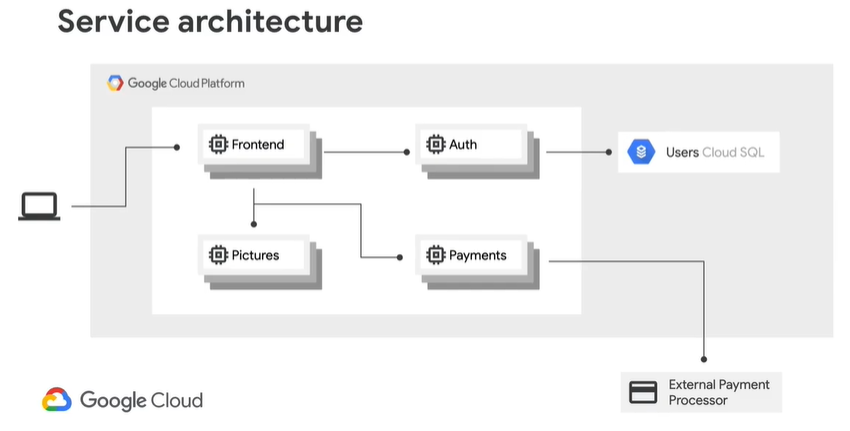

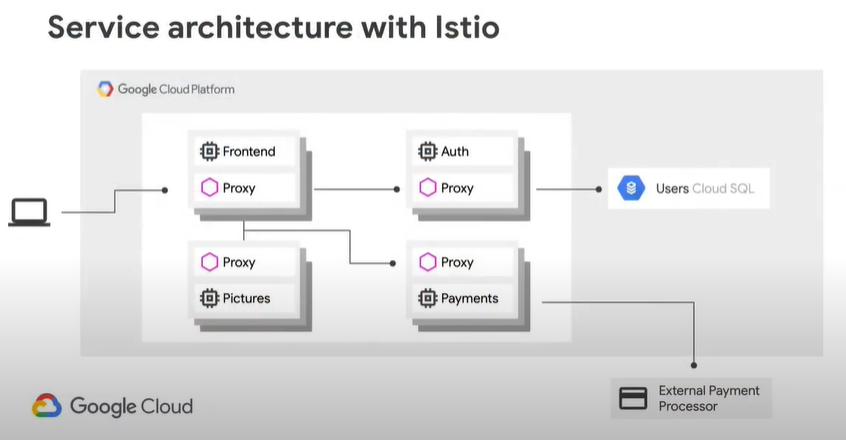

First, let’s look at a typical service architecture without a Service Mesh:

The problem with this configuration is that there is no way to enforce security policies between services. For example, the Pictures service is not designed to talk to the Auth service, yet nothing prevents it from doing so, and we want to rule that out.

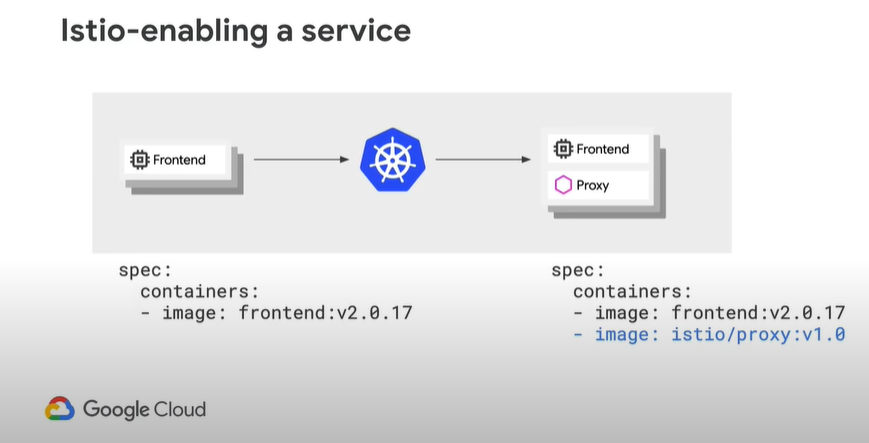

When we deploy the Istio Service Mesh, each service gets a proxy placed in front of it. To its callers the result looks like the original service, but it is now an Istio-enabled service in which all communication with the service must go through the Istio proxy first:

With this deployment, no code change was required: Kubernetes still deploys the service, and the proxy simply makes smart decisions about how traffic is routed.

The end result, once the Istio Service Mesh is enabled for the service architecture, looks like this. All services must now communicate through their proxies:

You can also use Istio for Traffic Control, Observability, Security, Fault-Injection and Hybrid Cloud.
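The Pictures and Auth example above can be modeled in a few lines. This is a toy sketch of the idea only, not Istio's API or configuration format: the `SidecarProxy` class and the `frontend` caller are hypothetical names. It shows the policy check living in the proxy, so the service's own code stays unchanged.

```python
from dataclasses import dataclass, field


@dataclass
class SidecarProxy:
    """Toy stand-in for the proxy deployed next to one service."""

    service: str
    allowed_callers: set = field(default_factory=set)

    def handle(self, caller: str, request: str) -> str:
        # The policy check happens in the proxy, not in the service's code.
        if caller not in self.allowed_callers:
            return f"403 {caller} -> {self.service} denied"
        return f"200 {self.service} handled {request!r}"


# Only the (hypothetical) frontend service may call Auth.
auth_proxy = SidecarProxy("auth", allowed_callers={"frontend"})

print(auth_proxy.handle("frontend", "login"))
print(auth_proxy.handle("pictures", "login"))
```

The second call is rejected at the proxy, which is the behavior we wanted for the Pictures service.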

Just as Kubernetes has been turned into a managed service in the cloud with GKE, Istio has also been released as Istio on GKE (Beta). Istio and Kubernetes are released independently, so their versions are independent. By using Istio on GKE, you trust Google to certify version compatibility between Istio and GKE: when there is a new version of Kubernetes, Google releases a matching version of Istio that has been tested and certified to work with it. Istio on GKE also comes with some additional adapters for plugging into other GCP products. Some of these adapters are:

- Apigee Adapter

- Stackdriver (the destination to which the Istio Mixer component sends telemetry data); this gives you observability into Istio.

To continue our diagram, we now can include the Service Mesh components:

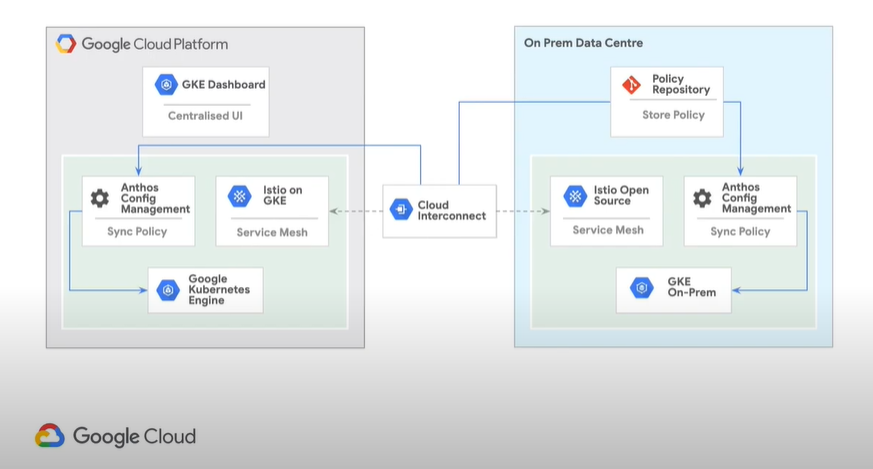

Anthos Config Management

Anthos Config Management was designed to make it easy to apply your policies across this heterogeneous mix of multi-cloud and on-prem environments. Config Management lets you enforce guardrails for central IT governance. You can manage the configuration of the tools in all of your clusters in one place. You don’t need one repository for on-prem and a separate one for each of your cloud providers. It becomes a single, auditable source of truth. It gives you:

- Sync configs across clusters on-prem and in the cloud

- Continuous enforcement of policies

- Security and auditability through policy-as-code.

Because Config Management treats policy as code, you get:

- Git repository as source of truth where your policy gets checked into

- YAML applied to every cluster

- Integration with your SCM

- Pre-commit validation
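Pre-commit validation is the easiest of these to picture in code. The sketch below is a generic check you might run from a Git hook; it is not Anthos Config Management's own tooling, and the required labels (`owner`, `environment`) are a hypothetical organizational policy. Manifests are given as JSON here to keep the example dependency-free.

```python
import json

REQUIRED_LABELS = {"owner", "environment"}  # hypothetical org policy


def validate(manifest: dict) -> list:
    """Return a list of policy violations for one manifest (empty if OK)."""
    errors = []
    for key in ("apiVersion", "kind", "metadata"):
        if key not in manifest:
            errors.append(f"missing top-level field: {key}")
    metadata = manifest.get("metadata", {})
    name = metadata.get("name", "<unnamed>")
    missing = REQUIRED_LABELS - set(metadata.get("labels", {}))
    for label in sorted(missing):
        errors.append(f"{name}: missing required label '{label}'")
    return errors


good = json.loads("""
{"apiVersion": "v1", "kind": "Namespace",
 "metadata": {"name": "shop", "labels": {"owner": "team-a", "environment": "prod"}}}
""")
bad = json.loads("""
{"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": "scratch"}}
""")

for manifest in (good, bad):
    problems = validate(manifest)
    print(manifest["metadata"]["name"], "OK" if not problems else problems)
```

A hook like this would reject the commit when `validate` returns any violations, so a non-compliant config never reaches the repository that every cluster syncs from.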

Updating our diagram, we can now include Anthos Config Management:

Knative

Knative is Google’s effort to bring serverless workloads to Kubernetes. It is an open-source serverless framework that builds and packages code for serverless deployment and then deploys the package to the cloud. For some context, serverless computing is a cloud computing model in which the provider allocates machine resources on demand and takes care of the servers on your behalf. Serverless computing does not hold resources in volatile memory; rather, computing is done in short bursts, with the results persisted to storage. When an app is not in use, no computing resources are allocated to it.
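The "no resources when idle" idea can be sketched as a simple calculation. This is a toy model under an assumed rule (enough instances that each one handles at most a target number of in-flight requests), not the actual autoscaling algorithm of Knative or Cloud Run; `target_per_instance` is a hypothetical parameter.

```python
import math


def desired_instances(in_flight_requests: int, target_per_instance: int = 10) -> int:
    """Instances needed so each handles at most target_per_instance requests."""
    if in_flight_requests <= 0:
        return 0  # idle app: nothing is allocated
    return math.ceil(in_flight_requests / target_per_instance)


for load in (0, 1, 25, 100):
    print(load, "requests ->", desired_instances(load), "instances")
```

With no traffic the function returns zero instances, and a single request is enough to bring one instance back.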

Knative provides:

- Building-blocks for serverless workloads on Kubernetes

- Backing from Google, Pivotal, IBM, Red Hat and SAP

Cloud Run

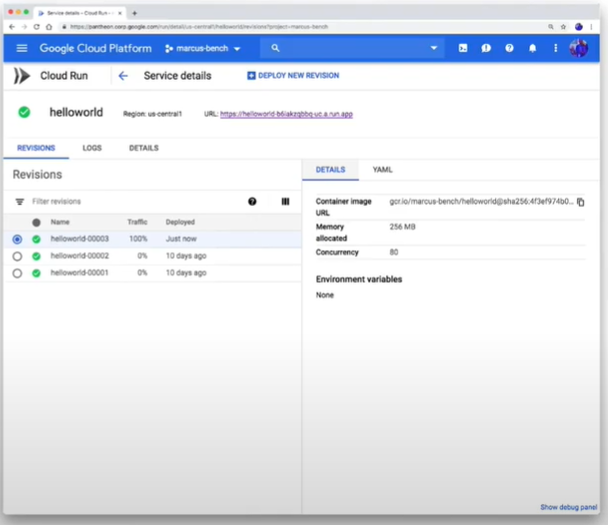

Remember that Knative is the open-source implementation of the serverless framework. Google has taken Knative and provides a managed version of it called Cloud Run. Cloud Run is a fully managed serverless product, compatible with Knative. It provides:

- Stateless containers invoked via HTTP(S) requests

- Built-in domain handling

- Scales to zero – or as high as you need

Cloud Run is fantastic for application developers: if you have any stateless component in your application, you can package it up as a container and give the container image to Cloud Run. Cloud Run deploys it for you, gives you a secure URL that you can map to your domain, and even scales it for you. You don’t need to think about any of the rest. You just focus on your code. The contract with the Cloud Run container is simple:

- It is stateless

- The port it must listen on is given to you as an environment variable
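The contract above can be sketched with nothing but the Python standard library. Cloud Run passes the port in the `PORT` environment variable; the fallback of 8080 for local runs and the names `get_port`, `Handler` and `serve` are choices made for this sketch, not part of the platform.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def get_port(environ=os.environ) -> int:
    """Read the listening port from the PORT environment variable."""
    return int(environ.get("PORT", "8080"))  # 8080 is a fallback for local runs


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Stateless: the response depends only on the incoming request.
        body = f"Hello from {self.path}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve() -> None:
    """Listen on all interfaces on the port the platform assigned."""
    HTTPServer(("0.0.0.0", get_port()), Handler).serve_forever()
```

Calling `serve()` from the container's entrypoint starts the server. Because nothing is kept in memory between requests, any number of copies of this container can run side by side or be shut down when idle.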

Cloud Run can make the application modernization journey much easier, because at the end of the day this is just a container. That means you can write your code in any language you want, use whatever tools and dependencies you desire, put them into a container, and let Cloud Run handle the rest. So, for legacy applications that do not run on the latest versions of Java, Python, COBOL or whatever else, Cloud Run supports all of it: from its point of view, your application is just a binary inside a container. It lets you bring your applications along on the cloud journey while still working within your comfort zone, using the skill set you are most comfortable with.

Further, Cloud Run for Anthos integrates into the Anthos ecosystem. As mentioned, if you give Cloud Run a container image, it is scaled automatically. You may want more control than that and prefer scaling not to be fully automated. Cloud Run for Anthos allows you to deploy your containers on your own clusters, giving you the freedom to run Cloud Run anywhere, such as your on-prem environment. Let’s again review the iterations we’ve discussed:

- Cloud Run – Fully managed and allows you to run the instance without concern for your cluster

- Cloud Run for Anthos – Deploy into your GKE cluster, running serverless side-by-side with your existing workloads.

- Knative – Using the same APIs and tooling, you can run this anywhere you run Kubernetes, allowing you to stay vendor neutral.
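Because all three options share the Knative API, the same service definition can in principle be used with each of them. Below is a minimal sketch that builds a Knative `Service` manifest as a Python dictionary, with scale bounds for the case where you want more control over scaling. The service name and image are made up, and the annotation keys follow recent Knative Serving documentation; older releases may spell them differently, so check the version you run.

```python
import json


def make_knative_service(name: str, image: str, min_scale: int, max_scale: int) -> dict:
    """Build a minimal serving.knative.dev/v1 Service manifest as a dict."""
    return {
        "apiVersion": "serving.knative.dev/v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "template": {
                "metadata": {
                    # Annotation values must be strings, not integers.
                    "annotations": {
                        "autoscaling.knative.dev/min-scale": str(min_scale),
                        "autoscaling.knative.dev/max-scale": str(max_scale),
                    }
                },
                "spec": {"containers": [{"image": image}]},
            }
        },
    }


# Hypothetical name and image; min_scale=0 keeps scale-to-zero enabled.
service = make_knative_service("pictures", "example.com/pictures:1.0", 0, 5)
print(json.dumps(service, indent=2))
```

As with other Kubernetes objects, the JSON output could be applied to a cluster that has Knative installed with `kubectl apply -f`.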

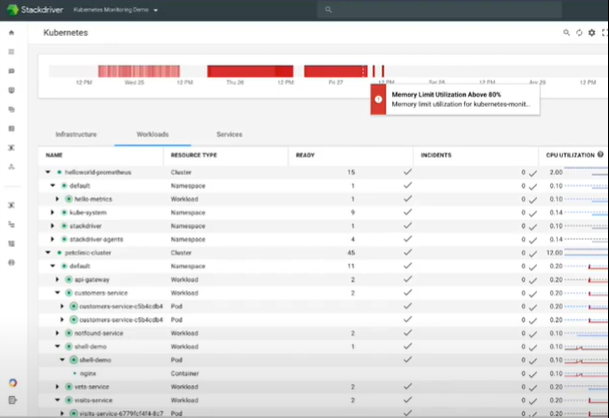

Stackdriver

As discussed earlier when we reviewed the Istio adapters, Stackdriver is a tool for visibility. When you enable Istio on GKE, the logs and telemetry from your Kubernetes cluster are sent to Stackdriver. This one tool gives you logs across your entire estate. It provides:

- Logging

- Monitoring

- Application Performance Management

- Application Debugging (Link Stackdriver to source code)

- Incident Management
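On the application side, the logging part can be as simple as writing one JSON object per line to standard output. The sketch below assumes the cluster's logging agent collects container stdout and parses JSON lines, which is the usual setup on GKE; the helper name `log` and the extra `service` field are hypothetical.

```python
import json
import sys
from datetime import datetime, timezone


def log(severity: str, message: str, **fields) -> str:
    """Write one structured log entry to stdout and return the JSON line."""
    entry = {
        "severity": severity,
        "message": message,
        "time": datetime.now(timezone.utc).isoformat(),
        **fields,  # any extra context, e.g. which service emitted the entry
    }
    line = json.dumps(entry)
    print(line, file=sys.stdout, flush=True)
    return line


log("INFO", "picture uploaded", service="pictures")
log("ERROR", "auth check failed", service="auth")
```

Keeping the severity and context as separate fields, rather than baking them into a free-text message, is what makes it practical to filter logs from many clusters in one place.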

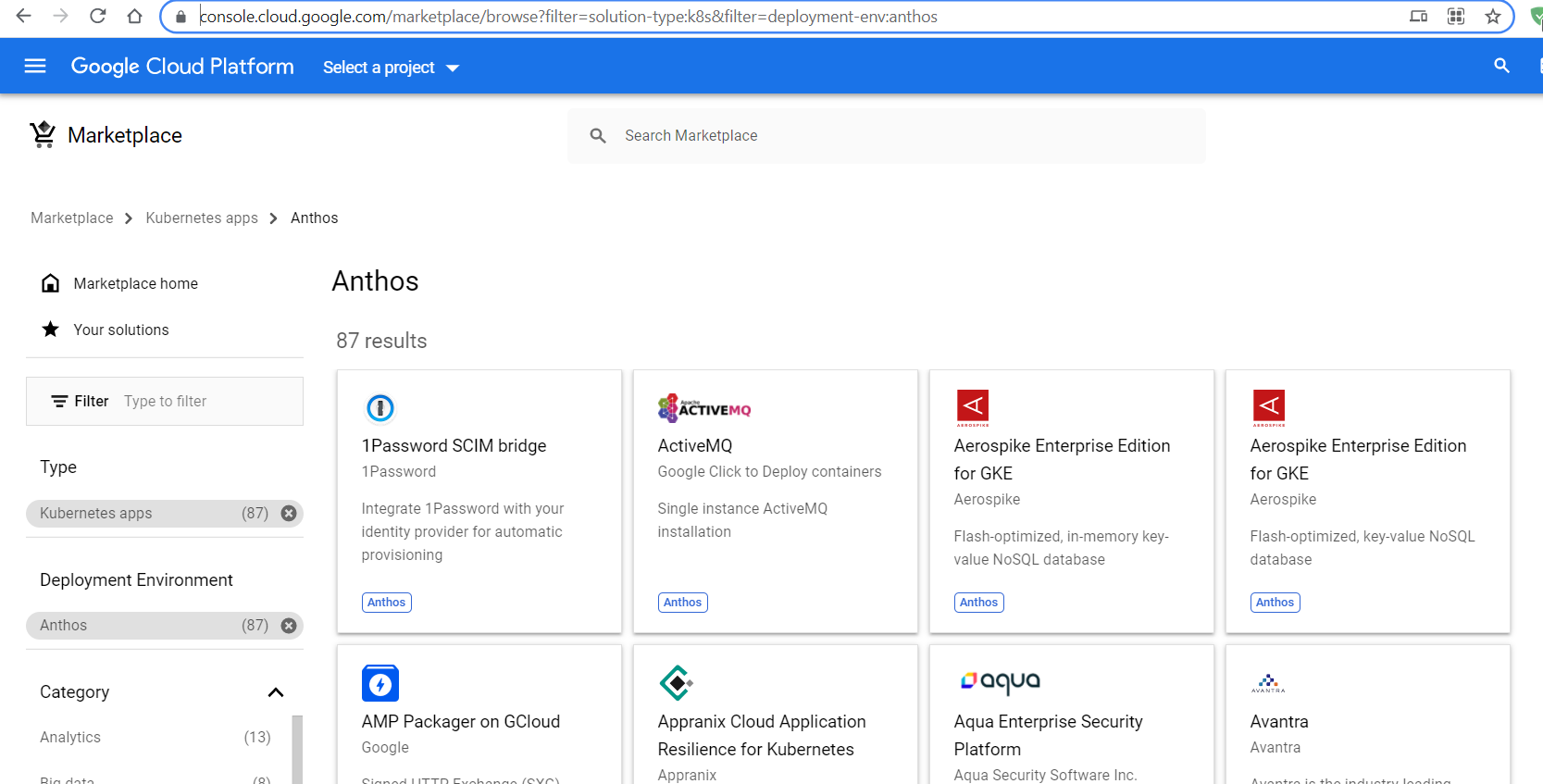

Marketplace

There are times when you don’t need to build everything yourself. You may have a specific job to do, and something like a standard Redis database would be perfect. The GCP Marketplace is a collaboration between Google and third-party vendors to certify software to run on GCP. One of the places you can run that software is your Kubernetes cluster. And with Anthos, those clusters can be on-prem or in the cloud.

https://console.cloud.google.com/marketplace

Now, finally, we can complete our diagram as we saw it from the beginning, with all of our components for Anthos:

We’ve added Marketplace and Stackdriver in the middle. With that, we should be able to understand the whole picture. We have:

- Google Kubernetes Engine and GKE On-Prem for container orchestration

- Istio for security policies and traffic routing across the services in our estate

- Anthos Config Management to make sure we can have a centralized place for governance and application policies and settings, keeping them consistent between on-prem and GCP.

- Marketplace and Stackdriver to help us have a much better application experience

Migrate for Anthos

Up until now, everything we have talked about in Anthos has centered on applications running as containers. Many of you still deploy your applications on virtual machines, and if you have been looking to migrate to containers, the path is not necessarily straightforward. Migrate for Anthos literally takes your virtual machines and converts them into containers. This makes your container image much smaller than the virtual machine image, as you no longer carry the bulk of the operating system. Migrate also relieves you of the operating system security burden: once the virtual machine is containerized, OS security is handled on Google’s hosted infrastructure. Its automated approach extracts the critical application elements from the virtual machine so that they can be inserted into containers in Google Kubernetes Engine or Anthos clusters, without the VM layers (like the guest OS) that become unnecessary with containers.

Use the discovery tool to determine which applications might be a good fit for migration, as not all applications can be migrated this way.
