Delving Deeper into the Mathematical Foundations of Machine Learning

Having explored the surface of machine learning (ML) and its implications for technology and society, it is time to dig into the bedrock of ML: its mathematical foundations. Understanding these foundations not only demystifies how large language models and other algorithms work but also illuminates the path for future advances in artificial intelligence (AI).

The Core of Machine Learning: Mathematical Underpinnings

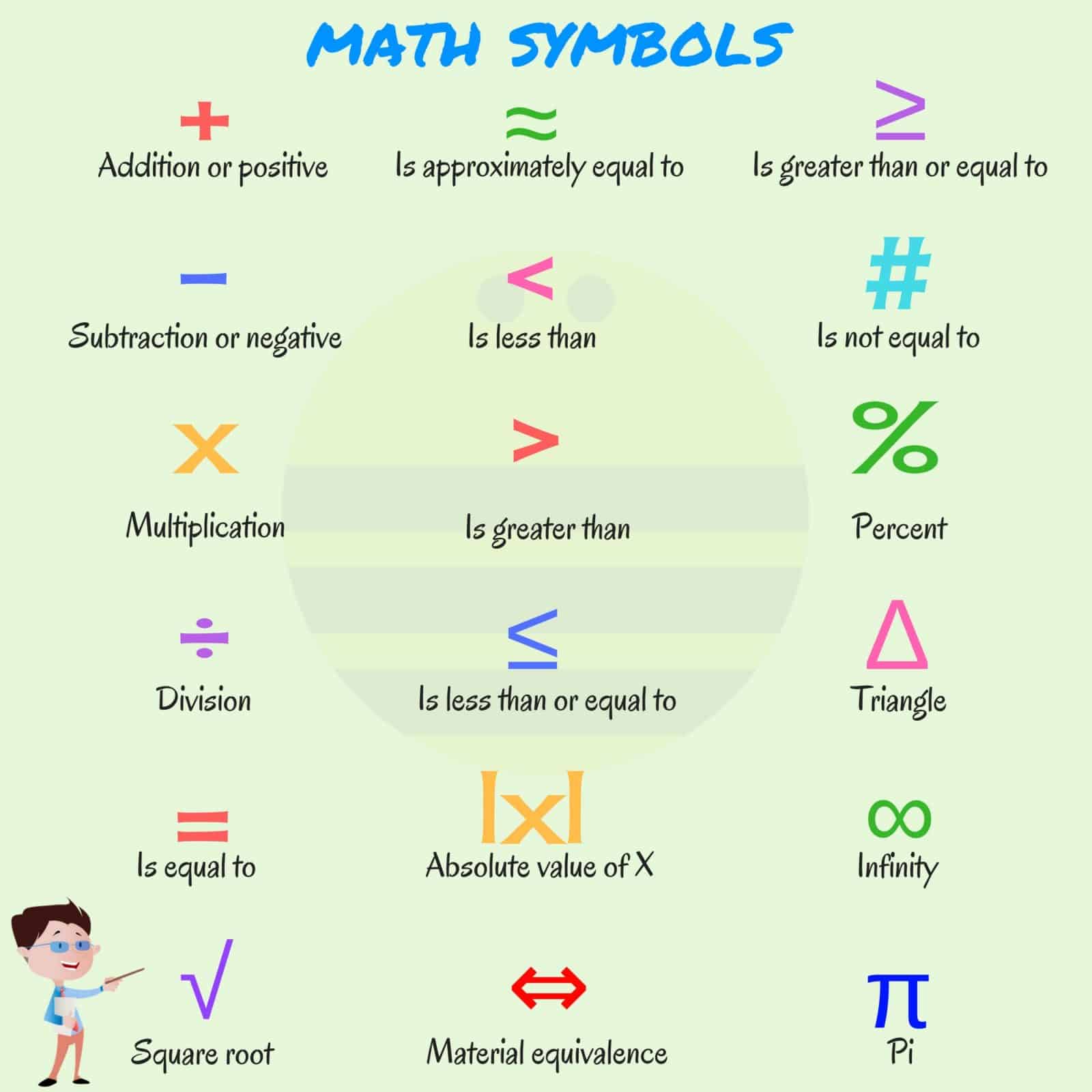

At the heart of machine learning lie various mathematical concepts that work in harmony to enable machines to learn from data. These include, but are not limited to, linear algebra, probability theory, calculus, and statistics. Let’s dissect these components to understand their relevance in machine learning.

Linear Algebra: The Structure of Data

Linear algebra provides the vocabulary and framework for working with data. Vectors and matrices, its core objects, are the fundamental data structures in ML: they represent data sets and make operations on them efficient. Training neural networks, a cornerstone of deep learning (a subset of ML), relies heavily on linear algebra, since forward and backward propagation are built from matrix multiplications.
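To make the role of matrices concrete, here is a minimal sketch of the forward pass of a single dense layer, written in plain Python so it runs without extra libraries. The weights `W`, bias `b`, input `x`, and the choice of a ReLU activation are illustrative assumptions, not values taken from any particular model.

```python
def matvec(W, x):
    """Multiply a matrix W (given as a list of rows) by a vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def dense_forward(W, b, x):
    """Forward pass of one dense layer: ReLU(W x + b)."""
    z = [z_i + b_i for z_i, b_i in zip(matvec(W, x), b)]
    return [max(0.0, z_i) for z_i in z]


# Hypothetical layer mapping a 2-dimensional input to 3 hidden units.
W = [[1.0, -2.0],
     [0.5, 0.5],
     [-1.0, 1.0]]
b = [0.0, 1.0, -0.5]
x = [2.0, 1.0]

h = dense_forward(W, b, x)
print(h)
```

In practice a library such as NumPy or a deep learning framework would perform the same matrix-vector product far more efficiently, but the arithmetic is the same.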

Calculus: The Optimization Engine

Calculus, specifically differential calculus, plays a critical role in optimizing ML models. Gradient descent, which is pivotal in training deep learning models, uses derivatives to minimize a loss function, a measure of how far the model’s predictions fall from the desired outputs.
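As a small illustration of gradient descent, the sketch below fits a single weight `w` in the model `y = w * x` by repeatedly stepping against the derivative of a mean squared error loss. The data, learning rate, and step count are assumptions chosen only to keep the example short.

```python
def mse_loss(w, xs, ys):
    """Mean squared error of the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    """Derivative of the mean squared error with respect to w."""
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, w0=0.0, lr=0.1, steps=50):
    """Move w against the gradient for a fixed number of steps."""
    w = w0
    for _ in range(steps):
        w -= lr * mse_grad(w, xs, ys)
    return w


# Toy data generated by y = 2x, so the loss is minimized at w = 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_fit = gradient_descent(xs, ys)
print(w_fit, mse_loss(w_fit, xs, ys))
```

Deep learning applies the same idea to millions of parameters at once, with the derivatives obtained through backpropagation rather than written out by hand.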

Probability Theory and Statistics: The Reasoning Framework

ML models often make predictions or decisions based on uncertain data. Probability theory and statistics provide the framework for modeling and reasoning under uncertainty. These concepts are heavily used in Bayesian learning, anomaly detection, and reinforcement learning, helping models make informed decisions by quantifying uncertainty.
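One way to see what quantifying uncertainty means is Bayes' rule applied to a toy anomaly detector. The sketch below assumes a hypothetical detector and made-up rates (1% of events are anomalous, the detector fires on 95% of anomalies and on 5% of normal events); none of these numbers come from a real system.

```python
def posterior(prior, p_alert_given_anomaly, p_alert_given_normal):
    """P(anomaly | alert) computed with Bayes' rule."""
    evidence = (p_alert_given_anomaly * prior
                + p_alert_given_normal * (1.0 - prior))
    return p_alert_given_anomaly * prior / evidence


# Hypothetical rates, chosen for illustration only.
p = posterior(prior=0.01,
              p_alert_given_anomaly=0.95,
              p_alert_given_normal=0.05)
print(round(p, 3))
```

Under these assumed rates, an alert corresponds to a true anomaly only about 16% of the time, because anomalies are rare to begin with. Making that kind of reasoning explicit is exactly what the probabilistic framework is for.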

Unveiling Large Language Models Through Mathematical Lenses

Our recent discussions have highlighted the significance of Large Language Models (LLMs) in pushing the boundaries of AI and ML. The mathematical foundations not only power these models but also shape their evolution and capabilities. Understanding the mathematics behind LLMs lets us peel back the layers and see how these models process and generate human-like text.

For instance, the transformer architecture at the core of many LLMs uses attention mechanisms to weigh the relevance of different parts of the input. Mathematically, attention computes similarity scores between query and key vectors and passes them through a softmax, producing weights that form a probability distribution over the input positions. This illustrates the deep interconnection between ML and mathematics.
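The attention idea can be sketched in a few lines. The example below implements scaled dot-product attention, the form commonly used in transformers, in plain Python for a single head with no masking or learned projections. The tiny `Q`, `K`, and `V` matrices are hypothetical inputs chosen so the result is easy to inspect.

```python
import math


def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    weights = []
    for q in Q:
        scores = [sum(q_i * k_i for q_i, k_i in zip(q, k)) / math.sqrt(d_k)
                  for k in K]
        weights.append(softmax(scores))
    # Each output row is a weighted average of the value vectors.
    output = [[sum(w * v[j] for w, v in zip(row, V))
               for j in range(len(V[0]))]
              for row in weights]
    return output, weights


# Hypothetical inputs: one query that matches the first key more closely.
Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]

output, weights = attention(Q, K, V)
print(weights)
print(output)
```

Because the query aligns with the first key, the first value vector receives the larger weight, so the output leans toward it.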

Future Directions: The Mathematical Frontier

The rapid advancement in ML and AI points towards an exciting future where the boundaries of what machines can learn and do are continually expanding. However, this future also demands a deeper, more nuanced understanding of the mathematical principles underlying ML models.

Emerging areas such as quantum machine learning and the exploration of new neural network architectures underscore the ongoing evolution of the mathematical foundations of ML. These advancements promise to solve more complex problems, but they also require us to expand our mathematical toolkit.

Incorporating Mathematical Rigor in ML Education and Practice

For aspiring ML practitioners and researchers, grounding themselves in the mathematical foundations is pivotal. This not only enhances their understanding of how ML algorithms work but also equips them with the knowledge to innovate and push the field forward.

As we venture further into the detailed study of ML’s mathematical underpinnings, it becomes clear that these principles are not just academic exercises but practical tools that shape the development of AI technologies. Therefore, a solid grasp of these mathematical concepts is indispensable for anyone looking to contribute meaningfully to the future of ML and AI.

As we continue to explore the depths of large language models and the broader field of machine learning, let us not lose sight of the profound mathematical foundations that underpin this revolutionary technology. It is on these foundations that the future of AI and ML will be built, and it is through a deep understanding of these principles that we will continue to advance the frontier of what’s possible.
