Machine Learning’s Evolutionary Leap with QueryPanda: A Game-Changer for Data Science

In today’s rapidly advancing technological landscape, the role of Machine Learning (ML) in shaping industries and enhancing operational efficiency cannot be overstated. Having been on the forefront of this revolution through my work at DBGM Consulting, Inc., my journey from conducting workshops and developing ML models has provided me with first-hand insights into the transformative power of AI and ML. Reflecting on recent developments, one particularly groundbreaking advancement stands out – QueryPanda. This tool not only symbolizes an evolutionary leap within the realm of Machine Learning but also significantly streamlines the data handling process, rendering it a game-changer for data science workflows.

The Shift Towards Streamlined Data Handling

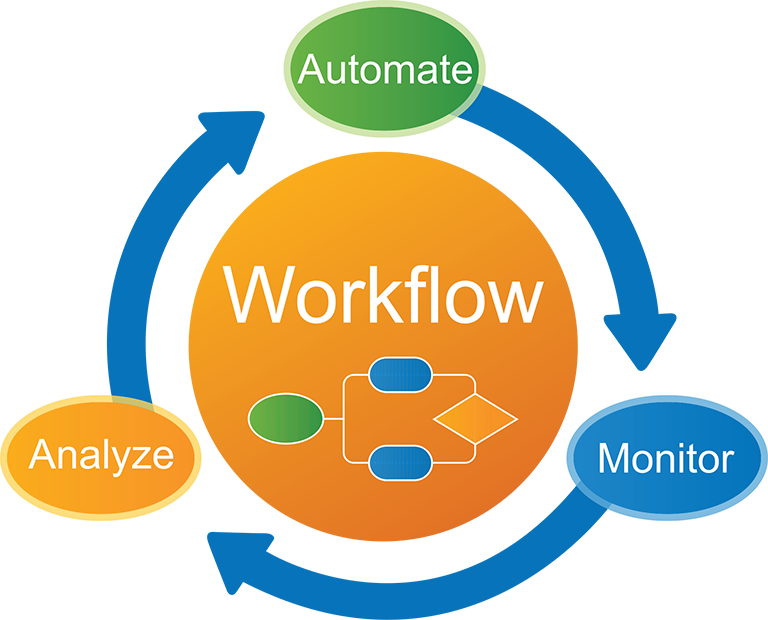

Machine Learning projects are renowned for their data-intensive nature. The need for efficient data handling processes is paramount, as the foundational steps of cleaning, organizing, and managing data directly correlate with the outcome of ML algorithms. Here, QueryPanda emerges as an innovative solution, designed to simplify the complexities traditionally associated with data preparation.

- Ease of Use: QueryPanda’s user-friendly interface allows both novices and seasoned data scientists to navigate data handling tasks with relative ease.

- Efficiency: By automating repetitive tasks, it significantly reduces the time spent on data preparation, enabling a focus on more strategic aspects of ML projects.

- Flexibility: Supports various data formats and sources, facilitating seamless integration into existing data science pipelines.

Integrating QueryPanda into Machine Learning Paradigms

An exploration of ML paradigms reveals a diverse landscape, ranging from supervised learning to deep learning techniques. Each of these paradigms has specific requirements in terms of data handling and preprocessing. QueryPanda’s adaptability makes it a valuable asset across these varying paradigms, offering tailored functionalities that enhance the efficiency and effectiveness of ML models. This adaptability not only streamlines operations but also fosters innovation by allowing data scientists to experiment with novel ML approaches without being hindered by data management challenges.

Reflecting on the broader implications of QueryPanda within the context of previously discussed ML topics, such as the impact of AI on traditional industries (David Maiolo, April 6, 2024), it’s evident that such advancements are not just facilitating easier data management. They are also enabling sustainable, more efficient practices that align with long-term industry transformation goals.

The Future of Machine Learning and Data Science

The introduction of tools like QueryPanda heralds a new era for Machine Learning and data science. As we continue to break barriers and push the limits of what’s possible with AI, the emphasis on user-friendly, efficient data handling solutions will only grow. For businesses and researchers alike, this means faster project completion times, higher-quality ML models, and ultimately, more innovative solutions to complex problems.

Video: [1,Machine Learning project workflow enhancements with QueryPanda]

In conclusion, as someone who has witnessed the evolution of Machine Learning from both academic and practical perspectives, I firmly believe that tools like QueryPanda are indispensable. By democratizing access to efficient data handling, we are not just improving ML workflows but are also setting the stage for the next wave of technological and industrial innovation.

Adopting such tools within our projects at DBGM Consulting, we’re committed to leveraging the latest advancements to drive value for our clients, reinforcing the transformative potential of AI and ML across various sectors.

Exploring how QueryPanda and similar innovations continue to shape the landscape will undoubtedly be an exciting journey, one that I look forward to navigating alongside my peers and clients.

Focus Keyphrase: Machine Learning Data Handling