Revolutionizing Agricultural Carbon Cycle Quantification with AI

Artificial intelligence (AI) is becoming a practical tool for monitoring agricultural emissions, an important part of the fight against climate change. Researchers at the University of Minnesota Twin Cities and the University of Illinois Urbana-Champaign have published a study in Nature Communications showing that Knowledge-Guided Machine Learning (KGML) can accurately predict carbon cycles within agroecosystems.

Understanding the KGML-ag-Carbon Framework

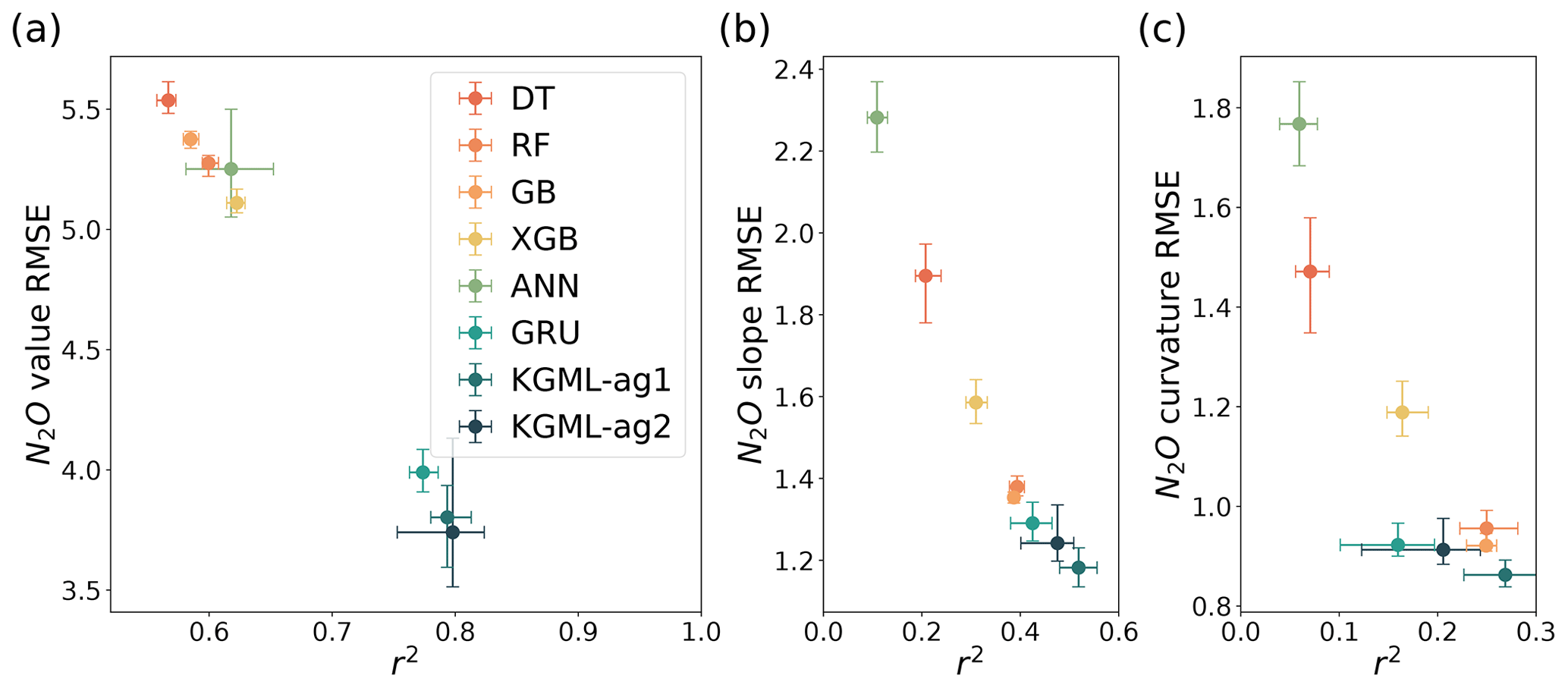

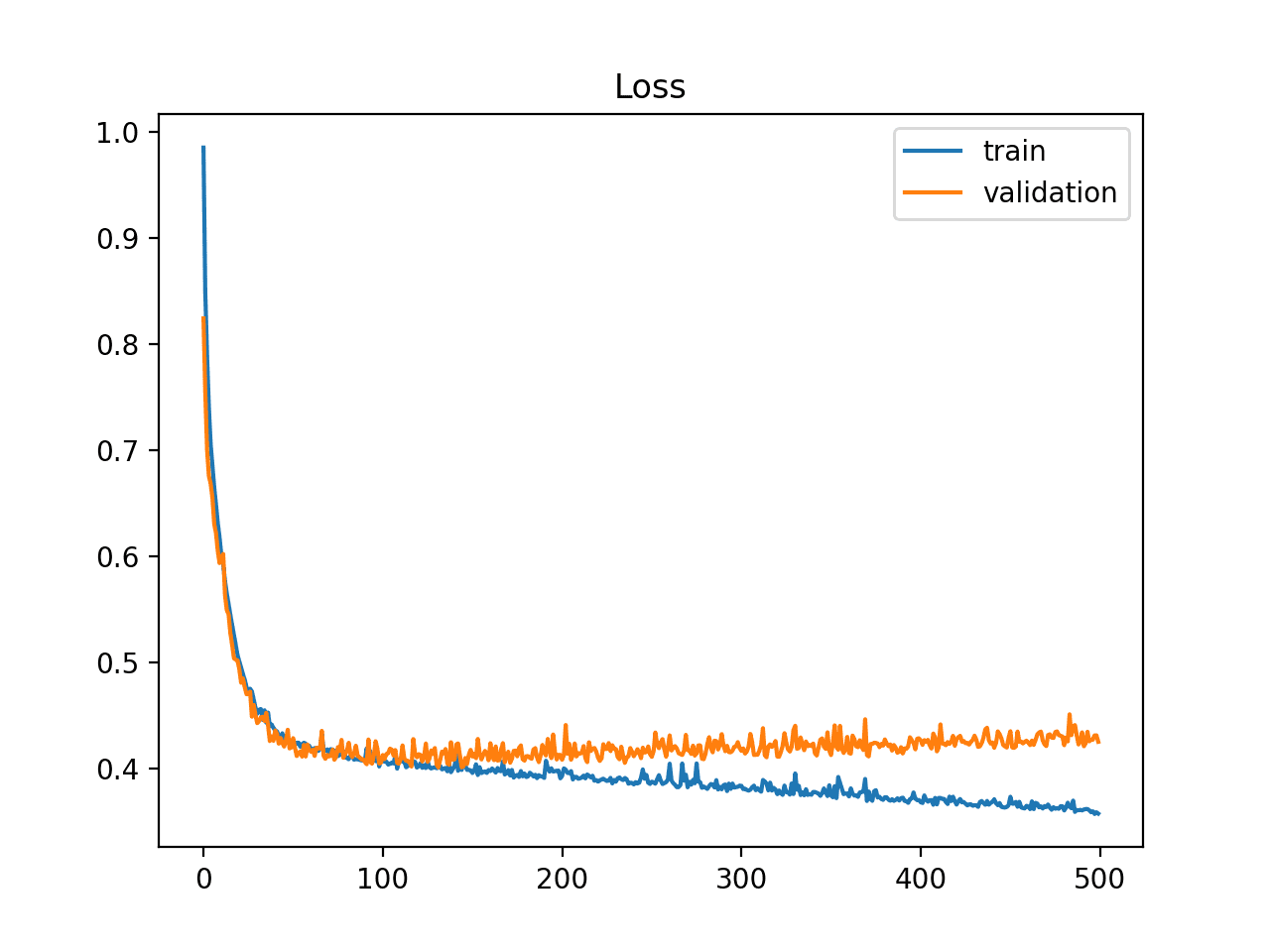

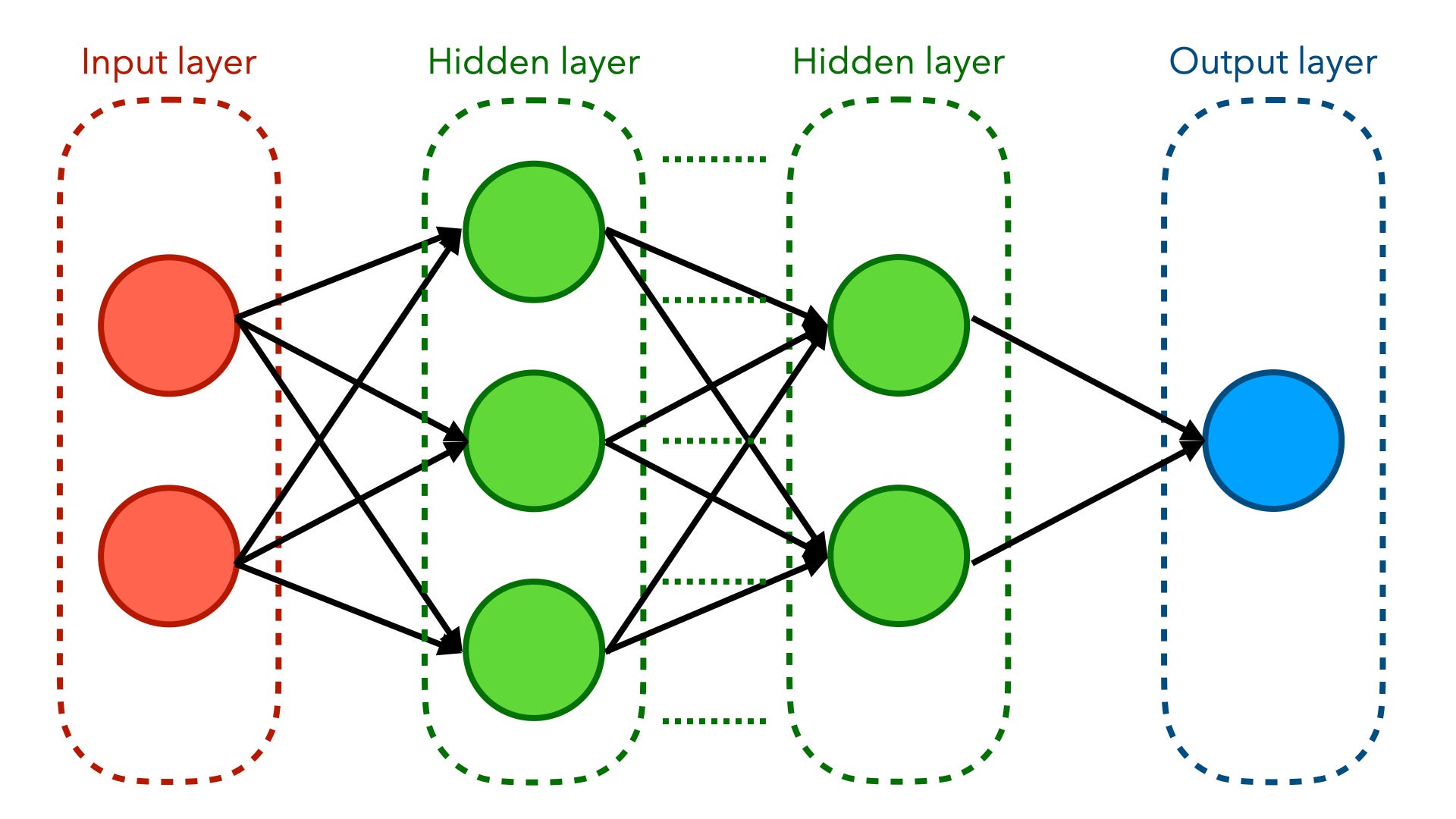

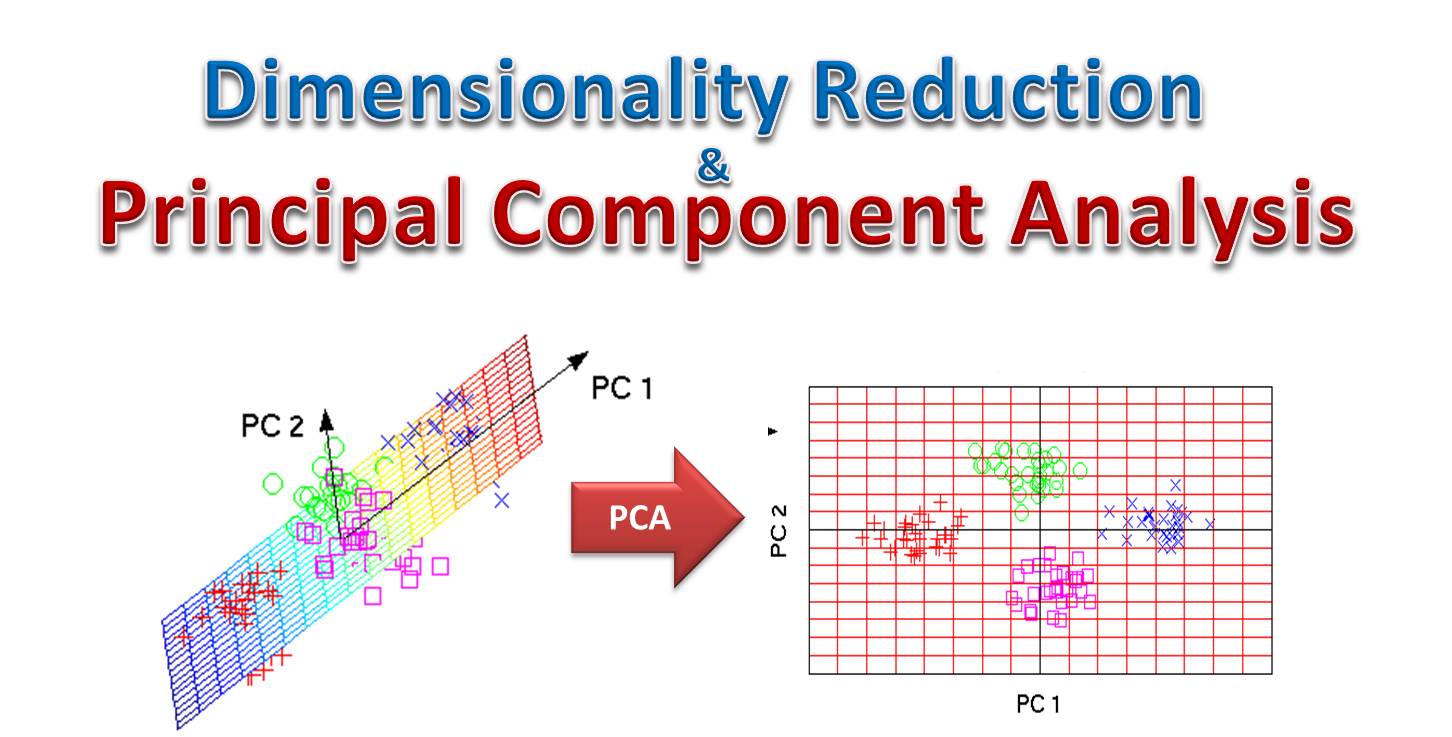

At the heart of this advancement lies the KGML-ag-Carbon model, which combines AI with established knowledge from agricultural science. The framework’s architecture is designed around the causal relationships identified within agricultural models. The model is first pre-trained on synthetic data and then fine-tuned using real-world observations. This approach improves the precision of carbon cycle predictions, and it produces those predictions efficiently and quickly.
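To make the two-stage training idea concrete, here is a minimal sketch of pre-training on synthetic data followed by fine-tuning on a handful of observations. It is not the KGML-ag-Carbon implementation: the linear model, the `process_model` function standing in for an agricultural simulator, the learning rates, and all numeric values are assumptions chosen purely for illustration.

```python
import random


def process_model(x):
    """Hypothetical stand-in for a process-based simulator (assumed form)."""
    return 2.0 * x + 1.0


def fit(w, b, data, lr, epochs):
    """Gradient descent on mean squared error for the model y = w * x + b."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(w, b, data):
    """Mean squared error of the model on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


rng = random.Random(0)

# Stage 1 data: abundant synthetic samples generated by the simulator.
synthetic = [(x, process_model(x)) for x in (rng.uniform(0, 1) for _ in range(200))]

# Stage 2 data: a few noisy "field" observations. The assumed real system
# differs slightly from the simulator, which is why fine-tuning helps.
observed = [
    (x, 2.3 * x + 0.8 + rng.gauss(0, 0.02))
    for x in (rng.uniform(0, 1) for _ in range(8))
]

# Pre-train from scratch on synthetic data, then fine-tune on observations.
w_pre, b_pre = fit(0.0, 0.0, synthetic, lr=0.3, epochs=2000)
w_fit, b_fit = fit(w_pre, b_pre, observed, lr=0.1, epochs=200)

print("pre-trained error on observations:", mse(w_pre, b_pre, observed))
print("fine-tuned error on observations: ", mse(w_fit, b_fit, observed))
```

The pre-trained parameters give the second stage a starting point that already reflects the simulator's behavior, so only a small number of observations is needed to adjust toward the real system. A knowledge-guided model would also encode domain structure in its architecture, which this toy example does not attempt.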

Why This Matters

- Climate-Smart Agriculture: The ability to monitor and verify agricultural emissions is crucial for implementing practices that combat climate change while also benefiting rural economies.

- Carbon Credits: Transparent and accurate quantification of greenhouse gas emissions is essential for the validation of carbon credits, encouraging more companies to invest in sustainable practices.

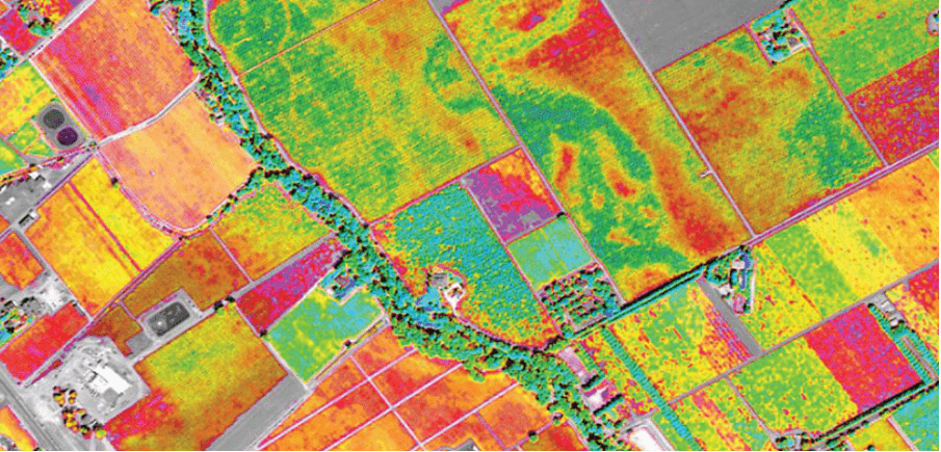

The Role of Satellite Remote Sensing and AI

Traditionally, measuring the carbon stored in soil has been labor-intensive and costly because it requires physical soil samples. KGML-ag instead combines satellite imagery, computational models, and AI into a more comprehensive and accessible solution. This makes carbon measurement more feasible for farmers and paves the way for more accurate carbon credit markets.

The Broader Impact

The implications of this study extend far beyond agricultural emissions monitoring. By laying the groundwork for credible and scalable Measurement, Monitoring, Reporting, and Verification (MMRV) systems, this technology fosters trust in carbon markets and supports the wider adoption of sustainable practices across various sectors.

Looking Ahead: Expanding KGML Applications

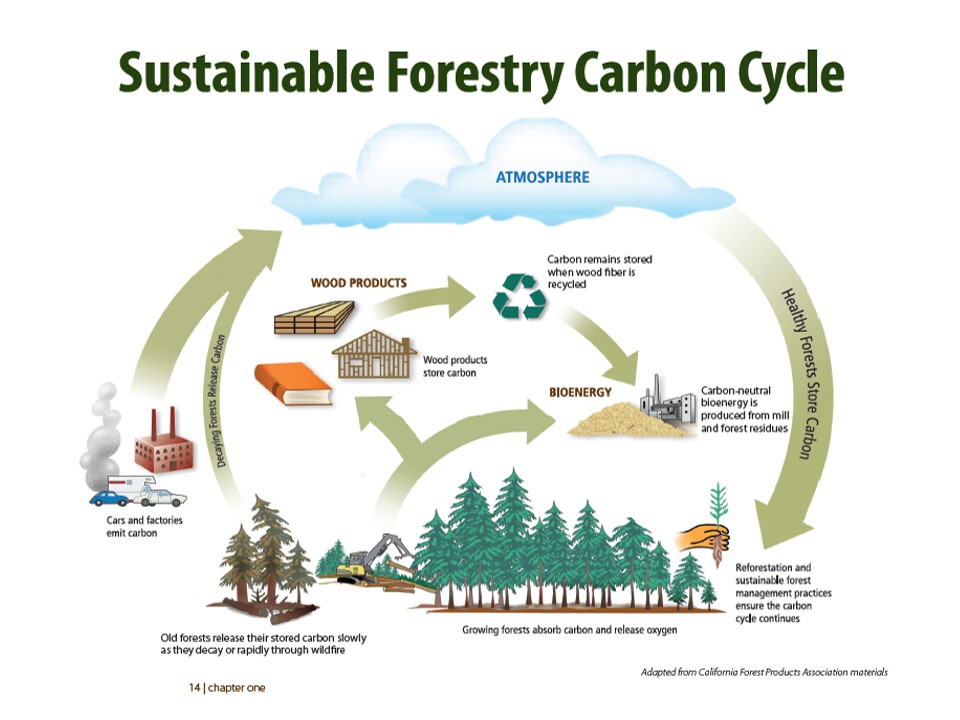

The success of KGML-ag in agriculture has opened new avenues for its application, particularly in sustainable forestry management. Building on KGML’s ability to assimilate diverse types of satellite data, researchers are now exploring ways to optimize forest carbon storage and management. This progress shows how AI can help not only to understand climate change but also to combat it on a global scale.

Final Thoughts

As we navigate the complexities of preserving our planet, it becomes increasingly clear that innovative solutions like KGML-ag are instrumental in bridging the gap between technology and sustainability. By enhancing our capability to accurately monitor and manage carbon cycles, we take a significant stride towards a more sustainable future.

In the realm of technology and sustainability, my work and experiences have shown me the importance of innovation in driving change. The advances in AI and their application to critical global issues like climate change affirm the belief that technology holds the key to not only understanding but also preserving our world for future generations.
