Powering Artificial Intelligence and the Challenges Ahead

As we continue to push the boundaries of what artificial intelligence (AI) can achieve, one critical factor is becoming increasingly apparent: the immense amount of power needed to sustain and advance these technologies. This article explores the challenges and opportunities in powering AI, focusing on energy needs, merit-based hiring in the tech industry, and the hardware infrastructure behind it all.

The Energy Requirements of Modern AI

The power demands of modern AI systems are very high. To put it into perspective, doubling or even tripling our current electricity supply may not be enough to fully support an AI-driven economy. This requirement stems from the sheer volume of computing needed for AI applications, from self-driving cars to advanced medical diagnostics.

For example, consider an AI that analyzes a photograph and accurately diagnoses skin cancer. A single diagnosis is cheap to compute, but training such a model and then running it for millions of patients requires enormous computational resources. While such advancements could save countless lives and reduce medical costs, the energy required to sustain these operations at scale is immense. Think of the electricity needed to power New York City; now double or even triple that just to meet the energy requirements for these advanced AI applications.
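To make the scale discussed above a little more concrete, here is a back-of-envelope sketch of how one might estimate the yearly electricity use of a fleet of AI accelerators. Every number in it (fleet size, watts per chip, utilization, and the data center overhead factor, often called PUE) is a hypothetical placeholder chosen for easy arithmetic, not a measurement, and the function name is my own.

```python
def annual_energy_mwh(num_accelerators, watts_per_accelerator,
                      utilization=1.0, pue=1.0):
    """Rough yearly electricity use of an accelerator fleet, in MWh.

    utilization: fraction of the year the chips run at the given power.
    pue: facility overhead multiplier (cooling, power delivery);
         1.0 would mean no overhead at all.
    """
    hours_per_year = 24 * 365
    # Energy drawn by the chips themselves, in watt-hours.
    it_energy_wh = (num_accelerators * watts_per_accelerator
                    * utilization * hours_per_year)
    # Scale by facility overhead, then convert Wh to MWh.
    return it_energy_wh * pue / 1e6


# Hypothetical fleet: 1,000 chips at 500 W each, busy half the time,
# in a facility with 50% overhead. These inputs are assumptions.
example = annual_energy_mwh(1000, 500, utilization=0.5, pue=1.5)
print(f"{example:.0f} MWh per year")
```

With real figures plugged in, the same arithmetic can be compared against a city's or a country's annual consumption to sanity-check claims about scale.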

Industry experts argue that we have the necessary energy resources if we fully leverage our natural gas and nuclear capabilities. The natural gas reserves in Ohio and Pennsylvania alone could power an AI-driven economy for centuries. However, current policies restrict the extraction and utilization of these resources, putting the future of AI innovation at risk.

Merit-Based Hiring in AI Development

Another crucial factor in the AI race is the talent behind the technology. It’s essential that we prioritize merit-based hiring to ensure the most capable individuals are developing and managing these complex systems. Whether one is black or white, the focus should be on skill and expertise rather than fulfilling diversity quotas.

Many industry leaders, such as Elon Musk, have shifted their focus to hiring the most talented engineers and developers, regardless of bureaucratic diversity requirements. Musk’s evolution from a center-left Democrat to a more conservative stance can be attributed to his desire to hire the best talent to accomplish ambitious goals like colonizing Mars. This focus on merit over mandated diversity is crucial for keeping the U.S. competitive in the global AI race.

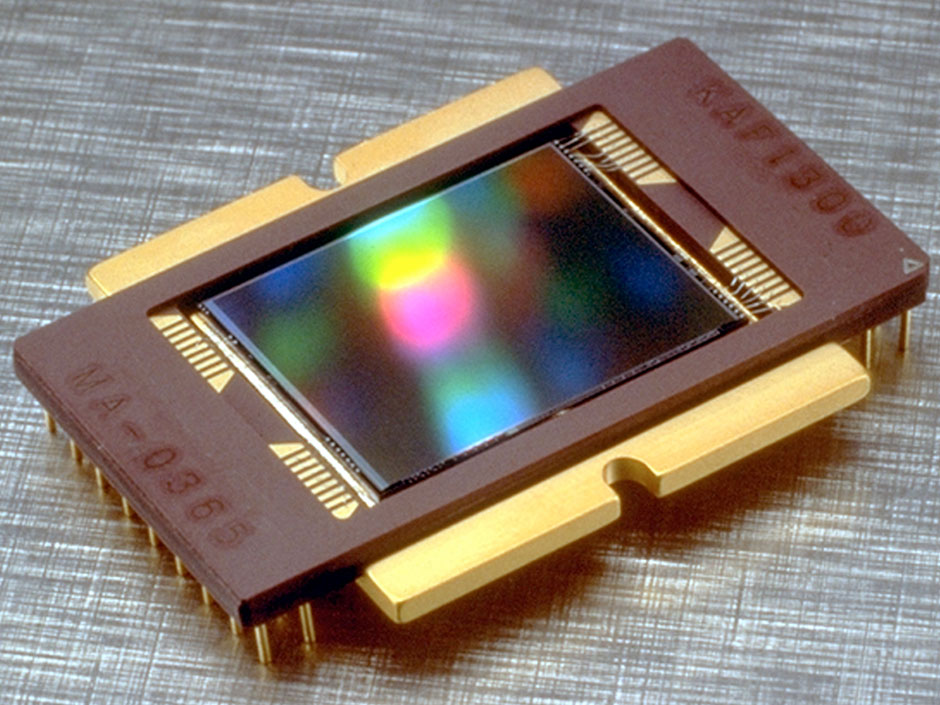

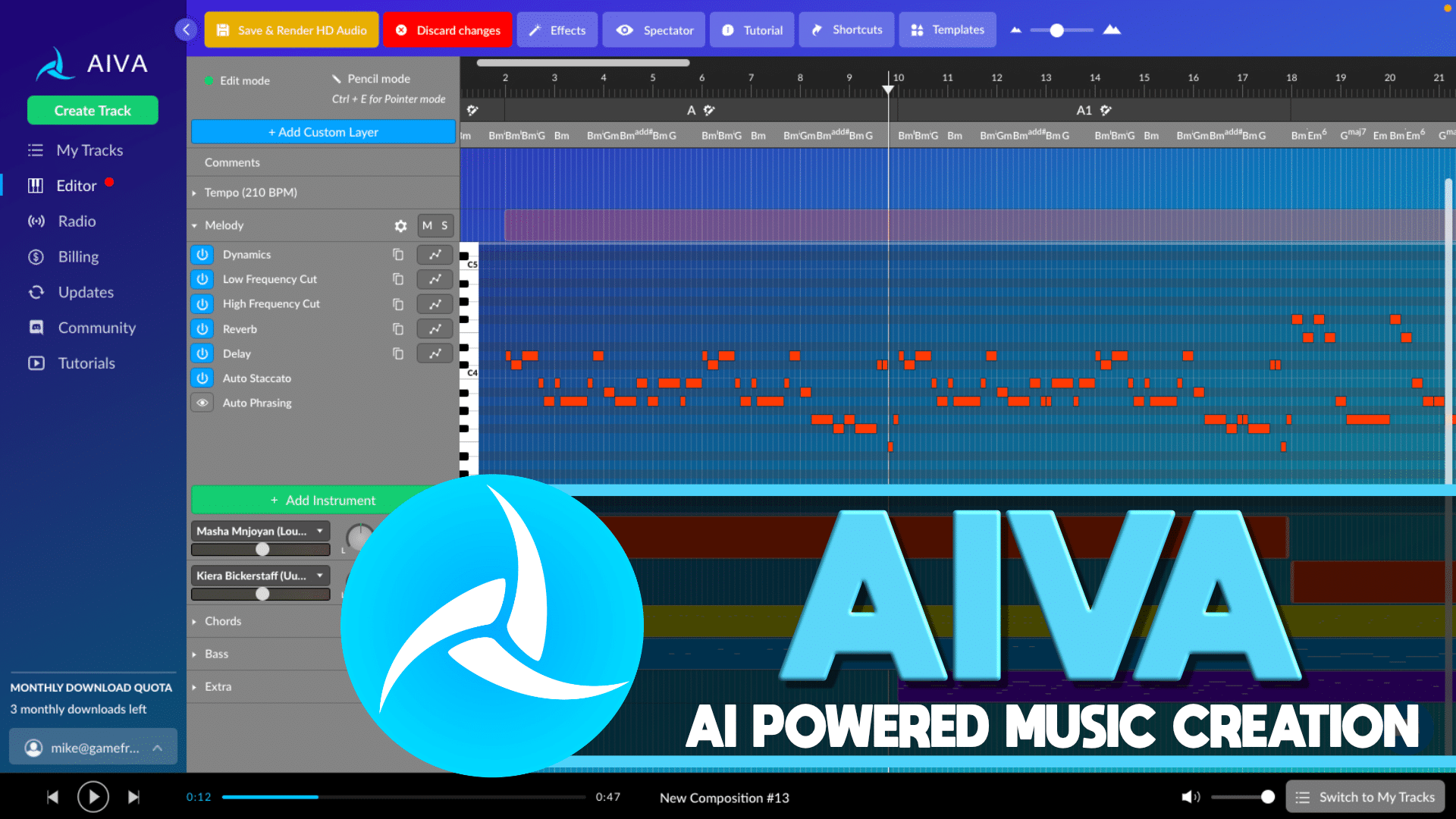

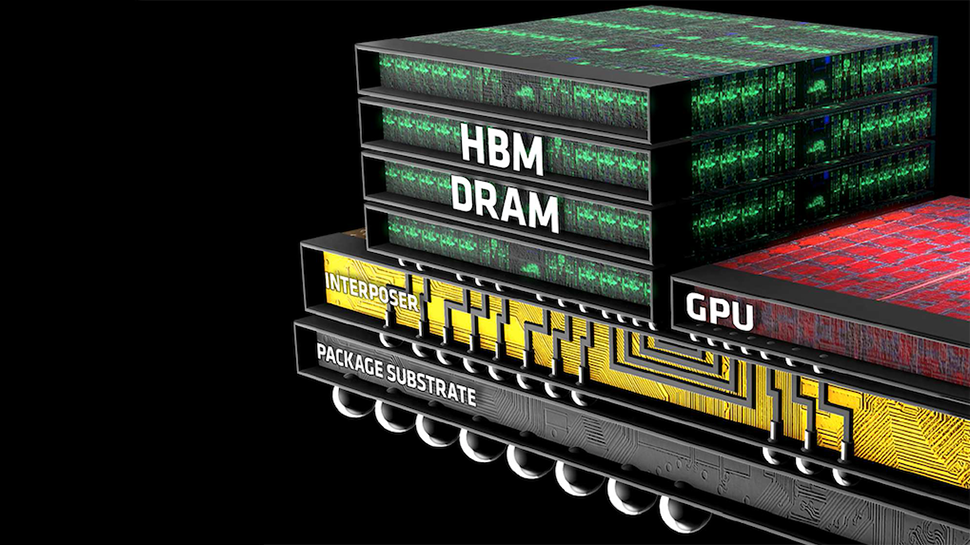

The Importance of Advanced Hardware Infrastructure

AI development isn’t just about software; it’s also heavily reliant on hardware, specifically advanced computer chips. China has made significant investments in this area, posing a substantial competitive threat. The U.S. must build the infrastructure to manufacture next-generation computer chips domestically to remain at the forefront of AI technology.

Legislation aimed at promoting the American computer chip industry has been enacted but has faced implementation challenges due to progressive political agendas. Companies are required to meet various diversity and human resources criteria before they can even start manufacturing. This focus on bureaucratic requirements can hinder the rapid development needed to compete with global AI leaders like China.

What Needs to Be Done

To power the future of AI effectively, several steps need to be taken:

- Unleashing American Energy: Utilizing existing natural gas and nuclear resources to meet the energy demands of an AI-driven economy.

- Merit-Based Hiring: Ensuring the best talent is hired based on skill and expertise rather than fulfilling diversity quotas.

- Investment in Hardware: Building the infrastructure to manufacture advanced computer chips domestically.

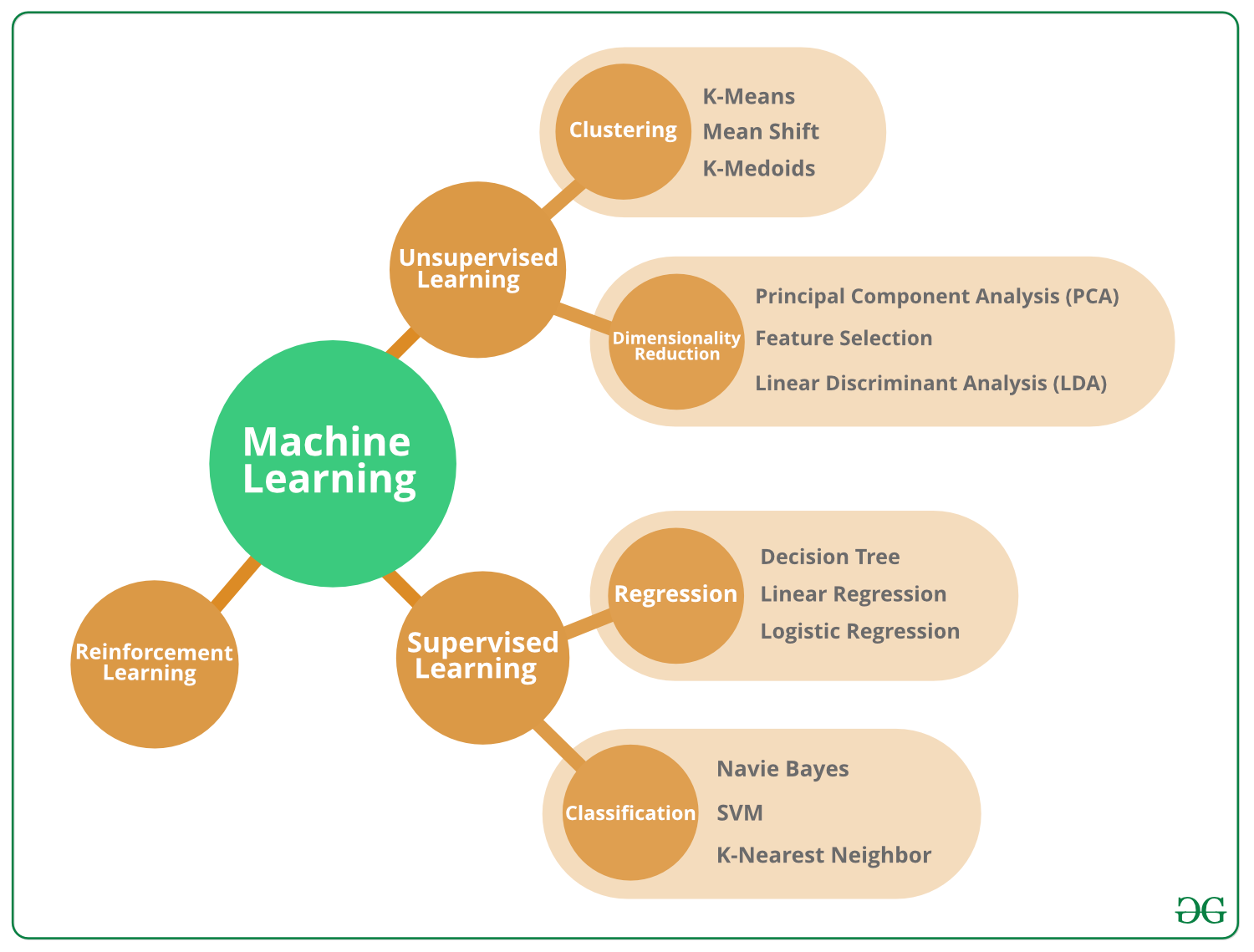

In previous articles, I’ve discussed the challenges of debugging machine learning algorithms and the power needs of artificial intelligence. These issues are interconnected; solving one often affects the other. For instance, more advanced hardware can shorten debugging cycles, but running that hardware draws more power.

One thing is clear: the future of AI is bright but fraught with challenges. By addressing these power needs and focusing on merit-based hiring and hardware development, we can continue to innovate and lead in the global AI race.

Ultimately, ensuring we have the power and talent to advance AI technologies is not just an industrial priority but a national one. We must take strategic steps today to secure a prosperous, AI-driven future.
