Delving Deep into Clustering: The Unseen Backbone of Machine Learning Mastery

In recent articles, we’ve explored the broad landscape of Artificial Intelligence (AI) and Machine Learning (ML), from the role of numerical analysis techniques such as Newton’s Method to the potential of renewable energy for a more sustainable AI. Building on that journey, today we dive deep into Clustering, a fundamental yet profound area of Machine Learning.

Understanding Clustering in Machine Learning

At its core, Clustering is about grouping a set of objects so that objects in the same group (a cluster) are more similar to each other, according to some chosen similarity or distance measure, than to objects in other groups. It’s a mainstay of unsupervised learning, with applications ranging from statistical data analysis in many scientific disciplines to pattern recognition, image analysis, information retrieval, and bioinformatics.

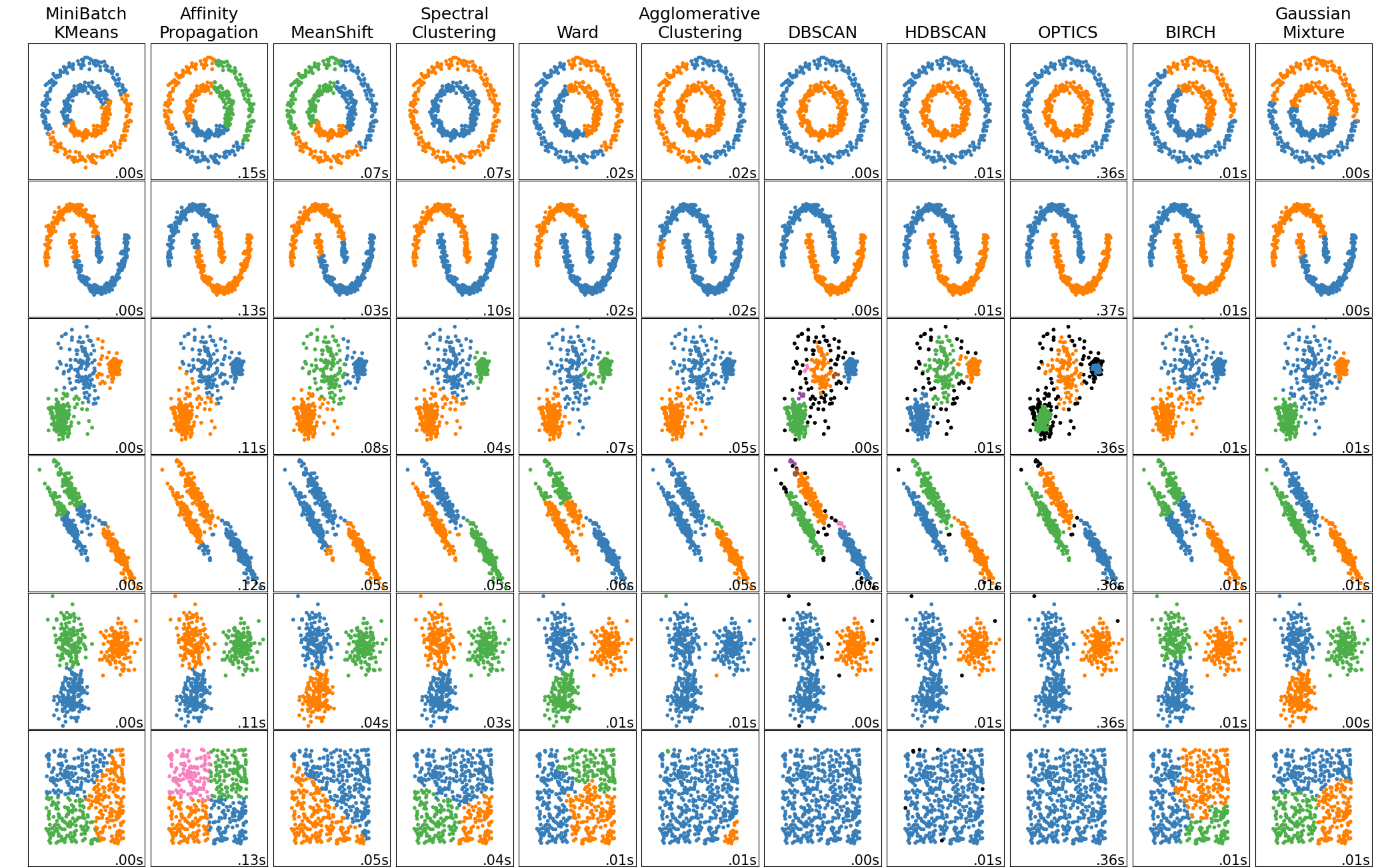

Types of Clustering Algorithms

- K-means Clustering: Perhaps the best-known clustering technique, K-means partitions data into k clusters by minimizing the within-cluster sum of squared distances between each point and the centroid of its cluster.

- Hierarchical Clustering: This method builds a multilevel hierarchy of clusters, either bottom-up by successively merging clusters (agglomerative) or top-down by successively splitting them (divisive). The result is usually drawn as a dendrogram, a tree-like diagram that records the sequence of merges or splits.

- DBSCAN (Density-Based Spatial Clustering of Applications with Noise): This technique identifies clusters as high-density regions separated by regions of low density, and labels points in sparse regions as noise. Unlike K-means, DBSCAN does not require the number of clusters to be specified in advance.
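To make the contrast between the last two approaches concrete, here is a minimal pure-Python sketch of each. The function names, the use of Euclidean distance, the choice of single linkage (merging the two clusters whose closest members are nearest; other linkage criteria exist), and the sample points are all illustrative assumptions. `single_linkage_merges` returns the merge sequence a dendrogram would draw, and `dbscan` follows the standard DBSCAN procedure, with `eps` as the neighborhood radius and `min_samples` as the minimum neighborhood size (counting the point itself). Both are naive, quadratic-or-worse implementations meant for reading, not for large data sets.

```python
import math


def single_linkage_merges(points):
    """Naive agglomerative clustering with single linkage (illustrative sketch).

    Starts with every point in its own cluster and repeatedly merges the two
    closest clusters. Returns the merge sequence (what a dendrogram draws)
    as (cluster_a, cluster_b, distance) tuples of point indices.
    """
    clusters = [[i] for i in range(len(points))]
    merges = []
    while len(clusters) > 1:
        best = None
        # Single linkage: cluster distance = distance between their closest members
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                d = min(math.dist(points[i], points[j])
                        for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merges.append((sorted(clusters[a]), sorted(clusters[b]), d))
        # Replace the two merged clusters with their union
        merged = clusters[a] + clusters[b]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (a, b)]
        clusters.append(merged)
    return merges


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN sketch returning one label per point; -1 marks noise."""
    n = len(points)

    def neighbors(i):
        # Indices of all points within eps of point i, including i itself
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    labels = [None] * n  # None means "not visited yet"
    cluster_id = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise for now; may later be claimed as a border point
            continue
        # i is a core point: start a new cluster and expand it
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster_id  # former noise point becomes a border point
            if labels[j] is not None:
                continue  # already assigned (including new border points): do not expand
            labels[j] = cluster_id
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels


print(single_linkage_merges([(0, 0), (0, 1), (4, 0), (4, 2)]))
# [([0], [1], 1.0), ([2], [3], 2.0), ([0, 1], [2, 3], 4.0)]
print(dbscan([(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)],
             eps=1.5, min_samples=3))
# [0, 0, 0, 1, 1, 1, -1]
```

In the second call, `dbscan` finds two clusters and flags the isolated point as noise without being told how many clusters to look for, which is exactly the property that distinguishes it from K-means.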

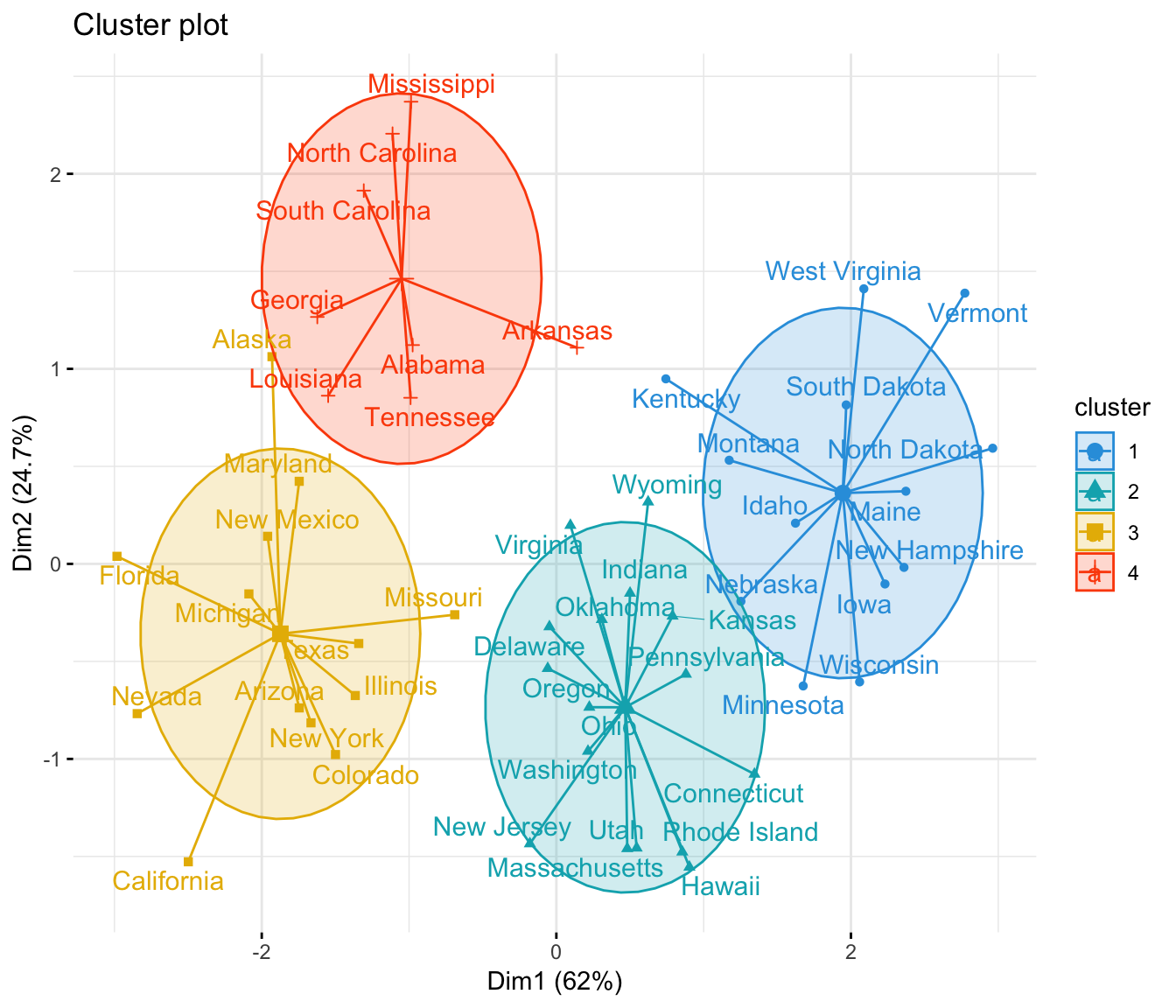

Clustering in Action: A Use Case from My Consultancy

In my work at DBGM Consulting, where we apply ML across domains such as AI chatbots and process automation, clustering has been instrumental. For instance, we deployed a K-means clustering algorithm to segment customer data for a retail client. The resulting segments enabled personalized marketing strategies and significantly improved customer engagement and satisfaction.

The Mathematical Underpinning of Clustering

At the heart of clustering algorithms like K-means is the minimization of a cost function. For standard K-means, this is the sum of squared distances between each point and the centroid of its assigned cluster, often called the within-cluster sum of squares (WCSS) or inertia. Much of the appeal of these algorithms lies in pairing such a simple objective with a powerful ability to reveal the underlying structure of complex data sets.
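Writing the K-means objective out makes this concrete. Using notation introduced here for illustration (clusters $C_1, \dots, C_k$ with centroids $\mu_1, \dots, \mu_k$), the within-cluster sum of squares is:

$$
J(C_1, \dots, C_k) = \sum_{j=1}^{k} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^2,
\qquad
\mu_j = \frac{1}{|C_j|} \sum_{x_i \in C_j} x_i
$$

The classic Lloyd's algorithm minimizes $J$ by alternating two steps: assign each point to its nearest centroid, then recompute each $\mu_j$ as the mean of the points assigned to $C_j$. Neither step can increase $J$, so the procedure converges, but only to a local minimum, which is why the choice of initial centroids matters.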

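```python
import random

def compute_kmeans(data, num_clusters, iters=100, seed=0):
    # Minimal sketch of Lloyd's algorithm; iters and seed are illustrative additions
    centroids = random.Random(seed).sample(list(data), num_clusters)  # random data points as initial centroids
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest centroid (squared distance)
        clusters = [[] for _ in range(num_clusters)]
        for p in data:
            nearest = min(range(num_clusters), key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])))
            clusters[nearest].append(p)
        # Update step: move each centroid to its cluster's mean (an empty cluster keeps its centroid)
        centroids = [[sum(c) / len(pts) for c in zip(*pts)] if pts else centroids[j]
                     for j, pts in enumerate(clusters)]
    return clusters
```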

Challenges and Considerations in Clustering

Despite their apparent simplicity, clustering methods pose several challenges in practice:

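- Choosing the Number of Clusters: Heuristics such as the elbow method, which plots the within-cluster sum of squares against k and looks for the point where adding more clusters stops paying off, can help, but the decision often hinges on domain knowledge and the specific nature of the data.

- Handling Different Data Types: Distance-based algorithms are sensitive to feature scales, so numeric features are usually standardized first, and categorical features need an encoding or a dissimilarity measure suited to them.

- Sensitivity to Initialization: Some algorithms, like K-means, can converge to different local optima depending on the initial cluster centers, which makes results hard to reproduce. Common mitigations include careful seeding (such as k-means++), running several initializations and keeping the lowest-cost result, and fixing the random seed.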
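These three challenges are often handled together in one workflow: standardize the features, run K-means from several random starts and keep the best result, then compare the best cost across values of k to look for an elbow. The pure-Python sketch below illustrates that workflow under stated assumptions: the helper names (`standardize`, `kmeans_once`, `best_of_n`, `elbow_curve`), the plain random initialization (rather than k-means++), and the toy data are all hypothetical choices for illustration. Library implementations such as scikit-learn's `KMeans` expose the same ideas through parameters like `n_init` and `random_state`.

```python
import math
import random


def standardize(data):
    """Rescale every feature to zero mean and unit variance (z-scores)."""
    cols = list(zip(*data))
    means = [sum(c) / len(c) for c in cols]
    # Population standard deviation; use 1.0 for constant features to avoid dividing by zero
    stds = [math.sqrt(sum((x - m) ** 2 for x in c) / len(c)) or 1.0
            for c, m in zip(cols, means)]
    return [tuple((x - m) / s for x, m, s in zip(row, means, stds)) for row in data]


def kmeans_once(data, k, rng, max_iter=100):
    """One run of Lloyd's algorithm from random starting centroids (hypothetical helper).

    Returns (labels, wcss), where wcss is the within-cluster sum of squares.
    """
    centroids = rng.sample(list(data), k)  # k randomly chosen data points as initial centroids
    labels = None
    for _ in range(max_iter):
        # Assignment step: index of the nearest centroid for every point
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in data]
        if new_labels == labels:
            break  # assignments stopped changing, so this run has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points
        for j in range(k):
            members = [p for p, lab in zip(data, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    wcss = sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(data, labels))
    return labels, wcss


def best_of_n(data, k, n_init=10, seed=0):
    """Run K-means n_init times from different random starts; keep the lowest-WCSS result."""
    rng = random.Random(seed)  # a fixed seed makes the whole procedure reproducible
    return min((kmeans_once(data, k, rng) for _ in range(n_init)), key=lambda r: r[1])


def elbow_curve(data, max_k, **kwargs):
    """Best WCSS for k = 1..max_k; the 'elbow' is where the curve stops dropping sharply."""
    return [best_of_n(data, k, **kwargs)[1] for k in range(1, max_k + 1)]


points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
print(elbow_curve(standardize(points), max_k=4))
```

On these two well-separated groups, the printed curve drops sharply from k=1 to k=2 and is nearly flat afterwards, which points to k=2. Keeping the lowest-WCSS run out of several restarts guards against an unlucky start that converges to a poor local optimum.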

Looking Ahead: The Future of Clustering in ML

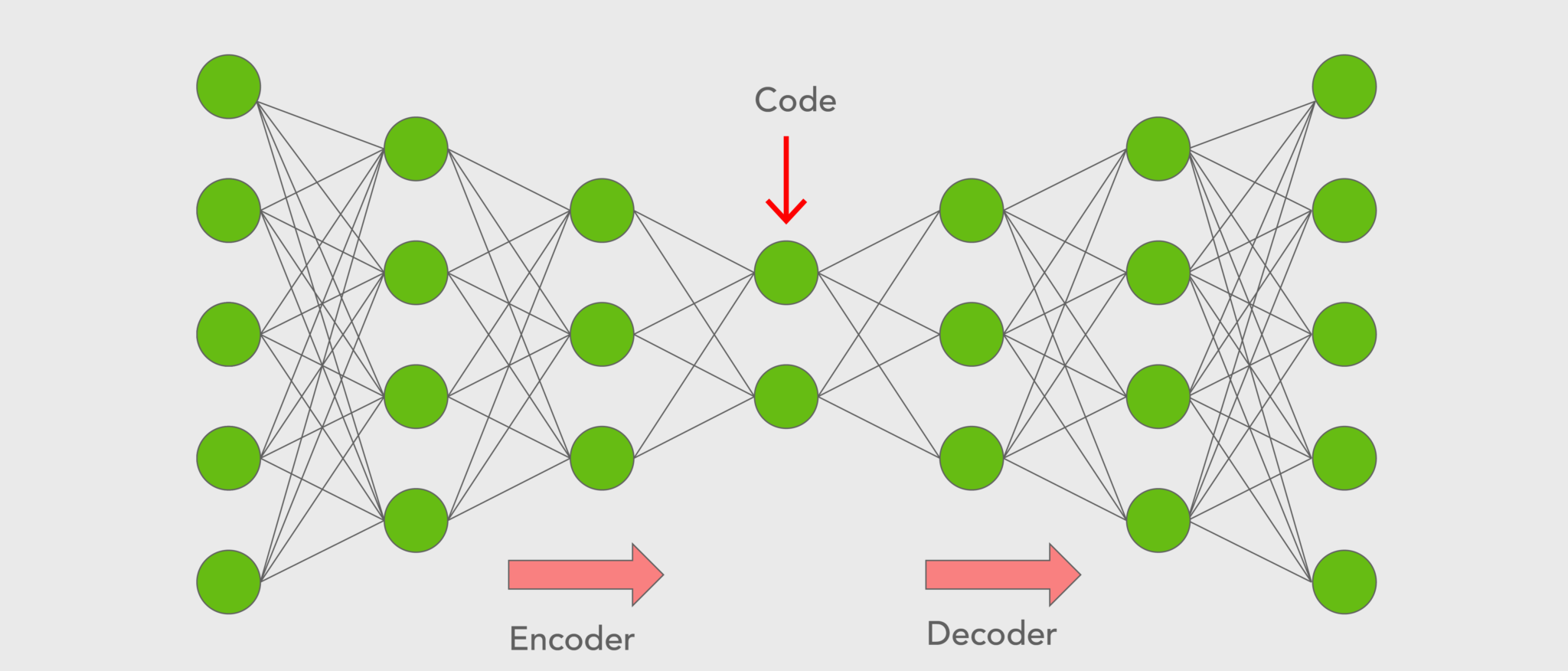

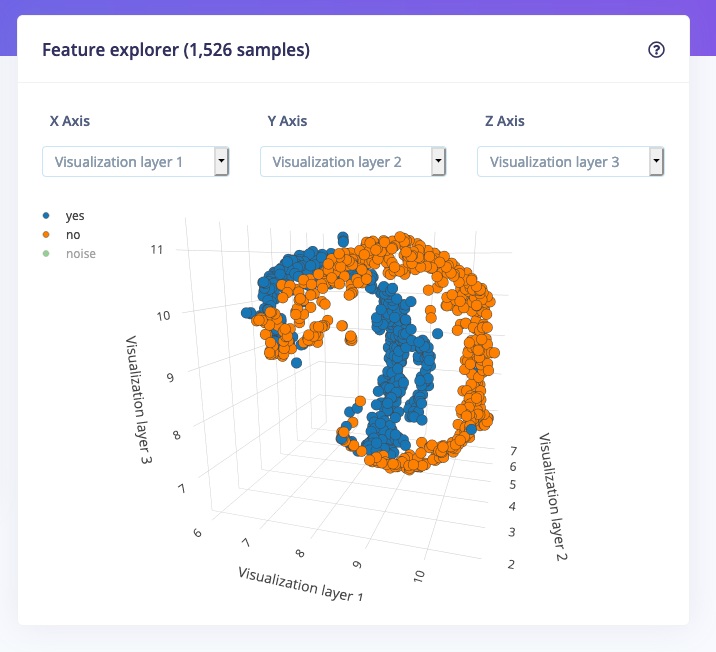

As Machine Learning continues to evolve, the role of clustering will only grow in significance, driving advancements in fields as diverse as genetics, astronomy, and beyond. The convergence of clustering with deep learning, through techniques such as Deep Embedded Clustering (DEC), which learns a feature representation and cluster assignments jointly, opens new ways to understand complex, high-dimensional data.

In conclusion, clustering, a seemingly elementary concept, forms the backbone of many sophisticated Machine Learning models and applications. As we continue to push the boundaries of AI, exploring and refining clustering algorithms will remain a cornerstone of our work.
