Human and AI Cognition: Beyond the Anthropocentric Frame

To navigate the intersection of human cognition and artificial intelligence (AI), we must challenge our anthropocentric perspectives. Human cognition is interwoven with emotional states, societal norms, and physiological needs, and it shapes what we understand by “thinking.” AI cognition, by contrast, presents a paradigm that is fundamentally distinct from our own yet potentially complementary to it.

The Biological Paradigm and AI’s Digital Cognition

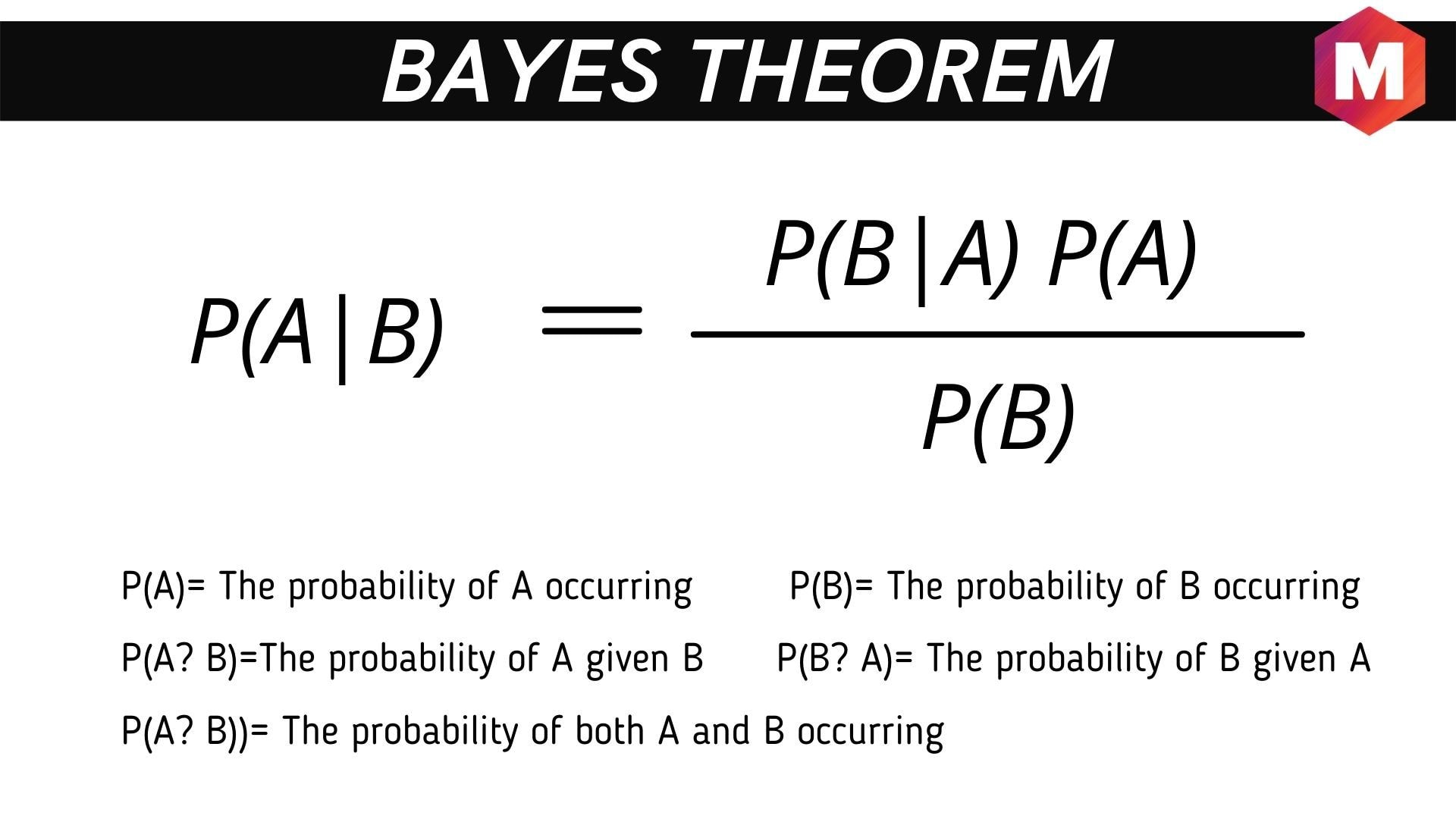

Humans operate within the bounds of biology: our cognitive processes are shaped significantly by physiological responses to stimuli, such as the instinctual fight-or-flight response triggered by adrenaline. AI’s cognition contrasts sharply with this. It has no such biological drivers and operates through algorithms and data analysis. Exploring this dichotomy reveals AI’s potential not to duplicate human cognition but to supplement it in its own distinct way.

Digital Cognition: Unbounded Potential

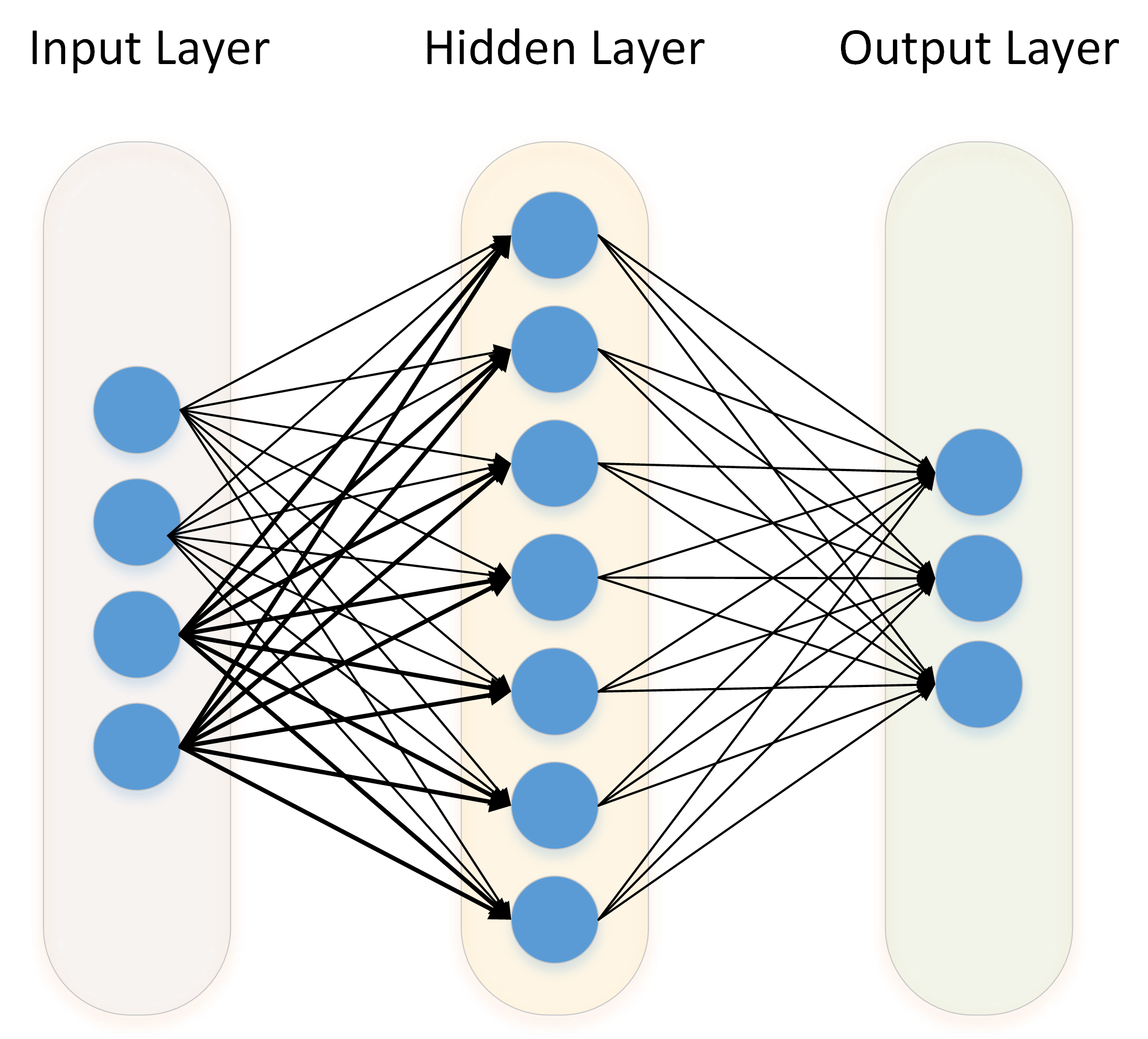

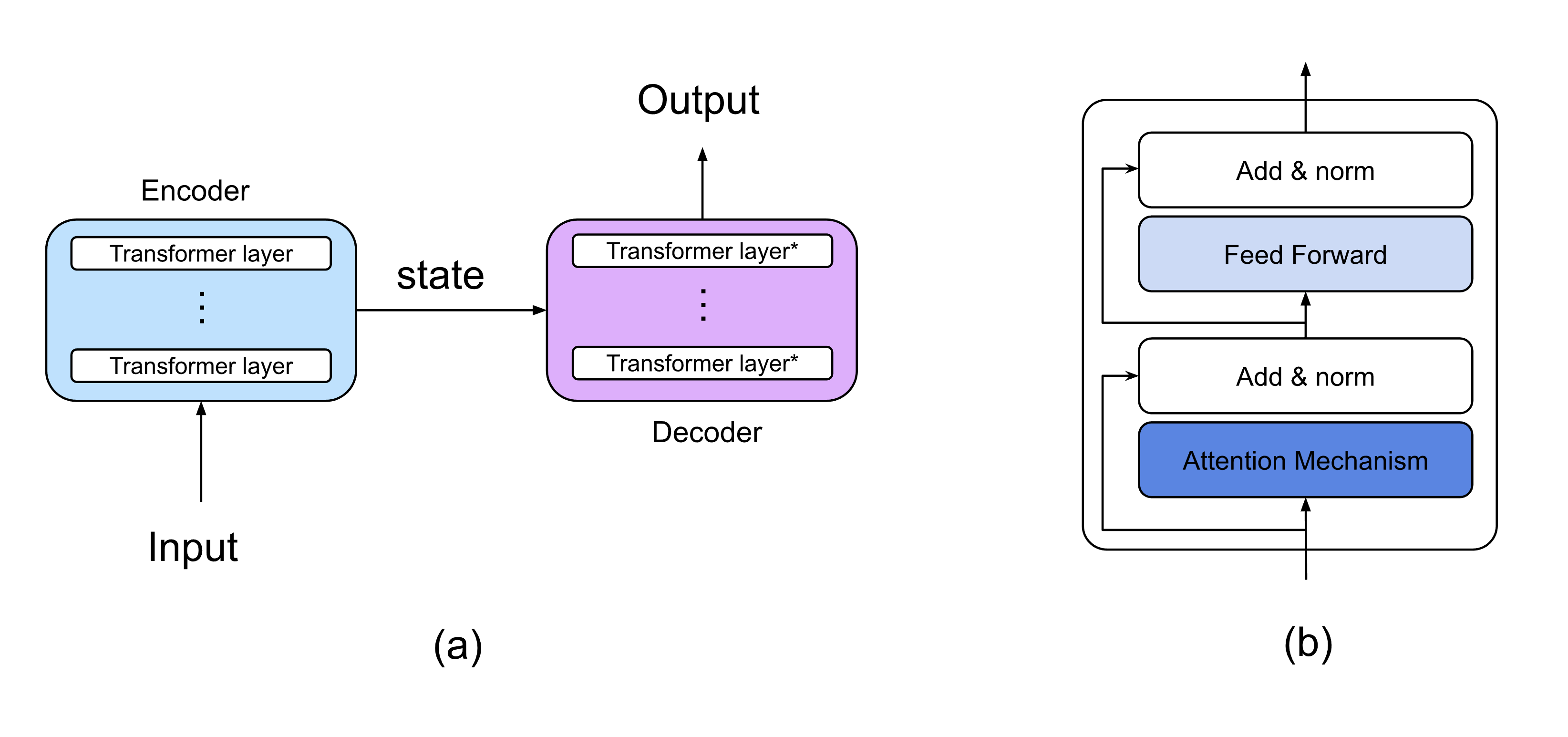

The AI “brain” is not constrained by the physical and emotional limitations that bound human thought. It works from data, patterns, and algorithmic learning, iterating and refining its processing at a pace and breadth far beyond human capacity. This divergence suggests that AI may arrive at forms of understanding and insight that are difficult to reach within the confines of human cognition. In that sense, AI promises to augment human decision-making and help us tackle challenges with new approaches.

Reenvisioning Thought and Consciousness

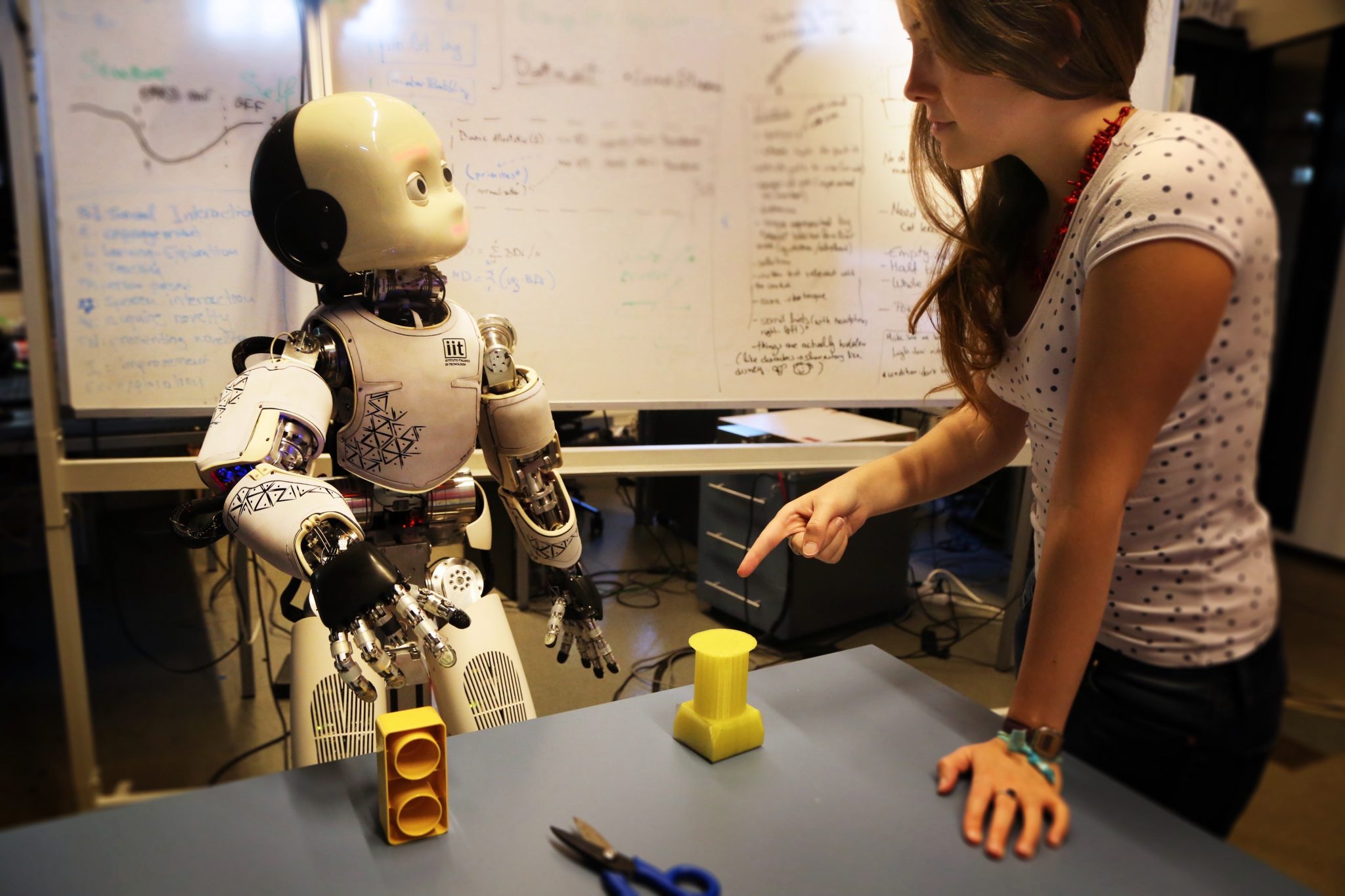

Our quest for AI has largely been driven by a desire to replicate human cognitive capabilities. However, this anthropocentric lens may inadvertently restrict our grasp of AI’s potential. The emergent digital cognition of AI is distinctly different from human cognition yet capable of meshing with it, which suggests synergy rather than rivalry. Here, AI could serve as an extension of human intelligence, offering new perspectives that catalyze cognitive and societal progress.

Towards a Synergistic Cognitive Evolution

The shift to a cooperative model of human and AI cognition invites us to reevaluate how we engage with AI technologies. In this model, AI doesn’t emulate human thought but introduces a new form of cognition. This digital cognition, working in tandem with human intellect, could unlock transformative insights and solutions that neither would reach alone.

Embracing Our Cognitive Collaboration

Collaboration between AI and human cognition must still be guided by human-centric principles. By envisioning AI as a collaborator, we draw on the strengths of both human and digital cognition and help ensure that our collective future is marked by enrichment and ethical progress. This collaborative ethos not only redefines our interaction with AI systems but also paves the way for a future in which our cognitive capacities, both biological and digital, evolve together towards shared horizons of understanding and innovation.

On reflection, our journey through AI and machine learning, including the earlier discussion in Supervised Learning’s Impact on AI Evolution, reiterates the significance of viewing AI not as a mere mimicry of human intellect but as a vibrant contributor to our cognitive symphony. As we chart the course of this synergistic relationship, we stand on the cusp of not just witnessing but actively shaping a redefined scope of cognition.
