The Integral Role of Calculus in Optimizing Cloud Resource Allocation

As a consultant specializing in cloud solutions and artificial intelligence, I’ve come to appreciate how much calculus, and integral calculus in particular, contributes to optimizing resource allocation within cloud environments. Its principles let us apply optimization techniques that are both efficient and cost-effective, two key concerns in the deployment and management of cloud resources.

Understanding Integral Calculus

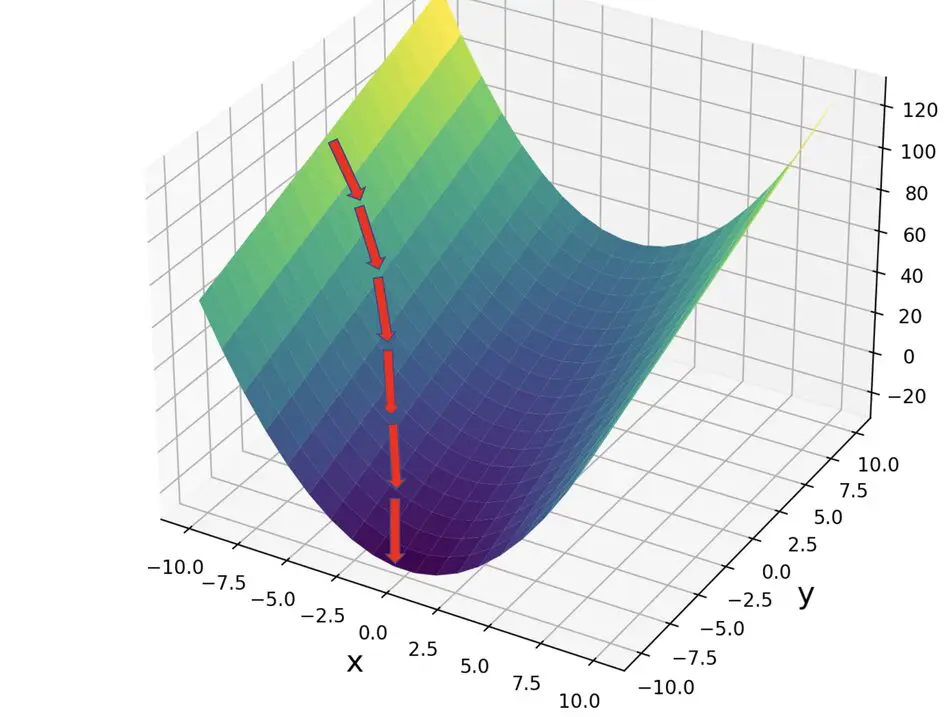

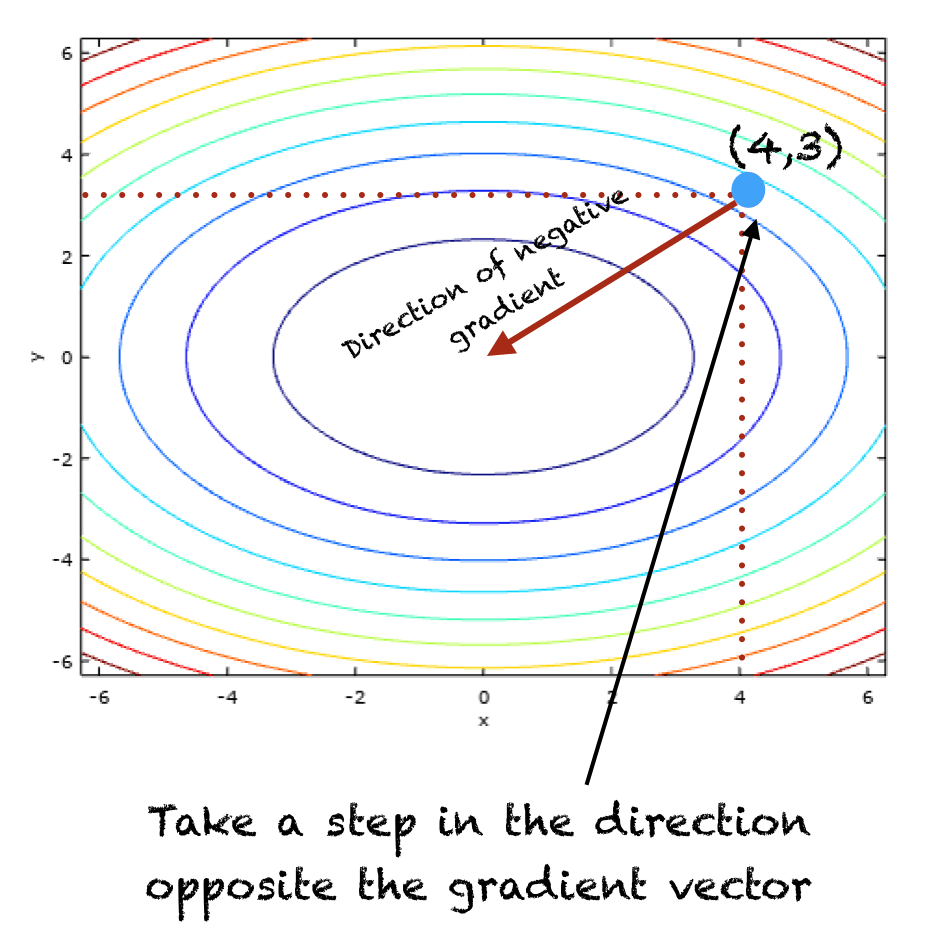

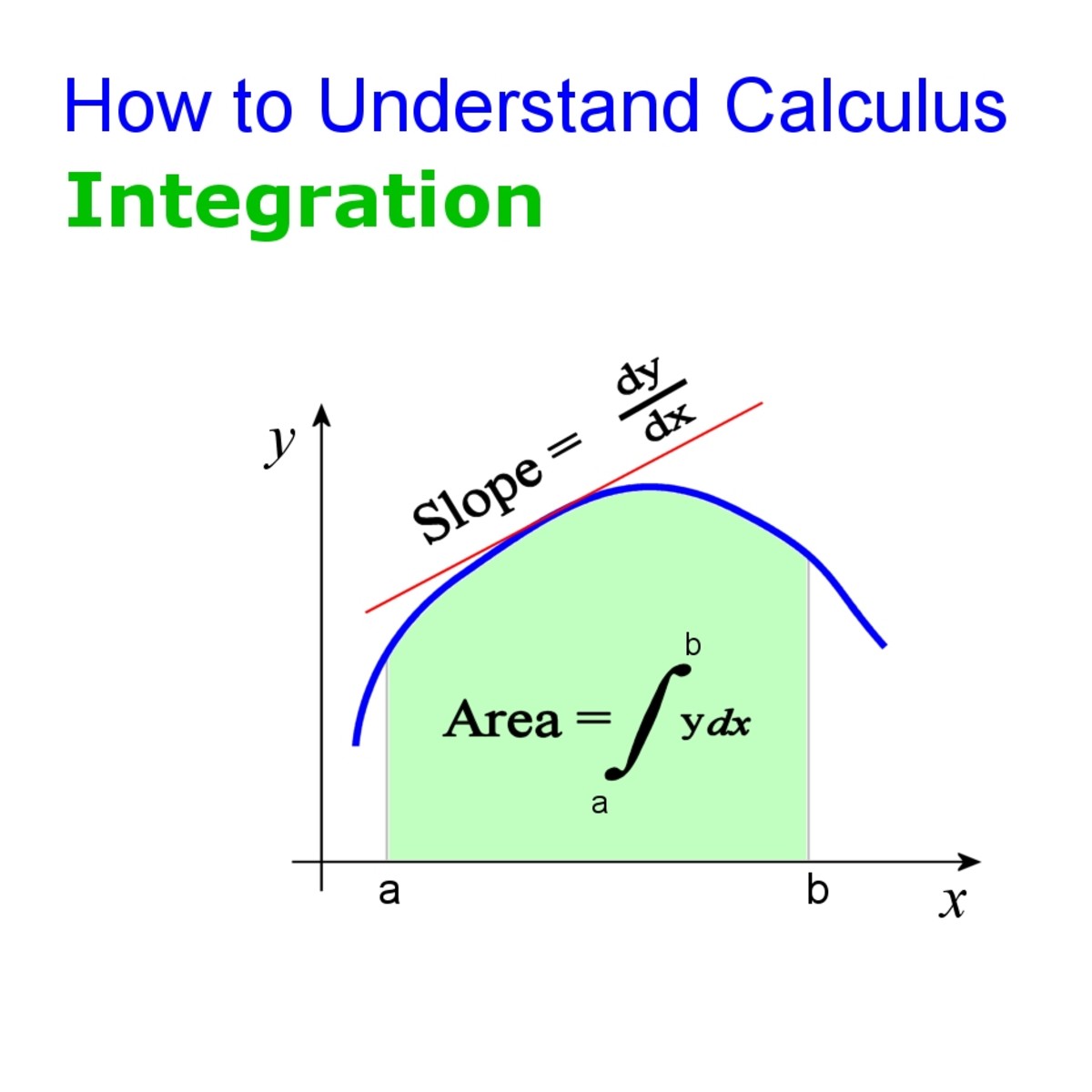

At its core, integral calculus is about accumulation. It helps us calculate the “total” effect of changes that happen in small increments. When applied to cloud resource allocation, it enables us to model and predict resource usage over time accurately. This mathematical tool is essential for implementing strategies that dynamically adjust resources in response to fluctuating demands.

Integral calculus focuses on two main concepts: the indefinite integral and the definite integral. An indefinite integral recovers a function from its known derivative (up to an additive constant), so a known rate of resource consumption yields a general formula for cumulative usage as a function of time. A definite integral, in contrast, evaluates that accumulation over a specific interval, giving a single number such as the total compute consumed between two points in time.
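
As a small worked illustration, assume a hypothetical usage rate of \(r(t) = 2t + 5\) vCPUs at hour \(t\) (the function and its units are invented for this example). The indefinite integral gives the general cumulative-usage formula:

\[\int (2t + 5)\,dt = t^2 + 5t + C\]

The definite integral over the first four hours gives one number:

\[\int_{0}^{4} (2t + 5)\,dt = \left[t^2 + 5t\right]_{0}^{4} = 36 \text{ vCPU-hours}\]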

Application in Cloud Resource Optimization

Imagine a cloud-based application serving millions of users worldwide. The demand on this service can change drastically, rising during peak hours and falling during off-peak times. By applying integral calculus, particularly definite integrals, we can model these demand patterns and allocate resources like computing power, storage, and bandwidth more efficiently.

A definite integral is written as

\[\int_{a}^{b} f(x)\,dx\]

where \(f(x)\) is the rate of resource demand at time \(x\), and \(a\) and \(b\) are the bounds of the interval over which we’re integrating. Its value is the total resource requirement within this interval. Knowing that total is crucial for avoiding both resource wastage and potential service disruptions due to resource shortages.
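
To make the integral concrete, here is a minimal Python sketch that approximates it numerically with the trapezoidal rule. The demand curve `demand_rate` (a 100 vCPU baseline with a daily cycle peaking at 18:00) is purely an assumption for illustration; in practice \(f(x)\) would come from monitoring data.

```python
import math

def demand_rate(t_hours: float) -> float:
    """Hypothetical demand in vCPUs at time t (hours since midnight)."""
    # Assumed shape: 100 vCPU baseline plus a daily cycle peaking at 18:00.
    return 100.0 + 40.0 * math.sin(2.0 * math.pi * (t_hours - 12.0) / 24.0)

def integrate_trapezoid(f, a: float, b: float, steps: int = 1000) -> float:
    """Approximate the definite integral of f over [a, b] (trapezoidal rule)."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for i in range(1, steps):
        total += f(a + i * h)
    return total * h

# Total requirement in vCPU-hours over a full day and over 12:00 to 24:00.
vcpu_hours_full_day = integrate_trapezoid(demand_rate, 0.0, 24.0)
vcpu_hours_evening = integrate_trapezoid(demand_rate, 12.0, 24.0)
```

Changing the bounds `a` and `b` is all it takes to ask the same question about a different window, such as a single peak period.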

Such optimization not only ensures a seamless user experience by dynamically scaling resources with demand but also significantly reduces operational costs, directly impacting the bottom line of businesses relying on cloud technologies.
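
The cost effect can be sketched by comparing capacity provisioned statically for the peak against capacity that follows demand. Everything below is assumed for illustration: the hourly samples are invented, `PRICE_PER_VCPU_HOUR` is not a real provider rate, and perfect demand tracking is an idealization, so the result is an upper bound on savings rather than a prediction.

```python
# Hypothetical demand samples in vCPUs, one per hour from 00:00 to 24:00 (25 points).
hourly_demand = [60, 55, 50, 50, 55, 65, 80, 100, 120, 130, 135, 140,
                 140, 138, 135, 130, 135, 145, 150, 140, 120, 100, 80, 65, 60]
PRICE_PER_VCPU_HOUR = 0.04  # assumed price, not a real provider rate

def trapezoid(samples, dt=1.0):
    """Integrate evenly spaced samples with the trapezoidal rule."""
    return dt * (sum(samples) - 0.5 * (samples[0] + samples[-1]))

# Area under the demand curve: vCPU-hours actually needed.
used_vcpu_hours = trapezoid(hourly_demand)
# Static provisioning holds peak capacity for the whole 24-hour window.
peak_vcpu_hours = max(hourly_demand) * (len(hourly_demand) - 1)

static_cost = peak_vcpu_hours * PRICE_PER_VCPU_HOUR
scaled_cost = used_vcpu_hours * PRICE_PER_VCPU_HOUR
savings_fraction = 1.0 - scaled_cost / static_cost
```

The gap between the two costs is the area between the flat peak line and the demand curve, which is exactly what a definite integral measures.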

Linking Calculus with AI for Enhanced Resource Management

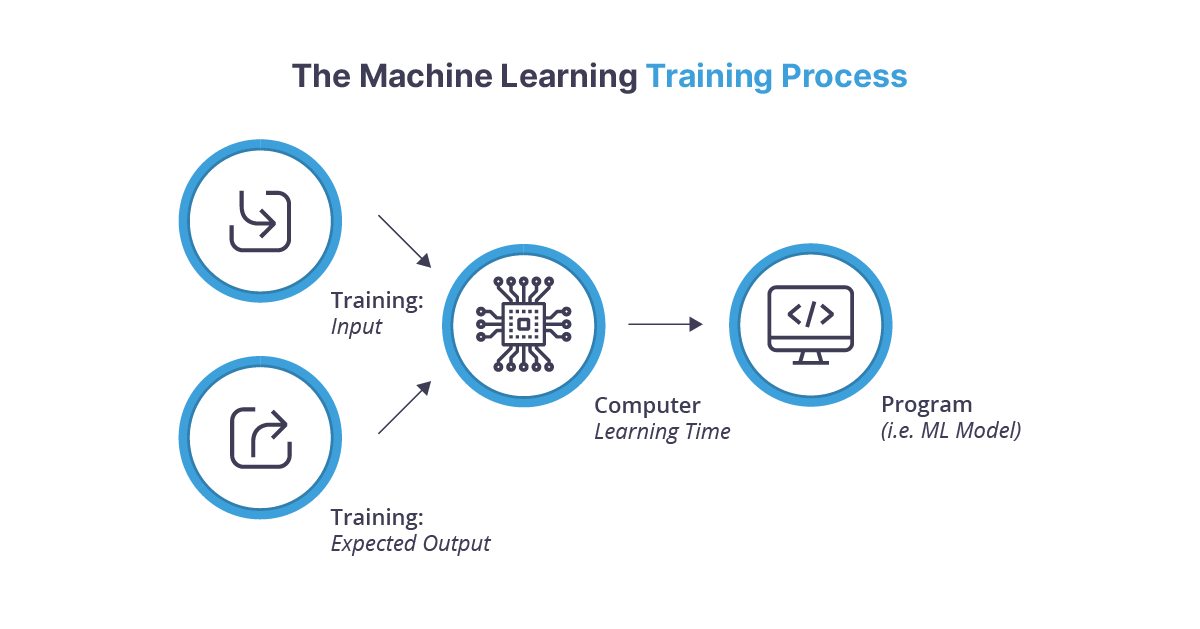

Artificial Intelligence and Machine Learning models further enhance the capabilities provided by calculus in cloud resource management. By analyzing historical usage data through machine learning algorithms, we can forecast future demand with greater accuracy. Integral calculus comes into play by integrating these forecasts over time to determine optimal resource allocation strategies.
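
A rough sketch of that forecast-then-integrate step follows. A least-squares line stands in for a trained machine learning model, which is a deliberate simplification; the `history` values and the three-hour window are hypothetical.

```python
# Hypothetical history: vCPU demand observed at hour marks 0, 1, ..., 5.
history = [100.0, 104.0, 109.0, 113.0, 118.0, 121.0]

def fit_line(ys):
    """Ordinary least-squares fit y = slope * x + intercept for x = 0, 1, 2, ..."""
    n = len(ys)
    x_mean = (n - 1) / 2.0
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    slope = sxy / sxx
    return slope, y_mean - slope * x_mean

def integrate_line(slope, intercept, a, b):
    """Exact definite integral of slope * x + intercept over [a, b]."""
    return 0.5 * slope * (b * b - a * a) + intercept * (b - a)

slope, intercept = fit_line(history)
# Forecast window: the three hours after the last observation (hour 5 to hour 8).
forecast_vcpu_hours = integrate_line(slope, intercept, 5.0, 8.0)
```

With a more realistic forecasting model there is usually no closed-form antiderivative, so the integral would be computed numerically over the predicted values instead.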

Incorporating AI into this process allows for real-time adjustments and predictive resource allocation, minimizing human error and maximizing efficiency. It is a clear demonstration of how calculus and AI together can reshape cloud computing ecosystems.

Conclusion

The synergy between calculus and cloud computing illustrates how fundamental mathematical concepts continue to play a pivotal role in the advancement of technology. By applying the principles of integral calculus, businesses can optimize their cloud resource usage, ensuring cost-efficiency and reliability. As we move forward, the integration of AI and calculus will only deepen, opening new frontiers in cloud computing and beyond.

Further Reading

To deepen your understanding of calculus in technology applications and explore more about the advancements in AI, I highly recommend diving into the discussion on neural networks and their reliance on calculus for optimization, as outlined in “Understanding the Role of Calculus in Neural Networks for AI Advancement.”

Whether you’re progressing through the realms of cloud computing, AI, or any field within information technology, the foundational knowledge of calculus remains an unwavering requirement, showcasing the timeless value of mathematics in contemporary scientific exploration and technological innovation.