Revolutionizing Data Handling in Machine Learning Projects with Query2DataFrame

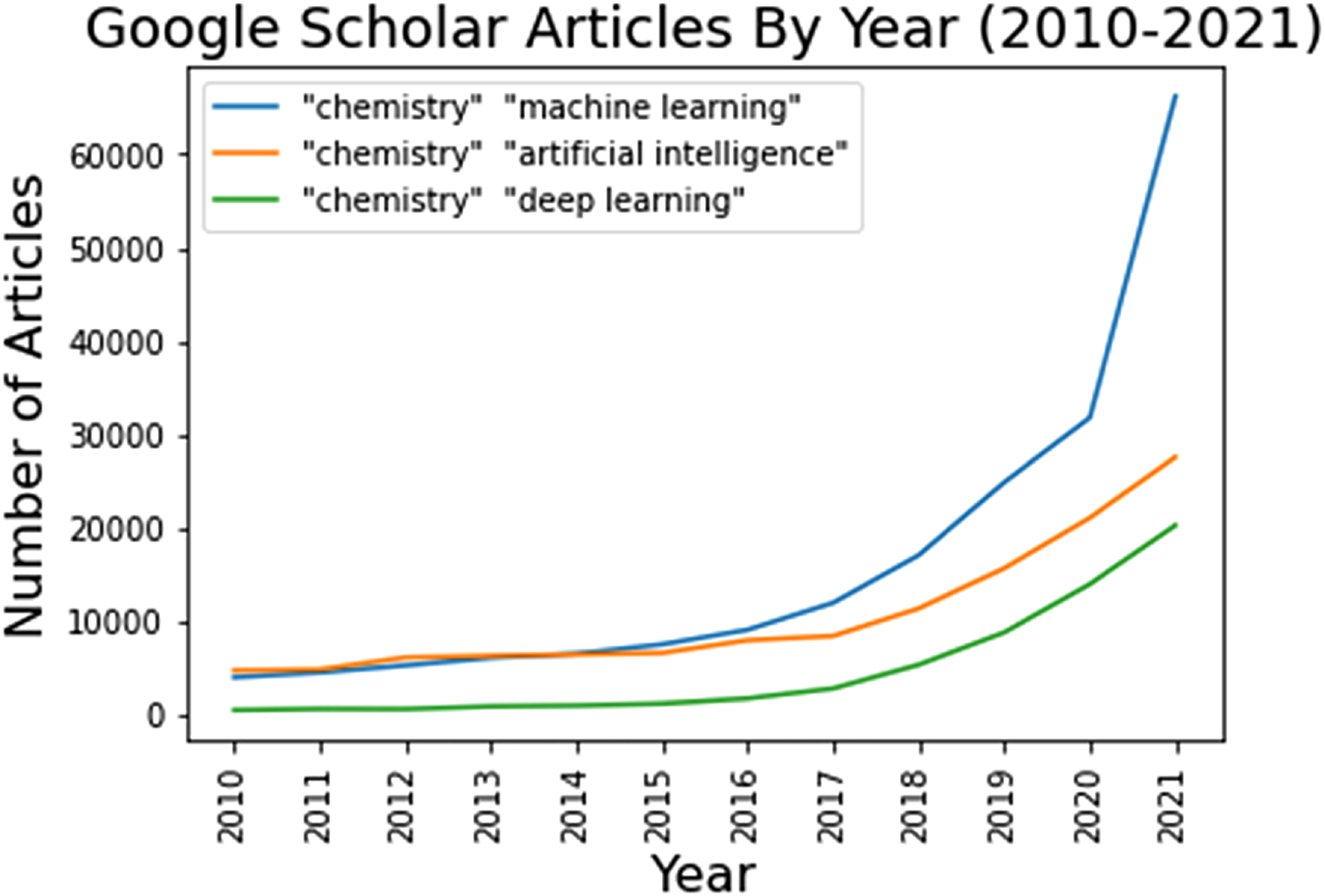

In machine learning and data analysis, being able to manage, retrieve, and preprocess data with little friction matters a great deal. I recently came across a project, Query2DataFrame, that aims to simplify these steps for anyone working with PostgreSQL databases. As someone who works in Artificial Intelligence and machine learning, I find tools like this both exciting and genuinely useful for day-to-day work in the field.

Introducing Query2DataFrame

Query2DataFrame is a toolkit for working with PostgreSQL databases that streamlines retrieving, saving, and loading datasets. Its primary aim is to ease data handling and preprocessing, which are often the most cumbersome and time-consuming steps in data analysis and machine learning projects.

Key Features at a Glance:

- Customizable Data Retrieval: Allows for retrieving data from a PostgreSQL database using customizable query templates, catering to the specific needs of your project.

- Robust Data Saving and Checkpointing: Offers the ability to save retrieved data in various formats including CSV, PKL, and Excel. Moreover, it supports checkpointing to efficiently manage long-running data retrieval tasks.

- Efficient Data Loading: Enables loading datasets from saved files directly into pandas DataFrames, supporting a wide range of file formats for seamless integration into data processing pipelines.
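To make these three features concrete, here is a minimal sketch of the same ideas written with plain pandas. It is not Query2DataFrame's actual API: the function names `retrieve`, `save`, and `load`, the `samples` table, and the query template are all hypothetical, and an in-memory SQLite database stands in for PostgreSQL so the example runs without a server.

```python
import os
import sqlite3
import tempfile
from string import Template

import pandas as pd

# Hypothetical query template: $columns and $table are filled in per project.
# The filter value is passed as a bound parameter rather than interpolated.
# (SQLite uses "?" placeholders; psycopg2 for PostgreSQL would use "%s".)
QUERY_TEMPLATE = Template("SELECT $columns FROM $table WHERE score >= ? ORDER BY id")


def retrieve(conn, table, columns, min_score):
    """Render the template and run it, returning a pandas DataFrame."""
    query = QUERY_TEMPLATE.substitute(table=table, columns=", ".join(columns))
    return pd.read_sql_query(query, conn, params=(min_score,))


def save(df, path):
    """Save a DataFrame, choosing the writer from the file extension."""
    ext = os.path.splitext(path)[1].lower()
    if ext == ".csv":
        df.to_csv(path, index=False)
    elif ext == ".pkl":
        df.to_pickle(path)
    elif ext == ".xlsx":
        df.to_excel(path, index=False)  # needs an Excel engine such as openpyxl
    else:
        raise ValueError(f"unsupported format: {ext}")


def load(path):
    """Load a saved dataset back into a DataFrame based on its extension."""
    ext = os.path.splitext(path)[1].lower()
    if ext == ".csv":
        return pd.read_csv(path)
    if ext == ".pkl":
        return pd.read_pickle(path)
    if ext == ".xlsx":
        return pd.read_excel(path)
    raise ValueError(f"unsupported format: {ext}")


# Stand-in database with a small hypothetical table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE samples (id INTEGER, label TEXT, score REAL)")
conn.executemany(
    "INSERT INTO samples VALUES (?, ?, ?)",
    [(1, "a", 0.2), (2, "b", 0.5), (3, "c", 0.9)],
)

df = retrieve(conn, "samples", ["id", "label", "score"], 0.5)

# Round-trip the result through CSV and pickle files.
with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, "samples.csv")
    pkl_path = os.path.join(tmp, "samples.pkl")
    save(df, csv_path)
    save(df, pkl_path)
    from_csv = load(csv_path)
    from_pkl = load(pkl_path)

conn.close()
print(from_csv)
```

Only table and column names that you control should go through the template; anything that comes from user input belongs in the bound parameters.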

Getting Started with Query2DataFrame

To use Query2DataFrame, you need Python 3.8 or higher. Installation is straightforward: clone the repository and install the required libraries as outlined in the project's documentation. You then configure your PostgreSQL database connection by editing the provided config.json file.
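As a rough illustration of the configuration step, the sketch below reads connection settings from a JSON file and turns them into a libpq-style connection string. The key names (`host`, `port`, `dbname`, `user`, `password`) are assumptions, as are the helper names `load_db_config` and `to_dsn`; check the project's own config.json for the real schema.

```python
import json
import os
import tempfile

# Hypothetical key names; the real config.json may use different ones.
REQUIRED_KEYS = ("host", "port", "dbname", "user", "password")


def load_db_config(path):
    """Read the JSON config and fail early if a required key is missing."""
    with open(path, encoding="utf-8") as fh:
        config = json.load(fh)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise KeyError(f"config is missing keys: {', '.join(missing)}")
    return config


def to_dsn(config):
    """Build a keyword/value connection string.

    Assumes the values contain no spaces or quotes; such values would
    need quoting before being used in a real connection string.
    """
    return " ".join(f"{key}={config[key]}" for key in REQUIRED_KEYS)


# Write a throwaway example config so the sketch runs on its own.
example = {
    "host": "localhost",
    "port": 5432,
    "dbname": "mydb",
    "user": "analyst",
    "password": "secret",
}

with tempfile.TemporaryDirectory() as tmp:
    config_path = os.path.join(tmp, "config.json")
    with open(config_path, "w", encoding="utf-8") as fh:
        json.dump(example, fh)
    config = load_db_config(config_path)

dsn = to_dsn(config)
print(dsn)
```

In a real project, keep the password out of version control, for example by excluding the config file from the repository.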

Practical Applications

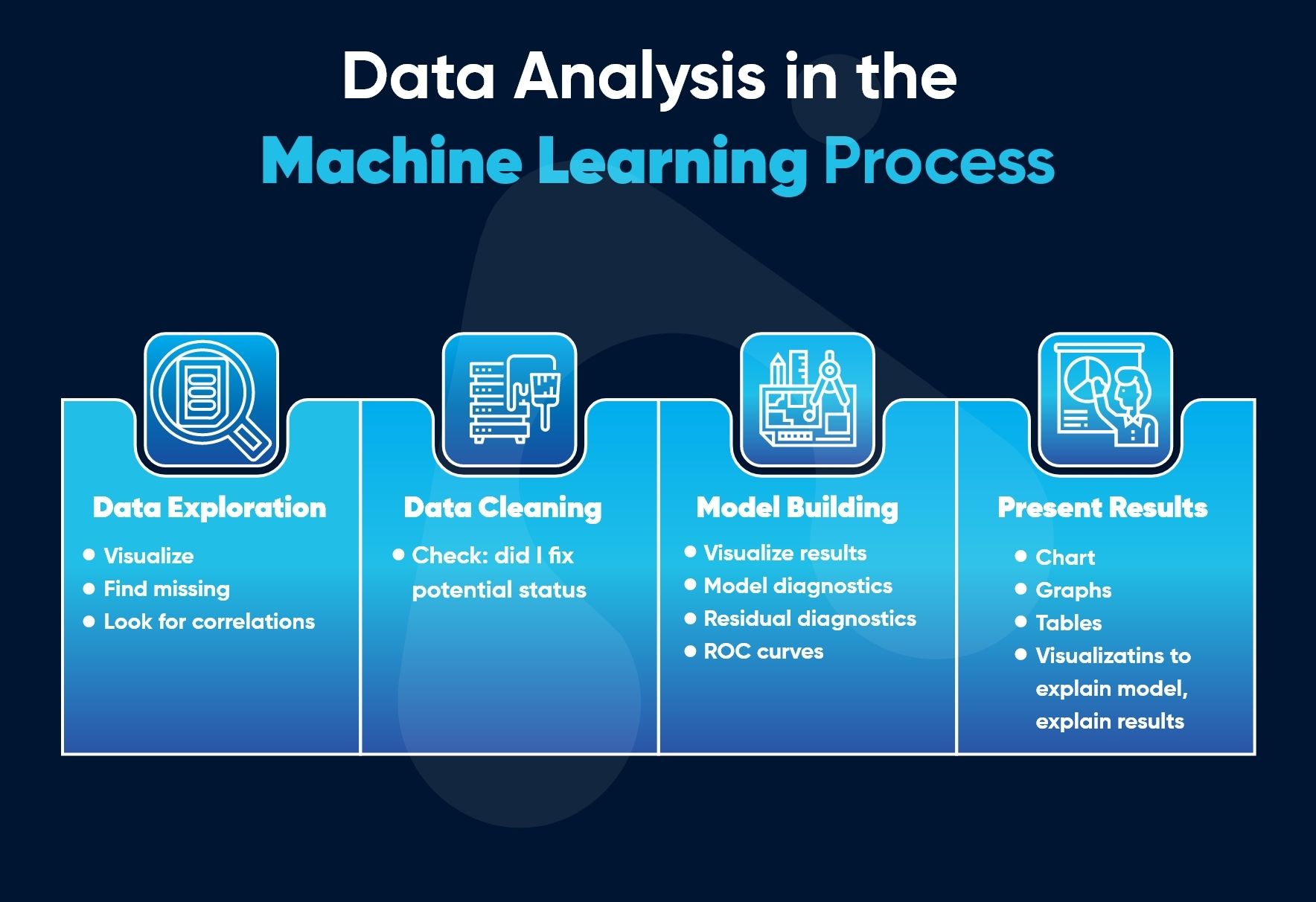

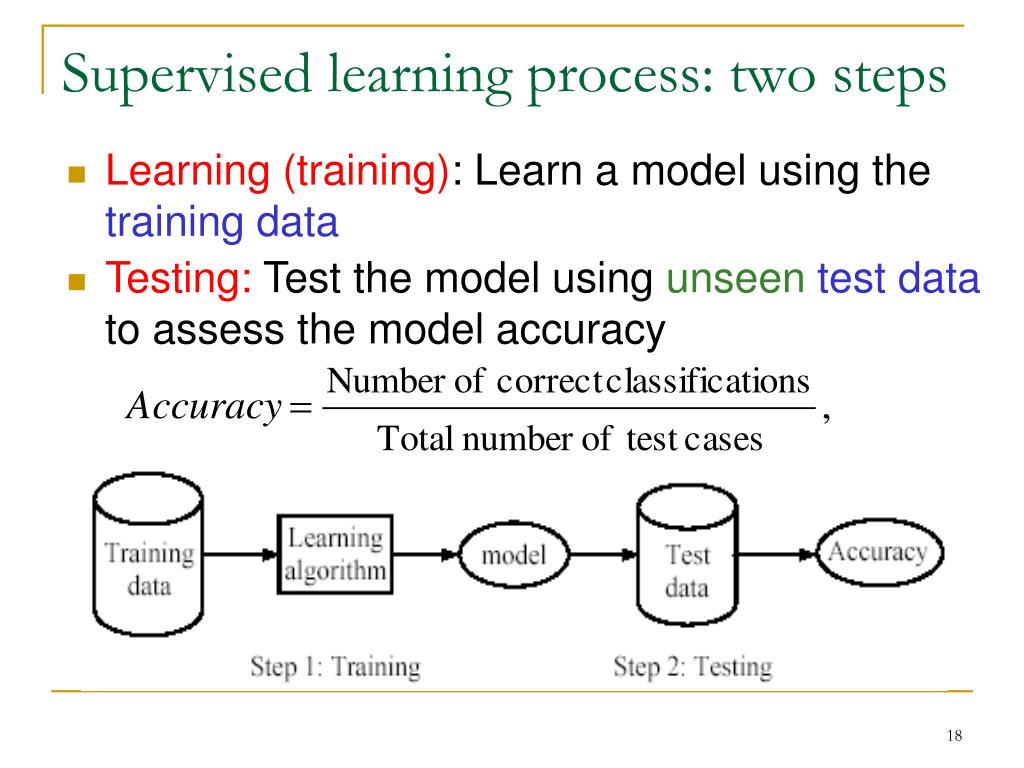

The appeal of Query2DataFrame lies not just in its features but in how it works in practice on machine learning projects. In a project I undertook involving dimensionality reduction, a machine learning technique discussed in previous articles, the tool proved invaluable. It made gathering and preparing the large datasets required for accurate models significantly less daunting.
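For large datasets like these, checkpointing is what keeps a long retrieval from starting over after an interruption. The sketch below shows one common way to do it, not necessarily how Query2DataFrame implements it: rows are fetched in chunks ordered by id, and the last id seen is written to a small JSON file after each chunk. The `measurements` table, the helper names, and the checkpoint format are hypothetical, and SQLite again stands in for PostgreSQL.

```python
import json
import os
import sqlite3
import tempfile


def read_checkpoint(path):
    """Return the last id that was fully fetched, or 0 when starting fresh."""
    if not os.path.exists(path):
        return 0
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)["last_id"]


def write_checkpoint(path, last_id):
    """Record progress so a later run can resume after this id."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump({"last_id": last_id}, fh)


def fetch_in_chunks(conn, checkpoint_path, chunk_size, max_chunks=None):
    """Fetch rows in id order, updating the checkpoint after every chunk.

    max_chunks exists only to simulate an interrupted run in this demo.
    """
    rows = []
    last_id = read_checkpoint(checkpoint_path)
    chunks_done = 0
    while max_chunks is None or chunks_done < max_chunks:
        chunk = conn.execute(
            "SELECT id, value FROM measurements WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, chunk_size),
        ).fetchall()
        if not chunk:
            break
        rows.extend(chunk)
        last_id = chunk[-1][0]
        write_checkpoint(checkpoint_path, last_id)
        chunks_done += 1
    return rows


# Stand-in table with ids 1..10.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE measurements (id INTEGER PRIMARY KEY, value REAL)")
conn.executemany(
    "INSERT INTO measurements VALUES (?, ?)",
    [(i, i * 0.5) for i in range(1, 11)],
)

with tempfile.TemporaryDirectory() as tmp:
    checkpoint_path = os.path.join(tmp, "checkpoint.json")
    # First run stops after two chunks, as if it had been interrupted.
    first_run = fetch_in_chunks(conn, checkpoint_path, chunk_size=3, max_chunks=2)
    # Second run picks up after the last recorded id.
    second_run = fetch_in_chunks(conn, checkpoint_path, chunk_size=3)
    final_checkpoint = read_checkpoint(checkpoint_path)

conn.close()
print([row[0] for row in first_run], [row[0] for row in second_run])
```

This sketch only tracks progress; in real use each chunk would also be written to disk before the checkpoint is updated, so that a resumed run loses no data.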

Enhanced Productivity for Researchers and Developers

The traditional roadblocks of data management can bog down even the most seasoned data scientists. By automating and simplifying the processes of data retrieval and preparation, Query2DataFrame empowers researchers and developers to focus more on analysis and model development, rather than being ensnared in the preliminary stages of data handling.

Conclusion

The advent of tools like Query2DataFrame marks a leap forward in the field of data science and machine learning. They serve not only to enhance efficiency but also to democratize access to advanced data handling capabilities, allowing a broader range of individuals and teams to participate in creating innovative solutions to today’s challenges. As we continue to explore the vast potential of machine learning, tools like Query2DataFrame will undoubtedly play a pivotal role in shaping the future of this exciting domain.

Join the Community

For those interested in contributing to or learning more about Query2DataFrame, I encourage you to dive into the project repository and consider joining the community. Together, we can drive forward advancements in machine learning and AI, making the impossible possible.

Video: Overview of using Query2DataFrame in a machine learning project

In the quest for innovation and making our lives easier through technology, embracing tools like Query2DataFrame is not just beneficial, but essential. The implications for time savings, increased accuracy, and more intuitive data handling processes cannot be overstated.

