The Intersection of Randomness and Algorithms: Celebrating Avi Wigderson’s Turing Award

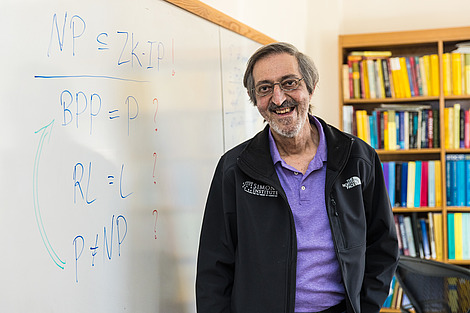

The computing and mathematical communities have long sought to understand the complex relationship between randomness and predictability. That long pursuit is why the 2023 Turing Award, given to mathematician Avi Wigderson, is not just a celebration of individual accomplishment but a testament to the evolving dialogue between mathematics and computer science.

A Lifetime Devoted to Theoretical Computer Science

Over an illustrious career, including his long tenure at the Institute for Advanced Study in Princeton, Wigderson has devoted his professional life to unraveling the mysteries of theoretical computer science. What sets him apart is his focus not merely on finding solutions but on understanding what makes a problem solvable at all. That question has led him to study randomness and unpredictability in computation, and through them the nature of problem-solving itself.

Revolutionizing Algorithmic Approaches

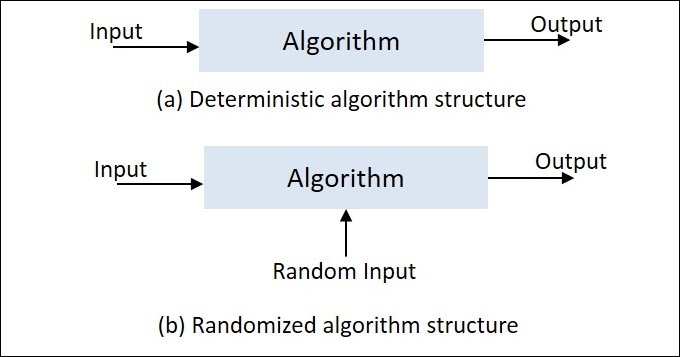

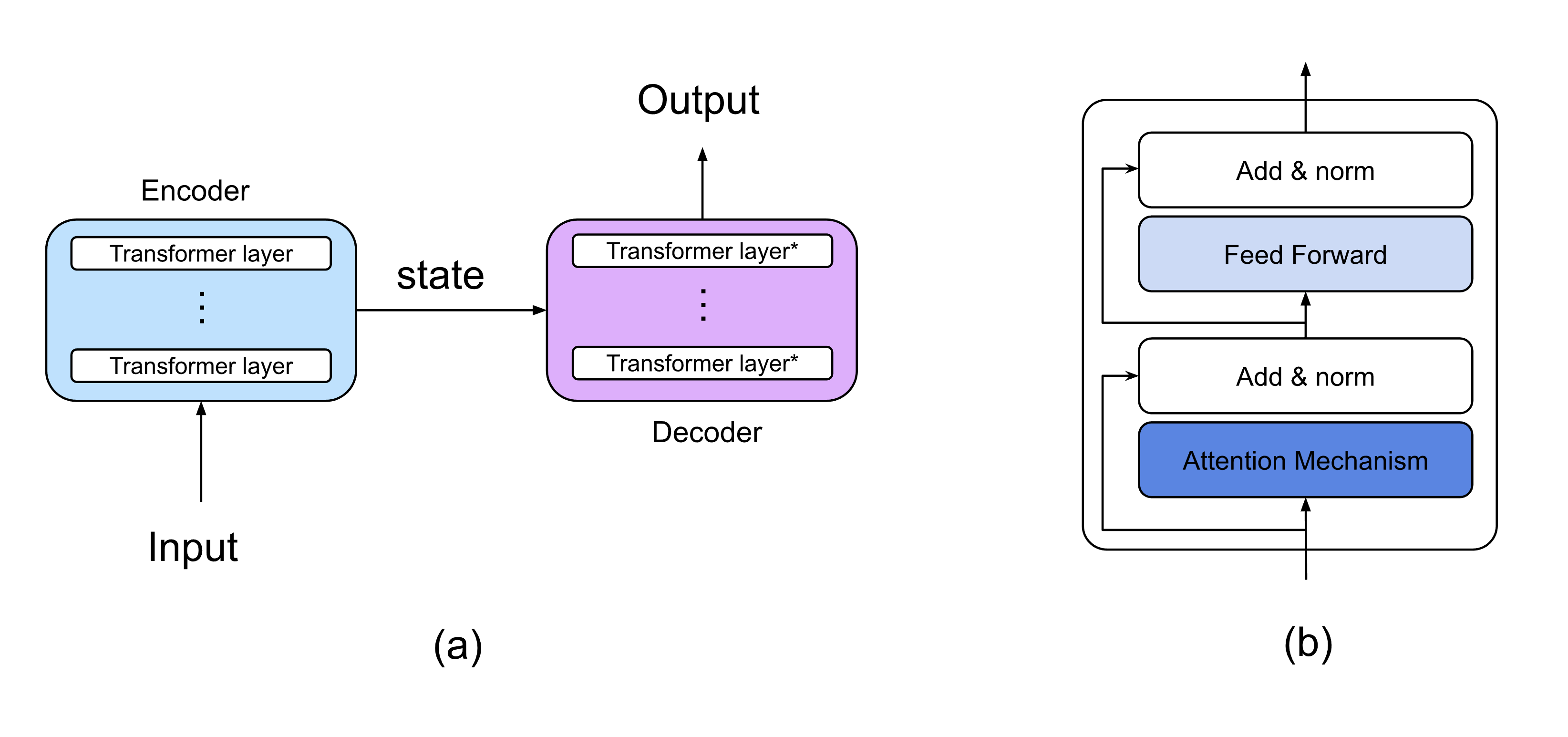

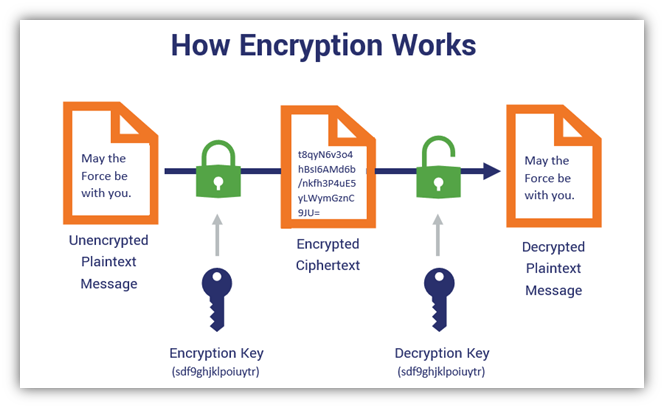

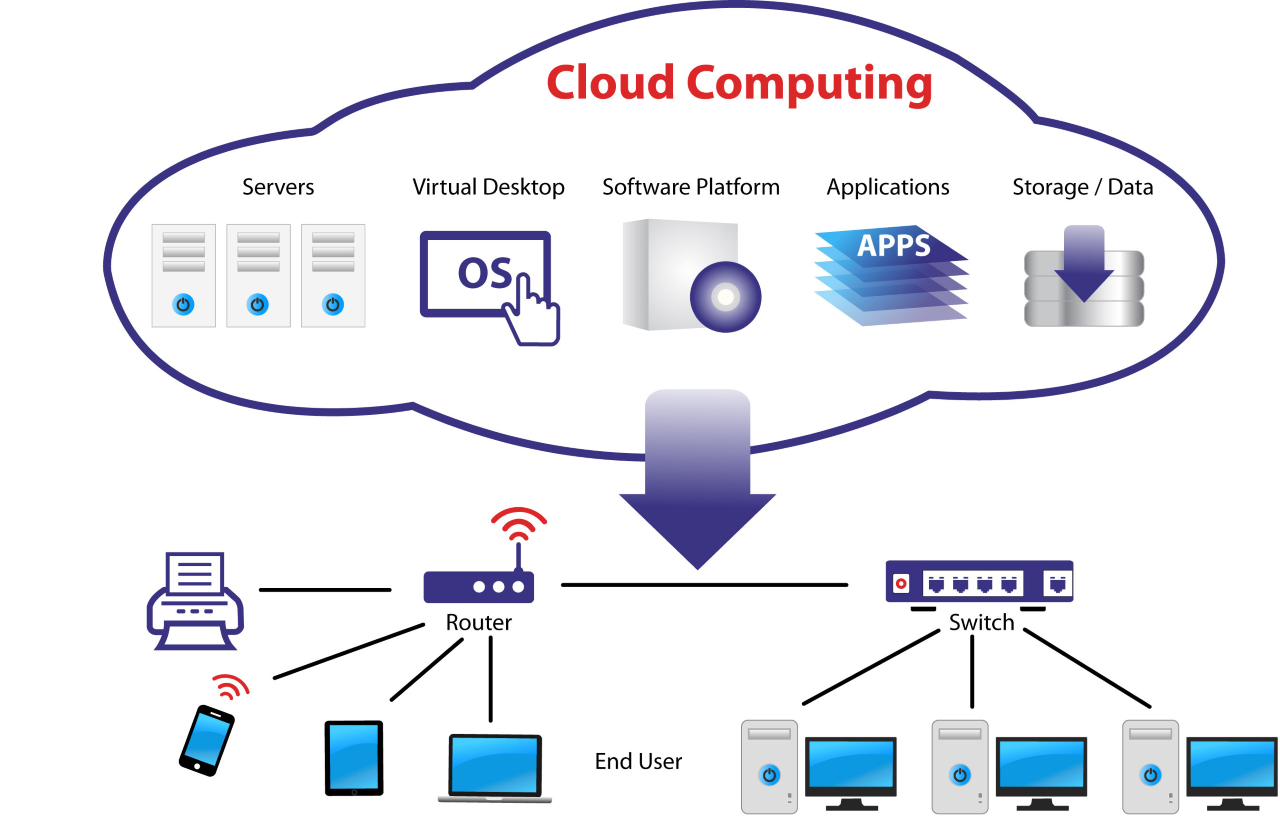

Wigderson's work, beginning in the 1980s, marked a pivotal shift in how the role of randomness in algorithms was understood. Researchers had found that injecting randomness into an algorithm could, paradoxically, lead to simpler and faster solutions. Wigderson's research illuminated the converse: with Noam Nisan, and later with Russell Impagliazzo, he showed that if certain problems are hard enough, every efficient randomized algorithm can be converted into an efficient deterministic one. These discoveries have left an indelible mark on the field, influencing everything from cryptography to cloud computing.
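Freivalds' algorithm, a classic textbook example rather than one of Wigderson's own results, shows how randomness can make an algorithm both simpler and faster. Checking whether A·B = C by multiplying A and B with the schoolbook method takes about n³ operations, whereas multiplying both sides by a random 0/1 vector takes about n² per trial. The following is a minimal Python sketch, assuming small integer matrices stored as lists of rows; the function names `mat_vec` and `freivalds` are illustrative.

```python
import random


def mat_vec(M, v):
    """Return the matrix-vector product M v (M is a list of rows)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def freivalds(A, B, C, trials=20, rng=None):
    """Randomized check of whether A @ B == C for n x n matrices.

    Each trial costs O(n^2) arithmetic operations. If A @ B == C, the
    function always returns True. If not, one trial detects the mismatch
    with probability at least 1/2, so a wrong C survives all trials with
    probability at most 2**-trials.
    """
    rng = rng or random.Random()
    n = len(A)
    for _ in range(trials):
        # Random 0/1 vector r; compare A(Br) with Cr instead of forming A @ B.
        r = [rng.randint(0, 1) for _ in range(n)]
        if mat_vec(A, mat_vec(B, r)) != mat_vec(C, r):
            return False  # a witness r was found: definitely A @ B != C
    return True  # no witness found: A @ B == C with high probability


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(freivalds(A, B, [[19, 22], [43, 50]]))  # True: this C is exactly A @ B
print(freivalds(A, B, [[19, 22], [43, 51]]))  # False, except with probability 2**-20
```

The algorithm errs in only one direction: a "False" answer is always correct, and repeating trials drives the chance of a wrong "True" down exponentially.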

Randomness and the P versus NP Problem

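A cornerstone of Wigderson's legacy is his work on the questions surrounding P versus NP, one of computer science's most famous open problems. Rather than resolving it, he connected it to randomness. His hardness-versus-randomness results show that computational hardness can be turned into pseudorandomness: if some problems are genuinely hard, that hardness can be used to manufacture bits that look random to any efficient algorithm. With Oded Goldreich and Silvio Micali, he also showed that, assuming one-way functions exist, every statement in NP has a zero-knowledge proof, one that convinces a verifier without revealing anything beyond the statement's truth. Together, this work blurs the line between "easy" and "hard" problems in an unexpected way: hardness is not only an obstacle but also a resource.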
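The hardness-versus-randomness results of Nisan and Wigderson, and of Impagliazzo and Wigderson, share a common template. A pseudorandom generator stretches a short seed into the random bits an algorithm consumes, and a deterministic simulation runs the algorithm on every seed and takes a majority vote. When the seed has O(log n) bits, there are only polynomially many seeds. The sketch below shows only that outer loop. It plugs in a trivial, hypothetical `identity_generator` that does no stretching, so it enumerates all 2^n bit strings and runs in exponential time; a real hardness-based generator, which this sketch does not implement, is what would bring the cost down. The names `derandomize`, `freivalds_trial`, and `mat_vec` are illustrative, and Freivalds' matrix check serves only as a convenient randomized test.

```python
from collections import Counter
from itertools import product


def mat_vec(M, v):
    """Return the matrix-vector product M v (M is a list of rows)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def freivalds_trial(instance, r):
    """One randomized trial, with its random bits r passed in explicitly.

    Returns True ("looks equal") or False ("definitely unequal").
    """
    A, B, C = instance
    return mat_vec(A, mat_vec(B, r)) == mat_vec(C, r)


def identity_generator(seed):
    """Hypothetical placeholder generator: no stretching, output = seed.

    A hardness-based pseudorandom generator would instead map an
    O(log n)-bit seed to the many bits the algorithm consumes.
    """
    return seed


def derandomize(algorithm, generator, seed_len, instance):
    """Deterministic simulation: run on every seed, take the majority.

    Correct whenever the algorithm answers correctly on a strict majority
    of the generator's outputs. Ties return False. Cost: 2**seed_len runs.
    """
    votes = Counter(
        algorithm(instance, generator(seed))
        for seed in product((0, 1), repeat=seed_len)
    )
    return votes[True] > votes[False]


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
n = len(A)  # identity generator: seed length equals the n random bits needed
print(derandomize(freivalds_trial, identity_generator, n, (A, B, [[19, 22], [43, 50]])))  # True
print(derandomize(freivalds_trial, identity_generator, n, (A, B, [[19, 22], [43, 51]])))  # False
```

Breaking ties toward False suits one-sided tests like Freivalds', where a wrong C is caught on at least half of all random vectors. In the second call, exactly half of the four seeds expose the mismatch, and the tie correctly yields False.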

Expanding the Frontier: Beyond Computer Science

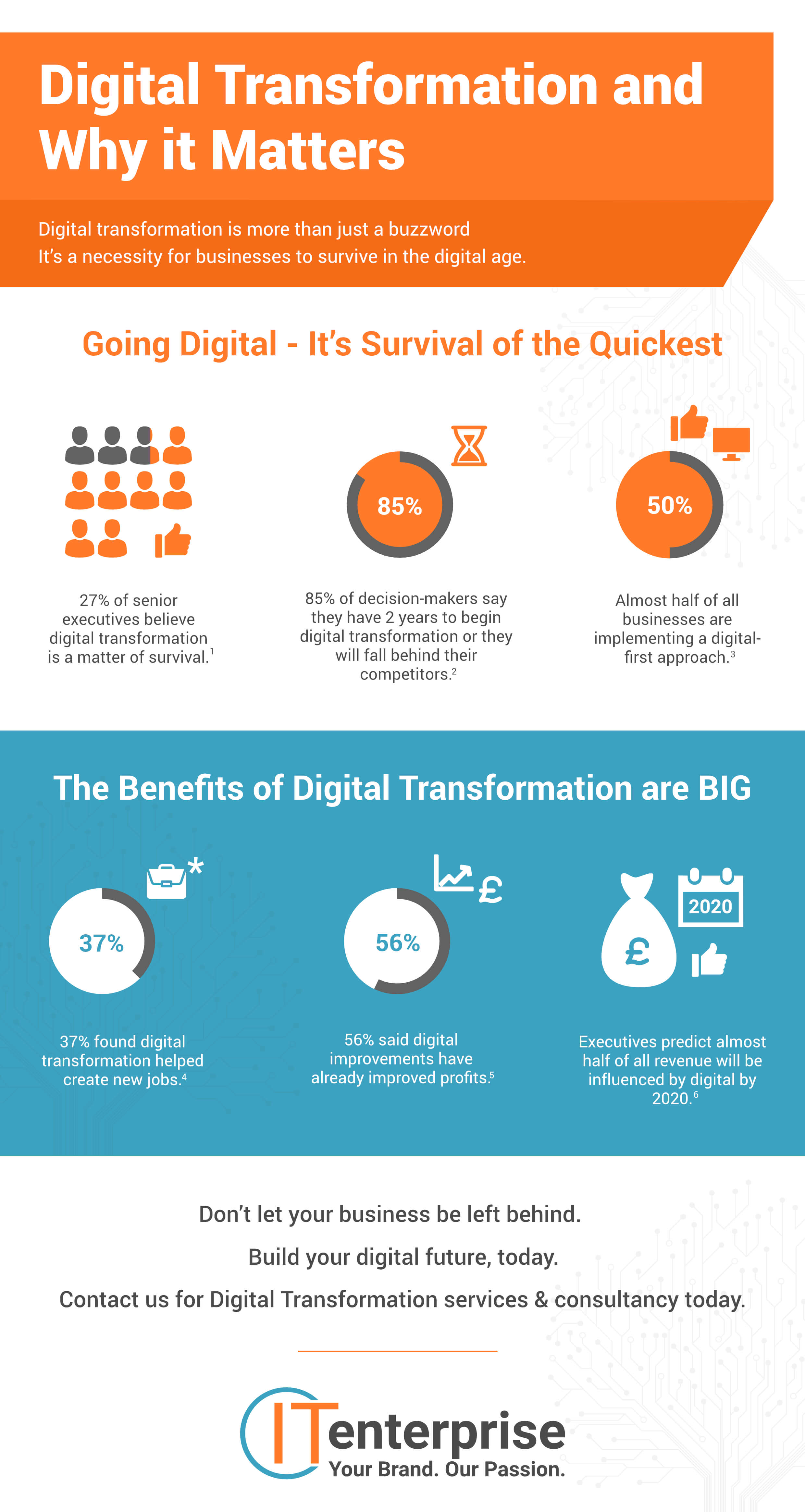

What makes Wigderson's work especially far-reaching is its generality. The ideas about randomness and predictability he has explored are not confined to computer science; they offer a way of thinking about natural processes and human society, from the unpredictability of markets to the spread of disease.

A Legacy of Intersectionality

Wigderson's achievements are emblematic of a broader narrative: the convergence of diverse disciplines. That he has received both the Turing Award and the Abel Prize (the latter in 2021, shared with László Lovász) reflects a growing recognition that the future of innovation lies at the intersection of computer science and mathematics. By harnessing randomness, a concept as ancient as the universe itself, Wigderson has not only advanced our understanding but also reminded us of the beauty in unpredictability.

In Honor of a True Pioneer

For those of us engaged in the exploration of theoretical computer science, Wigderson’s recognition serves as both an inspiration and a challenge. His journey encourages us to look beyond the binary of right answers and wrong ones, to embrace the complexity of the unknown, and to always seek the unifying threads between seemingly disparate fields. As we reflect on Wigderson’s contributions, we are reminded of the boundless potential that lies in the marriage of mathematics and computer science.

In closing, Avi Wigderson's journey illuminates a path forward for all of us. Whether we are pondering the vastness of the cosmos, the intricacy of natural phenomena, or the elegance of a well-crafted algorithm, his work teaches us to appreciate the interplay between determinism and randomness. As we celebrate his achievements today, we also look forward to the new horizons his work opens for future explorers of theoretical computer science and mathematics.

As we explore this fascinating intersection further, we carry forward the torch Wigderson lit, inspired by the knowledge still awaiting discovery and by the promise of unlocking more of the mysteries woven into the fabric of our universe.
